Hi,

I am training LeNet-5 on MRI data; the training inputs are 2D slices of the MRI volumes.

I used DataLoader:

    train_loader = DataLoader(data_train,
                              batch_size=options.batch_size,
                              shuffle=options.shuffle,
                              num_workers=32,
                              drop_last=True,
                              pin_memory=True)

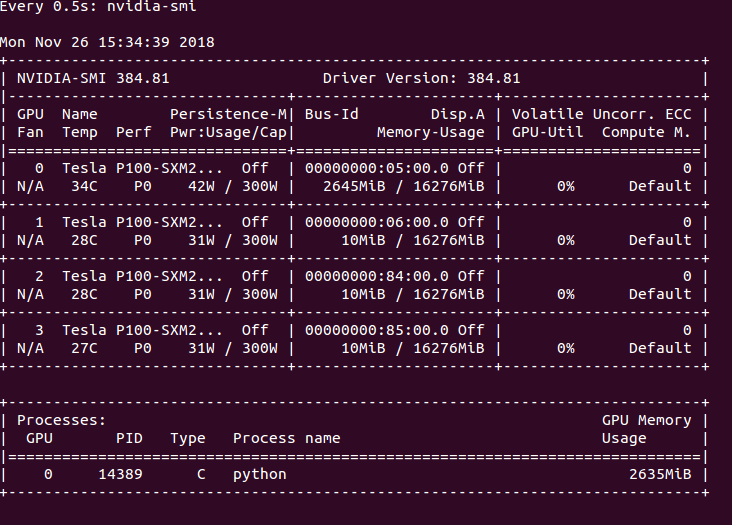

I chose to train the model on the GPU, and I think I have configured it correctly. However, training is extremely slow. GPU memory usage is high, but the volatile GPU-Util is quite low, as the screenshot above shows.

I have read related questions describing similar problems. Here is the log with the time taken for every batch (the value in parentheses is the running average):

    For batch 0 slice 0 training loss is : 2.023055
    For batch 0 slice 0 training accuracy is : 0.437500
    Time 561.966 (561.966)
    The group true label is tensor([1, 1, 0, 0, 1, 0, 0, 1, 0, 0, 0, 1, 1, 0, 0, 1, 0, 1, 0, 1, 1, 0, 0, 0,
            0, 0, 0, 1, 0, 1, 0, 0], device='cuda:0')
    output.device: cuda:0
    ground_truth.device: cuda:0
    For batch 1 slice 0 training loss is : 4.705386
    For batch 1 slice 0 training accuracy is : 0.437500
    Time 18.963 (290.464)
    The group true label is tensor([0, 0, 1, 1, 0, 0, 0, 1, 0, 0, 0, 0, 1, 1, 0, 0, 0, 1, 0, 0, 0, 1, 1, 0,
            1, 1, 0, 1, 0, 1, 0, 1], device='cuda:0')
    output.device: cuda:0
    ground_truth.device: cuda:0
    For batch 2 slice 0 training loss is : 2.769625
    For batch 2 slice 0 training accuracy is : 0.468750
    Time 18.198 (199.709)

Loading the data takes very long. Any ideas? I manually apply several preprocessing steps to the 2D slices, such as creating a fake RGB image and resizing it. Could these preprocessing steps be the cause?
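To check whether the time goes into loading and preprocessing or into the training step itself, the two could be timed separately. Below is a minimal sketch, not the code used for the log above: the helper `time_loader_vs_step` and the stand-in `slow_loader` are hypothetical names introduced only for illustration. The helper accepts any iterable, so a real `DataLoader` could be passed in place of the stand-in.

```python
import time


def time_loader_vs_step(loader, step_fn, max_batches=10):
    """Time the wait for each batch separately from the work done on it.

    Returns two lists of seconds: (data_times, step_times).
    Hypothetical helper for illustration; `loader` can be any iterable.
    """
    data_times, step_times = [], []
    batches = iter(loader)
    for _ in range(max_batches):
        t0 = time.perf_counter()
        try:
            batch = next(batches)  # time spent waiting for loading/preprocessing
        except StopIteration:
            break
        t1 = time.perf_counter()
        # With a CUDA model, step_fn should end with torch.cuda.synchronize(),
        # because GPU kernels run asynchronously and would otherwise look free.
        step_fn(batch)
        t2 = time.perf_counter()
        data_times.append(t1 - t0)
        step_times.append(t2 - t1)
    return data_times, step_times


def slow_loader(n_batches, delay):
    """Stand-in for a loader whose preprocessing costs `delay` seconds per batch."""
    for i in range(n_batches):
        time.sleep(delay)
        yield i


if __name__ == "__main__":
    data_t, step_t = time_loader_vs_step(slow_loader(3, 0.05), lambda batch: None)
    print("mean wait on loader:", sum(data_t) / len(data_t))
    print("mean step time:     ", sum(step_t) / len(step_t))
```

If the wait on the loader dominates, the preprocessing (fake RGB conversion, resizing) or the worker setup would be the first place to look; if the step time dominates, the model or the device transfers would be.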

Best

Hao