Problem

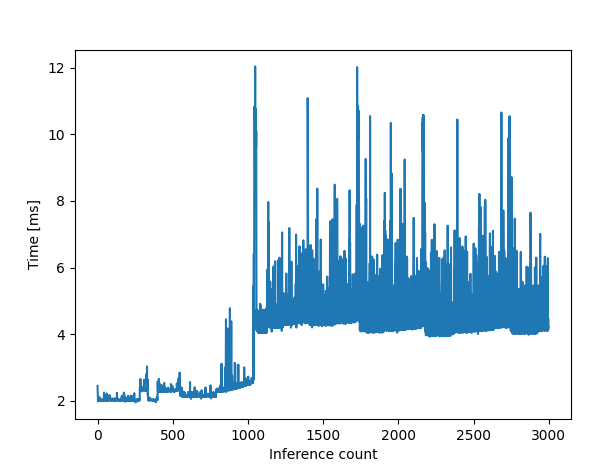

I have a ResNet that I want to run in a loop for a real-time application. During deployment I noticed that the inference time is very inconsistent: at first a call takes only ~2 ms, but after a while it occasionally spikes to ~12 ms.

Example

To Reproduce

I have already managed to narrow down the circumstances:

- It only happens if I load data from my hard drive at the same time.

- It happened on multiple Windows systems but not on Linux.

- The latency comes from moving my tensor to the GPU with `.to("cuda")` and moving the result back with `.to("cpu")`.
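
One way to check the last point is to time each stage on its own, synchronizing before and after so that queued GPU work is charged to the stage that launched it. The following is only a sketch: `time_stages` and `demo_with_torch` are hypothetical helper names, and the small `Conv2d` layer is a stand-in for the ResNet so the snippet runs without downloading weights.

```python
from time import perf_counter_ns


def time_stages(stages, sync=lambda: None):
    """Run (name, fn) pairs in order, passing each result to the next stage.

    Returns the final result and a dict mapping stage name to duration in ms.
    `sync` is called before and after every stage.
    """
    timings = {}
    value = None
    for name, fn in stages:
        sync()
        start = perf_counter_ns()
        value = fn(value)
        sync()  # wait for pending GPU work before stopping the clock
        timings[name] = (perf_counter_ns() - start) / 1e6
    return value, timings


def demo_with_torch(iterations=5):
    """Hypothetical usage with PyTorch; falls back to the CPU without CUDA."""
    import torch  # imported here so time_stages itself has no dependencies

    device = "cuda" if torch.cuda.is_available() else "cpu"
    sync = torch.cuda.synchronize if device == "cuda" else (lambda: None)
    # Stand-in model (assumption): replace with the real ResNet.
    model = torch.nn.Conv2d(3, 8, kernel_size=3).to(device).eval()
    tensor = torch.rand(1, 3, 224, 224)
    stages = [
        ("to_device", lambda _: tensor.to(device)),
        ("forward", lambda x: model(x)),
        ("to_cpu", lambda y: y.to("cpu")),
    ]
    with torch.no_grad():
        for _ in range(iterations):
            _, timings = time_stages(stages, sync)
            print(timings)
```

Calling `demo_with_torch()` on the affected machine, with the disk read added between iterations, should show which of the three stages grows when a spike occurs.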

I wrote a minimal example:

from time import perf_counter_ns

import torch
import cv2 as cv
import matplotlib.pyplot as plt
import numpy as np

model = torch.hub.load('pytorch/vision:v0.10.0', 'resnet18', pretrained=True)
model.eval()
model = model.to("cuda")

tensor = torch.Tensor(np.random.rand(1, 3, 224, 224).astype(np.float32))
torch.cuda.synchronize()

timestamps = []
for x in range(3000):
    # Time the host-to-device copy, forward pass, and device-to-host copy together
    start = perf_counter_ns()
    model(tensor.to("cuda")).to("cpu")
    d_t = perf_counter_ns() - start
    timestamps.append(d_t)

    # Read from disk between inferences; the spikes only appear with this load
    cv.imread(r"path/to/some/image.png")

# Plot the durations in ms, skipping the first iteration
plt.plot(np.array(timestamps[1:]) * 1e-6)
plt.xlabel("Inference count")
plt.ylabel("Time [ms]")
plt.show()
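
Besides the plot, the spikes can be quantified with a few summary numbers. This is a minimal sketch using only the standard library; `summarize_latencies` and the `spike_factor` threshold are hypothetical choices, not part of the original script.

```python
from statistics import median


def summarize_latencies(durations_ns, spike_factor=3.0):
    """Summarize a non-empty list of durations (ns) in milliseconds.

    A sample counts as a spike if it exceeds spike_factor times the median.
    """
    ms = sorted(d / 1e6 for d in durations_ns)
    med = median(ms)
    # Nearest-rank style 99th percentile, clamped to the last element
    p99 = ms[min(len(ms) - 1, (99 * len(ms)) // 100)]
    spikes = sum(1 for m in ms if m > spike_factor * med)
    return {"median_ms": med, "p99_ms": p99, "max_ms": ms[-1], "spikes": spikes}
```

For the script above, `summarize_latencies(timestamps[1:])` would report the median, the 99th percentile, the maximum, and how many iterations exceeded three times the median.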

System Information

- OS: Windows 11

- GPU: RTX 4070 Ti

- Python: 3.11.7

- torch: 2.1.2+cu118

- torchvision: 0.16.2+cu118