Hello all,

I am training a simple RNN to predict a label at each input timestep on a large random dataset.

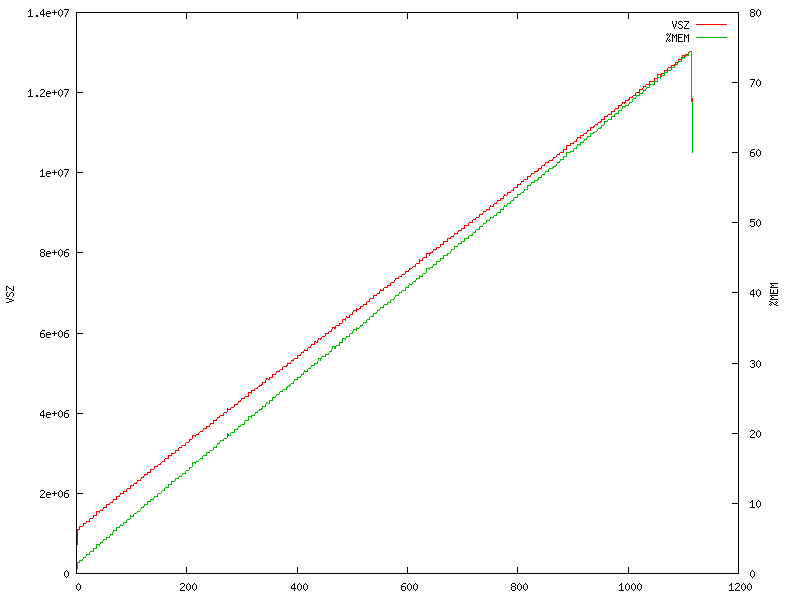

I recorded memory usage during training and noticed that it grows linearly with the amount of data processed:

(VSIZE = virtual memory as reported by Ubuntu, %MEM = percentage of RAM used, x-axis = time in seconds)
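For anyone who wants to log the same kind of numbers from inside the script, here is a minimal sketch, assuming Linux (it reads `/proc/self/status`). This is not necessarily how the plot was produced, and the helper name `memory_kb` is hypothetical.

```python
def memory_kb():
    """Return (VmSize, VmRSS) of the current process in kB.

    Assumption: Linux only, since it parses /proc/self/status.
    VmSize corresponds to virtual memory, VmRSS to resident memory.
    """
    fields = {}
    with open("/proc/self/status") as f:
        for line in f:
            key, _, value = line.partition(":")
            if key in ("VmSize", "VmRSS"):
                # The value looks like "   123456 kB"; keep the number only.
                fields[key] = int(value.split()[0])
    return fields["VmSize"], fields["VmRSS"]


if __name__ == "__main__":
    vsize, rss = memory_kb()
    print("VmSize: %d kB, VmRSS: %d kB" % (vsize, rss))
```

Calling `memory_kb()` every few hundred iterations of the training loop and printing the result should be enough to see whether usage keeps climbing.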

My training script for reference:

    import torch
    import torch.nn as nn
    import torch.optim as optim
    from torch.autograd import Variable

    class testNet(nn.Module):
        def __init__(self):
            super(testNet, self).__init__()
            self.rnn = nn.RNN(input_size=200, hidden_size=1000, num_layers=1)
            self.linear = nn.Linear(1000, 100)

        def forward(self, x, init):
            x = self.rnn(x, init)[0]
            y = self.linear(x.view(x.size(0)*x.size(1), x.size(2)))
            return y.view(x.size(0), x.size(1), y.size(1))

    net = testNet()
    init = Variable(torch.zeros(1, 4, 1000))
    criterion = nn.CrossEntropyLoss()
    optimizer = optim.SGD(net.parameters(), lr=0.01, momentum=0.9)

    total_loss = 0.0
    for i in range(10000):  # 10000 mini-batches
        input = Variable(torch.randn(1000, 4, 200))  # seq_len = 1000, batch_size = 4, features = 200
        target = Variable(torch.LongTensor(4, 1000).zero_())
        optimizer.zero_grad()
        output = net(input, init)
        loss = criterion(output.view(-1, output.size(2)), target.view(-1))
        loss.backward()
        optimizer.step()
        total_loss += loss[0]

    print(total_loss)

I expected memory usage not to grow from one mini-batch to the next. What might be the problem? (Please correct me if my script is wrong.)