Hi

I am working on a task very similar to translation.

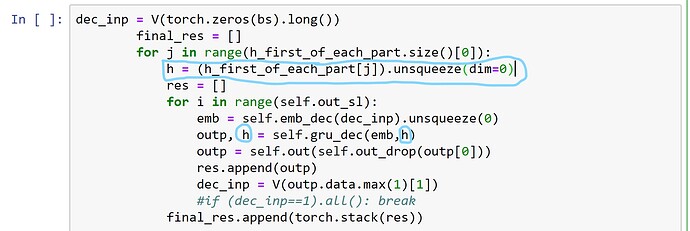

There are two nested for loops in the picture.

When j = 0, I assign something to `h` and do some work in the inner loop.

Then, when j = 1, I assign something else to `h`, and …

Doesn't the j = 1 step remove the gradient history from j = 0 (at backpropagation time)?

If that is the case, how can I fix it?
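One way to check this directly is a small stand-alone version of the loop. The sketch below assumes PyTorch, since the post does not name the framework. `W`, `inputs`, the tensor shapes, and `initial_states` are hypothetical stand-ins for whatever is in the picture. Each outer step rebinds `h`, the inner loop updates it, and every step's output is added to a single loss before `backward()` is called.

```python
import torch

torch.manual_seed(0)
# Hypothetical stand-ins for the model in the picture (names and shapes assumed).
W = torch.randn(4, 4, requires_grad=True)   # weight shared by all steps
inputs = torch.randn(3, 4)                  # inner-loop inputs, one row per step

initial_states = []  # kept only so each step's starting h can be inspected later
total_loss = 0.0
for j in range(2):                          # outer loop: j = 0, then j = 1
    # "Assign something to h": rebinds the Python name h on every outer step.
    h = torch.randn(4, requires_grad=True)
    initial_states.append(h)
    for x in inputs:                        # inner loop keeps updating h
        h = torch.tanh(W @ h + x)
    # Add this step's output to the loss so j = 0 also contributes to backward().
    total_loss = total_loss + h.sum()

total_loss.backward()
grads = [h0.grad for h0 in initial_states]
print([g is not None for g in grads])
```

Here both entries come out `True`: the j = 0 computation still receives gradients even though the name `h` was rebound at j = 1. Autograd keeps the graph alive through the tensors the loss depends on, not through the Python variable name. If only the final step's `h` fed into the loss, the j = 0 branch would get no gradient simply because it would not contribute to that loss.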