So, for example, if I feed only the x, y coordinates at consecutive timesteps to an encoder and try to predict future x, y coordinates with a decoder, how confident can I be that this RNN will learn something useful? And suppose that, to help the RNN, I add some supporting information, but only at the decoder stage and not at the encoder stage. How well can the RNN then learn weights that predict the x, y coordinates?
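To make the setup concrete, here is a minimal forward-pass sketch in plain Python (no training loop). It assumes vanilla tanh RNN cells, an encoder that sees only raw (x, y) pairs, and a decoder whose input at each step is the previous position concatenated with a fixed context vector. All names (`Seq2SeqSketch`, `context_dim`, `horizon`) are hypothetical, and predicting per-step displacements rather than absolute positions is a design choice of this sketch, not something taken from any paper.

```python
import math
import random


def init_matrix(rows, cols, rng, scale=0.1):
    """Random weight matrix (list of rows) with small entries in [-scale, scale]."""
    return [[rng.uniform(-scale, scale) for _ in range(cols)] for _ in range(rows)]


def matvec(m, v):
    """Matrix-vector product for list-of-lists matrices."""
    return [sum(w * x for w, x in zip(row, v)) for row in m]


def rnn_step(w_in, w_h, b, x, h):
    """One vanilla (Elman) RNN step: h' = tanh(W_in x + W_h h + b)."""
    a = matvec(w_in, x)
    r = matvec(w_h, h)
    return [math.tanh(ai + ri + bi) for ai, ri, bi in zip(a, r, b)]


class Seq2SeqSketch:
    """Hypothetical encoder-decoder: the encoder sees only (x, y);
    the context vector is concatenated to the decoder input only."""

    def __init__(self, hidden=8, context_dim=3, seed=0):
        rng = random.Random(seed)
        self.hidden = hidden
        self.enc_in = init_matrix(hidden, 2, rng)
        self.enc_h = init_matrix(hidden, hidden, rng)
        self.enc_b = [0.0] * hidden
        self.dec_in = init_matrix(hidden, 2 + context_dim, rng)
        self.dec_h = init_matrix(hidden, hidden, rng)
        self.dec_b = [0.0] * hidden
        self.out = init_matrix(2, hidden, rng)

    def forward(self, past_xy, context, horizon):
        # Encoder: consumes raw coordinates only, never the context.
        h = [0.0] * self.hidden
        for xy in past_xy:
            h = rnn_step(self.enc_in, self.enc_h, self.enc_b, list(xy), h)
        # Decoder: starts from the encoder's final hidden state; each input
        # is the previous position concatenated with the context vector.
        prev = list(past_xy[-1])
        preds = []
        for _ in range(horizon):
            h = rnn_step(self.dec_in, self.dec_h, self.dec_b, prev + list(context), h)
            # Read out a displacement and add it to the previous position.
            dx, dy = matvec(self.out, h)
            prev = [prev[0] + dx, prev[1] + dy]
            preds.append(tuple(prev))
        return preds


model = Seq2SeqSketch()
past = [(0.0, 0.0), (1.0, 0.5), (2.0, 1.0)]
print(model.forward(past, context=[0.5, -0.2, 1.0], horizon=4))
```

With this wiring, a training loss on the decoder outputs would still send gradients to the encoder weights, but only through the final encoder hidden state `h` that initializes the decoder; the encoder itself never receives the context as input.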

I ask because many papers that predict spatial coordinates simply feed the raw x, y coordinates to the RNN encoder. That seems a bit naive to me, even if the encoded representation is later supplemented with additional information (such as features extracted from the images). Feeding a sequence of raw numbers to the encoder without any other 'contextual' information might mean the encoder learns only something trivial, like a constant-velocity Kalman filter, even after gradients are backpropagated from the later stages where more information is added.
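One way to check whether the encoder has learned more than constant velocity is to compare the model against a constant-velocity baseline. The sketch below is a simplified stand-in for the Kalman filter mentioned above: it extrapolates the velocity estimated from the last two observed points, which is what the mean prediction of a constant-velocity model does, but without any filtering of noise. The function names are hypothetical; average displacement error (mean Euclidean distance over the horizon) is a common trajectory metric.

```python
import math


def constant_velocity_forecast(past_xy, horizon):
    """Extrapolate the last observed velocity (position change per step)."""
    if len(past_xy) < 2:
        raise ValueError("need at least two observed positions")
    (x0, y0), (x1, y1) = past_xy[-2], past_xy[-1]
    vx, vy = x1 - x0, y1 - y0
    return [(x1 + k * vx, y1 + k * vy) for k in range(1, horizon + 1)]


def average_displacement_error(pred, truth):
    """Mean Euclidean distance between predicted and true positions."""
    return sum(math.dist(p, t) for p, t in zip(pred, truth)) / len(truth)


past = [(0.0, 0.0), (1.0, 0.5), (2.0, 1.0)]
print(constant_velocity_forecast(past, 3))  # [(3.0, 1.5), (4.0, 2.0), (5.0, 2.5)]
```

If a trained encoder-decoder achieves roughly the same displacement error as this baseline on held-out trajectories, that would be some evidence the coordinate-only encoder has not learned much beyond linear extrapolation.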

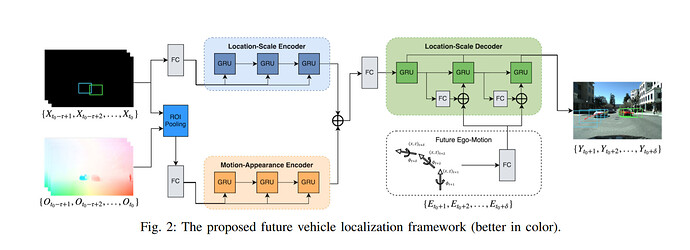

For example, the image above is from this paper: https://arxiv.org/pdf/1809.07408.pdf

Look at the Location-Scale Encoder, where they feed only the bounding box coordinates at each encoder time step. Isn't it a bad idea to feed bare numerical values like this with no other contextual information? Even though they later combine the encoded representation with image information, I suspect this part of the architecture might not be trained properly.

However, if so many researchers are doing this, I am probably missing something. What do you guys think?