Example:

```python
import torch
import torch.nn.functional as F

z = torch.tensor([3, -1, 2.])
y = torch.tensor([1, 0, 2]).float()

# reduction='none' returns the per-element loss
yhat = F.binary_cross_entropy_with_logits(z, y, reduction='none')

print(yhat)
```

Out:

```
tensor([ 0.0486, 0.3133, -1.8731])
```

Can you clarify why PyTorch returns a negative value for this cross-entropy loss?

You are passing targets that are out of bounds: each target should be a probability. From the docs:

target: Tensor of the same shape as input with values between 0 and 1
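To see where the negative value comes from, here is a small sketch that recomputes the loss by hand with the standard numerically stable form of binary cross-entropy on logits, `max(z, 0) - z*y + log(1 + exp(-|z|))`. The helper name `bce_with_logits_manual` is made up for illustration. The `-z*y` term keeps growing once `y > 1`, which is what pushes the last entry below zero.

```python
import torch
import torch.nn.functional as F

def bce_with_logits_manual(z, y):
    # Hypothetical helper: stable form of -[y*log(sigmoid(z)) + (1-y)*log(1-sigmoid(z))]
    return z.clamp(min=0) - z * y + torch.log1p(torch.exp(-z.abs()))

z = torch.tensor([3.0, -1.0, 2.0])
y = torch.tensor([1.0, 0.0, 2.0])  # the last target is outside [0, 1]

manual = bce_with_logits_manual(z, y)
builtin = F.binary_cross_entropy_with_logits(z, y, reduction='none')
print(manual)
print(torch.allclose(manual, builtin))

# For z = 2, y = 2: 2 - 2*2 + log(1 + exp(-2)) is roughly -1.873,
# so the out-of-range target alone produces the negative "loss".
```

With every target in [0, 1], the same formula can never go below zero.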


I know this looks strange. But why should the target be a probability? I haven't found that word in the docs.

What does a target of 0.5 mean?

A target value of 0.5 would indicate that the sample belongs to both classes with the same weight.
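One way to see this "equal weight" interpretation is to sweep the logit against a target of 0.5. The sketch below (sweep range and step size are arbitrary choices) finds that the loss is smallest at logit 0, i.e. a predicted probability of 0.5, and that the minimum is log(2) rather than 0.

```python
import torch
import torch.nn.functional as F

# Sweep logits from -4 to 4 and evaluate BCE against a soft target of 0.5
z = torch.linspace(-4, 4, steps=81)
y = torch.full_like(z, 0.5)
loss = F.binary_cross_entropy_with_logits(z, y, reduction='none')

best = z[loss.argmin()]
print(best.item())                 # logit with the lowest loss (about 0)
print(torch.sigmoid(best).item())  # corresponding probability (about 0.5)
print(loss.min().item())           # about log(2), not 0
```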

You can interpret the target values as probabilities, since they are supposed to be in [0, 1], or as weights if that fits your understanding better. In any case, you should not pass targets outside this range, as this yields invalid losses such as your negative one.
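Since `F.binary_cross_entropy_with_logits` happily returns a value for out-of-range targets (as the original example shows), a cheap guard can catch the mistake early. This is a sketch only; `checked_bce_with_logits` is a hypothetical wrapper, not part of PyTorch.

```python
import torch
import torch.nn.functional as F

def checked_bce_with_logits(logits, target, **kwargs):
    # Hypothetical wrapper: reject targets outside [0, 1] before computing the loss
    if target.min() < 0 or target.max() > 1:
        raise ValueError(
            f"BCE targets must lie in [0, 1], got range "
            f"[{target.min().item()}, {target.max().item()}]"
        )
    return F.binary_cross_entropy_with_logits(logits, target, **kwargs)

z = torch.tensor([3.0, -1.0, 2.0])
print(checked_bce_with_logits(z, torch.tensor([1.0, 0.0, 0.5])))  # accepted

try:
    checked_bce_with_logits(z, torch.tensor([1.0, 0.0, 2.0]))  # rejected
except ValueError as err:
    print(err)
```

The check costs two reductions per call, so it may be worth running only while debugging.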

Does this apply to image matting, where some pixels are partially transparent (i.e. have alpha values between 0 and 1)?

I don’t fully understand the issue with the alpha channel. Wouldn’t your target be a simple mask or are you adding the alpha channel to the (binary) mask as well? In the latter case, wouldn’t it be possible to let the alpha values be in [0, 1] (same as the mask values)?
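As a sketch of keeping alpha values in [0, 1], assuming the alpha matte is stored as an 8-bit channel (0 = background, 255 = fully opaque) with shape `[N, 1, H, W]`, scaling by 255 gives a valid soft target for BCE. The tiny tensor and the all-zero logits below are placeholders, not real data or model output.

```python
import torch
import torch.nn.functional as F

# Assumed 8-bit alpha matte, shaped [N, 1, H, W] like a single-channel mask
alpha_u8 = torch.tensor([[[[0, 128], [255, 64]]]], dtype=torch.uint8)

# Scale to [0, 1] so it can serve directly as a soft BCE target
target = alpha_u8.float() / 255.0

logits = torch.zeros_like(target)  # placeholder for the model output
loss = F.binary_cross_entropy_with_logits(logits, target)
print(target)
print(loss)
```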

I am currently running your U-Net from the git repo to do image matting, and I am experimenting along the way.

The tiny dataset I am using has mask images containing only zeros and ones, with no alpha channel. With binary targets like these I was comfortable using CE.

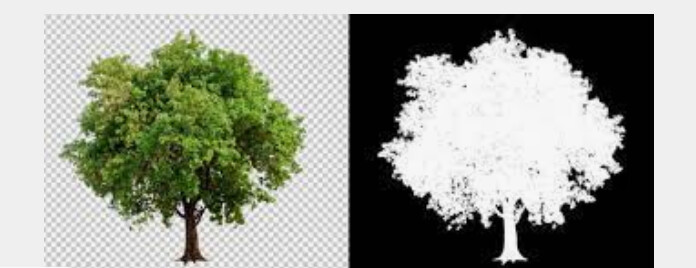

But I compared BCE and CE, and BCE would have an advantage where the image has an alpha channel (like the one on the left, where full opacity is 1.0).

I can imagine using partially transparent mask images, where alpha lies between zero and one at some pixels. The matting might then look better than with coarse zeros and ones.

This corresponds to the alpha targets you mentioned. But if you use argmax, how would you predict the subtle foreground edges that BCE is trained on?

You would not use argmax in this case, since nn.BCEWithLogitsLoss is used for a multi-label classification use case, where a threshold is used on the logits.
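A minimal sketch of the threshold-based prediction: each class is decided independently, and `logits > 0` gives the same decision as `sigmoid(logits) > 0.5`. The logit values are made up. The last line shows one possible option for matting-style soft targets, using the sigmoid output directly as a predicted alpha; that is an assumption of this sketch, not something stated in the thread.

```python
import torch

logits = torch.tensor([[2.3, -0.7, 0.1],
                       [-1.5, 0.0, 4.0]])  # made-up multi-label logits

# Multi-label decision: threshold each class independently
pred_from_logits = logits > 0
pred_from_probs = torch.sigmoid(logits) > 0.5
print(pred_from_logits)
print(torch.equal(pred_from_logits, pred_from_probs))

# Assumption: for soft (alpha) targets, skip the threshold and keep the probabilities
alpha_pred = torch.sigmoid(logits)
```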

If you want to work on multi-class segmentation, use nn.CrossEntropyLoss, but it would not fit your use case of predicting the alpha channel in addition to the pixel class label.
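For contrast, a sketch of the multi-class segmentation setup with `nn.CrossEntropyLoss`: the model output has shape `[N, C, H, W]`, the target holds one class index per pixel as `int64` with shape `[N, H, W]`, and argmax over the class dimension gives the prediction. The sizes and random tensors are arbitrary placeholders.

```python
import torch
import torch.nn as nn

torch.manual_seed(0)
N, C, H, W = 2, 3, 4, 4  # batch, classes, height, width (arbitrary)

logits = torch.randn(N, C, H, W)         # placeholder for the model output
target = torch.randint(0, C, (N, H, W))  # one class index per pixel (int64)

criterion = nn.CrossEntropyLoss()
loss = criterion(logits, target)

# Exactly one label per pixel, so argmax over the class dim is the prediction
pred = logits.argmax(dim=1)  # shape [N, H, W]
print(loss.item(), pred.shape)
```

Because every pixel gets exactly one class, this setup has no place for a fractional alpha value.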

OK, it took me some time, but now I see that image matting differs from semantic segmentation in that some pixels belong partly to the foreground and partly to the background (partial or mixed pixels).
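Mixed pixels are commonly described by the compositing equation used in image matting, `I = alpha * F + (1 - alpha) * B`, where each observed color is a blend of a foreground color `F` and a background color `B`. The colors below are made up for illustration.

```python
import torch

fg = torch.tensor([1.0, 0.0, 0.0])  # red foreground (RGB in [0, 1])
bg = torch.tensor([0.0, 0.0, 1.0])  # blue background

# alpha = 1 is pure foreground, alpha = 0 pure background, in between a mixed pixel
for alpha in (1.0, 0.5, 0.0):
    pixel = alpha * fg + (1 - alpha) * bg
    print(alpha, pixel.tolist())
```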