Hi, All.

I have trained a classification network that classifies into 10 classes.

After saving the model, I loaded it again and attached another FC layer (10-input, 1-output).

Why are there 10 outputs?

I need one output.

What’s wrong to me?

Hi, All.

I have trained a classification network that classifies into 10 classes.

After saving the model, I loaded it again and attached another FC layer (10-input, 1-output).

Why are there 10 outputs?

I need one output.

What’s wrong to me?

Hi,

Could you give more details on what is the forward method of your model and what is the input x?

Hi, @albanD

Input X is images:

torch.Size([1, 3, 224, 224])

my trained model is the CNN network:

ResNet(

(conv1): Conv2d(3, 64, kernel_size=(7, 7), stride=(2, 2), padding=(3, 3), bias=False)

(bn1): BatchNorm2d(64, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)

(relu): ReLU(inplace)

(maxpool): MaxPool2d(kernel_size=3, stride=2, padding=1, dilation=1, ceil_mode=False)

(layer1): Sequential(

(0): BasicBlock(

(conv1): Conv2d(64, 64, kernel_size=(3, 3), stride=(1, 1), padding=(1, 1), bias=False)

(bn1): BatchNorm2d(64, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)

(relu): ReLU(inplace)

(conv2): Conv2d(64, 64, kernel_size=(3, 3), stride=(1, 1), padding=(1, 1), bias=False)

(bn2): BatchNorm2d(64, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)

)

(1): BasicBlock(

(conv1): Conv2d(64, 64, kernel_size=(3, 3), stride=(1, 1), padding=(1, 1), bias=False)

(bn1): BatchNorm2d(64, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)

(relu): ReLU(inplace)

(conv2): Conv2d(64, 64, kernel_size=(3, 3), stride=(1, 1), padding=(1, 1), bias=False)

(bn2): BatchNorm2d(64, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)

)

)

(layer2): Sequential(

(0): BasicBlock(

(conv1): Conv2d(64, 128, kernel_size=(3, 3), stride=(2, 2), padding=(1, 1), bias=False)

(bn1): BatchNorm2d(128, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)

(relu): ReLU(inplace)

(conv2): Conv2d(128, 128, kernel_size=(3, 3), stride=(1, 1), padding=(1, 1), bias=False)

(bn2): BatchNorm2d(128, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)

(downsample): Sequential(

(0): Conv2d(64, 128, kernel_size=(1, 1), stride=(2, 2), bias=False)

(1): BatchNorm2d(128, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)

)

)

(1): BasicBlock(

(conv1): Conv2d(128, 128, kernel_size=(3, 3), stride=(1, 1), padding=(1, 1), bias=False)

(bn1): BatchNorm2d(128, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)

(relu): ReLU(inplace)

(conv2): Conv2d(128, 128, kernel_size=(3, 3), stride=(1, 1), padding=(1, 1), bias=False)

(bn2): BatchNorm2d(128, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)

)

)

(layer3): Sequential(

(0): BasicBlock(

(conv1): Conv2d(128, 256, kernel_size=(3, 3), stride=(2, 2), padding=(1, 1), bias=False)

(bn1): BatchNorm2d(256, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)

(relu): ReLU(inplace)

(conv2): Conv2d(256, 256, kernel_size=(3, 3), stride=(1, 1), padding=(1, 1), bias=False)

(bn2): BatchNorm2d(256, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)

(downsample): Sequential(

(0): Conv2d(128, 256, kernel_size=(1, 1), stride=(2, 2), bias=False)

(1): BatchNorm2d(256, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)

)

)

(1): BasicBlock(

(conv1): Conv2d(256, 256, kernel_size=(3, 3), stride=(1, 1), padding=(1, 1), bias=False)

(bn1): BatchNorm2d(256, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)

(relu): ReLU(inplace)

(conv2): Conv2d(256, 256, kernel_size=(3, 3), stride=(1, 1), padding=(1, 1), bias=False)

(bn2): BatchNorm2d(256, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)

)

)

(layer4): Sequential(

(0): BasicBlock(

(conv1): Conv2d(256, 512, kernel_size=(3, 3), stride=(2, 2), padding=(1, 1), bias=False)

(bn1): BatchNorm2d(512, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)

(relu): ReLU(inplace)

(conv2): Conv2d(512, 512, kernel_size=(3, 3), stride=(1, 1), padding=(1, 1), bias=False)

(bn2): BatchNorm2d(512, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)

(downsample): Sequential(

(0): Conv2d(256, 512, kernel_size=(1, 1), stride=(2, 2), bias=False)

(1): BatchNorm2d(512, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)

)

)

(1): BasicBlock(

(conv1): Conv2d(512, 512, kernel_size=(3, 3), stride=(1, 1), padding=(1, 1), bias=False)

(bn1): BatchNorm2d(512, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)

(relu): ReLU(inplace)

(conv2): Conv2d(512, 512, kernel_size=(3, 3), stride=(1, 1), padding=(1, 1), bias=False)

(bn2): BatchNorm2d(512, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)

)

)

(avgpool): AvgPool2d(kernel_size=7, stride=1, padding=0)

(fc): Linear(in_features=512, out_features=10, bias=True)

(fc2): Linear(in_features=10, out_features=1, bias=True)

)

Thanks.

Thanks,

What about the forward method? How do you use fc and fc2? Do you do anything with the output before returning it?

Hi, @albanD,

My Goal is that Converting Classification network to Regression network.

So, I already train the Classification CNN using Fine Tune, and then Removing Classification header(FC) and adding Regression header(1-layer FC, 10-to-1).

But It’s not easy… Help me.

Thanks in advance~

Hi,

You need to change the forward method of your network. So that it uses the new fully connected layer.

What you printed above is just the list of all the parameters of your Module. To use these parameters, you need to make sure they are used in the forward function of this module.

For example, here is the original forward method for a resnet as it is defined in torchvision. To use a new layer, you would need to subclass this one and change the forward method to use what you want to use.

Hi,

You need to change the forward method of your network. So that it uses the new fully connected layer.

How Can ?

What you printed above is just the list of all the parameters of your Module.

To use these parameters, you need to make sure they are used in the forward function of this module.

For example, here 1 is the original forward method for a resnet as it is defined in torchvision. To use a new layer, you would need to subclass this one and change the forward method to use what you want to use.

For example?

Thanks.

Here is a script that does it:

import torch

import torchvision

from torchvision.models.resnet import BasicBlock

class MyResNet(torchvision.models.ResNet):

def forward(self, x):

x = self.conv1(x)

x = self.bn1(x)

x = self.relu(x)

x = self.maxpool(x)

x = self.layer1(x)

x = self.layer2(x)

x = self.layer3(x)

x = self.layer4(x)

x = self.avgpool(x)

x = x.view(x.size(0), -1)

x = self.fc(x)

# We add to the original net

x = self.fc2(x)

return x

my_resnet = MyResNet(BasicBlock, [2, 2, 2, 2], num_classes=10)

my_resnet.fc2 = torch.nn.Linear(10, 1)

inp = torch.rand(1, 3, 224, 224)

out = my_resnet(inp)

print(out.size())

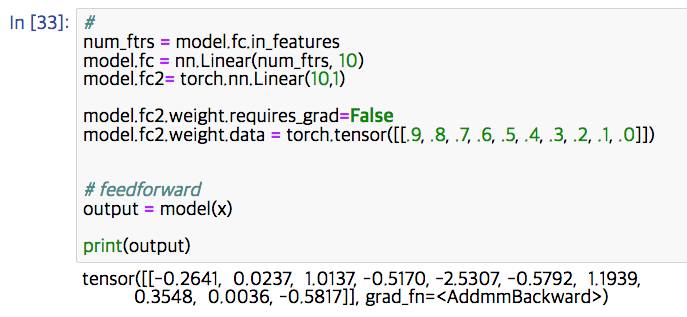

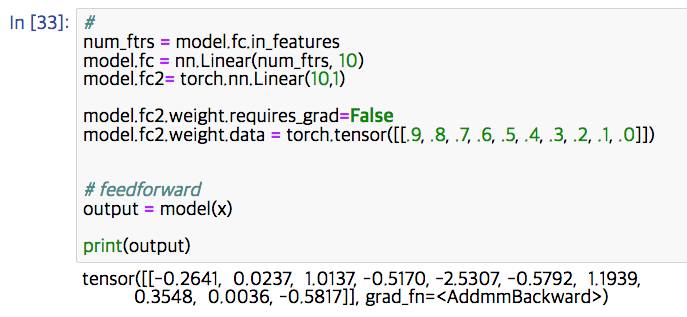

Thanks, @albanD

I want to fix the final fc layer’s weights with my 10 values (0.0 ~0.9).

How Can ?

from @bemorept

then you can fill in the weights and bias of the fc2 layer and disable gradients for them:

my_resnet.fc2 = torch.nn.Linear(10, 1)

my_resnet.fc2.weight.requires_grad = False

my_resnet.fc2.bias.requires_grad = False

my_resnet.fc2.weight.copy_(your_custom_weights)

my_resnet.fc2.bias.copy_(your_custom_bias)

# If you don't have bias you can do my_resnet.fc2.bias.fill_(0)