I am wondering how I can test a trained semantic segmentation model and visualise the predicted mask for a test image. There is an example for a classification problem in PyTorch, but I couldn't find any obvious example for segmentation.

I found this page that tests the network, but it's for a classification problem.

I adapted it for segmentation as shown below, but I'm not sure whether I'm doing it right:

model.eval()
total = 0
correct = 0
count = 0

# Iterate through the test dataset
for data in (test_loader):
    t_image, mask = data
    t_image, mask = t_image.to(device), mask.to(device)

    with torch.no_grad():
        outputs = model.forward(t_image)
        ps = torch.exp(outputs)
        _, predicted = torch.max(outputs.data, 1)  # Find the class index with the maximum value.

    # We can use the PyTorch.eq() function to do this, which compares the values in two tensors and if they match, returns a 1. If they don’t match, it returns a 0
    # By summing the output of the .eq() function, we get a count of the number of times the neural network has produced a correct output,
    # and we take an accumulating sum of these correct predictions so that we can determine the overall accuracy of the network on our test data set.
    total += mask.size(0)
    correct += predicted.eq(mask.data).sum().item()
    count += 1

print("Accuracy of network on test images is ... {:.4f}....count: {}".format(100*correct/total, count ))
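One thing worth checking in the loop above: `mask.size(0)` is the batch size, whereas `predicted.eq(mask).sum()` counts matching pixels, so the printed ratio is not a per-pixel percentage. Below is a minimal sketch of pixel accuracy, assuming the model returns scores shaped `(N, C, H, W)` and the masks are `(N, H, W)` tensors of integer class ids. `pixel_accuracy` is a hypothetical helper name, not part of PyTorch.

```python
import torch

def pixel_accuracy(logits, mask):
    """Return (correct_pixels, total_pixels) for one batch.

    Assumed shapes: logits (N, C, H, W), mask (N, H, W) with integer class ids.
    """
    predicted = logits.argmax(dim=1)             # (N, H, W): best class per pixel
    correct = (predicted == mask).sum().item()   # number of matching pixels
    total = mask.numel()                         # count pixels, not images
    return correct, total

# Tiny hand-made batch: 1 image, 2 classes, 2x2 pixels.
logits = torch.tensor([[[[2.0, 0.0], [0.0, 2.0]],
                        [[0.0, 1.0], [3.0, 0.0]]]])
mask = torch.tensor([[[0, 1], [1, 1]]])

correct, total = pixel_accuracy(logits, mask)
accuracy_percent = 100.0 * correct / total
print("Pixel accuracy: {:.1f}%".format(accuracy_percent))
```

In a test loop, the two returned values would be accumulated over all batches before dividing. Pixel accuracy can look high when the background dominates, so a per-class metric such as IoU may be a useful complement.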

My first question is: why does it call only `forward` here, i.e. `outputs = model.forward(t_image)`?
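On the `forward` question, a small sketch may help. Calling the module directly, `model(x)`, goes through `nn.Module.__call__`, which runs any registered hooks and then calls `forward`. Calling `model.forward(x)` skips the hooks but computes the same output. The `nn.Conv2d` layer here is only a stand-in for a real segmentation network.

```python
import torch
from torch import nn

# Stand-in for a segmentation network: 3 input channels, 2 classes.
model = nn.Conv2d(3, 2, kernel_size=1)
model.eval()

hook_calls = []
# Forward hooks fire only when the module is invoked via __call__.
handle = model.register_forward_hook(
    lambda module, inputs, output: hook_calls.append(tuple(output.shape))
)

x = torch.randn(1, 3, 4, 4)
with torch.no_grad():
    out_call = model(x)              # runs hooks, then forward
    out_forward = model.forward(x)   # runs forward only, hooks are skipped

handle.remove()
same_values = torch.equal(out_call, out_forward)
print(len(hook_calls), same_values)
```

So `model.forward(t_image)` works for plain inference, but `model(t_image)` is the usual form because it keeps hooks working.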

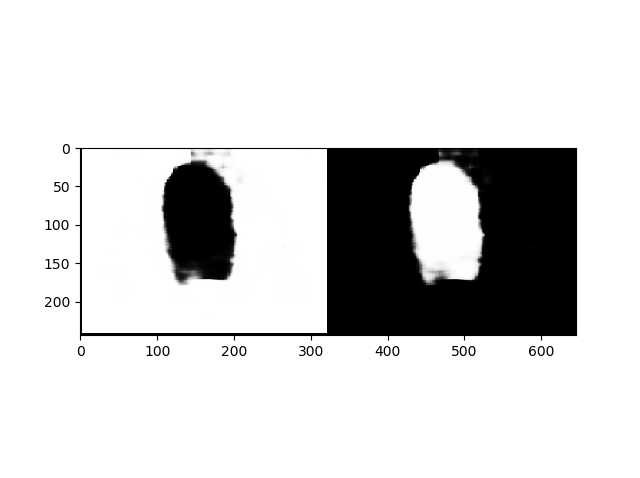

My second question is: how can I visualise the output of the test? For example, how can I display a test image and draw the mask predicted for it by my trained model? Is there any example for this? Thank you in advance.
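For the visualisation part, one possible approach is to take the per-pixel argmax of the model output, map each class id to a colour, and blend that colour mask with the input image. The sketch below assumes three classes, an image tensor shaped `(3, H, W)` with values in `[0, 1]`, and model scores shaped `(1, C, H, W)`. `PALETTE`, `colorize` and `overlay` are hypothetical names chosen for illustration.

```python
import torch

# Hypothetical palette: one RGB colour per class id (0 = background).
PALETTE = torch.tensor([[0, 0, 0], [255, 0, 0], [0, 255, 0]], dtype=torch.uint8)

def colorize(class_mask):
    """Map an (H, W) tensor of class ids to an (H, W, 3) uint8 RGB image."""
    return PALETTE[class_mask.long()]

def overlay(image, class_mask, alpha=0.5):
    """Blend a (3, H, W) float image in [0, 1] with the coloured mask."""
    rgb = image.permute(1, 2, 0)                       # (H, W, 3)
    color = colorize(class_mask).float() / 255.0
    blended = (1 - alpha) * rgb + alpha * color
    return (blended.clamp(0, 1) * 255).to(torch.uint8)

# Hand-made scores standing in for model(t_image): 1 image, 3 classes, 2x2 pixels.
logits = torch.tensor([[[[0.1, 0.1], [0.1, 5.0]],
                        [[2.0, 0.0], [0.0, 0.0]],
                        [[0.0, 3.0], [0.0, 0.0]]]])
image = torch.ones(3, 2, 2)                            # plain white test image

predicted = logits.argmax(dim=1)[0]                    # (H, W) class ids
color_mask = colorize(predicted)
blended = overlay(image, predicted)
```

Both `color_mask` and `blended` are `(H, W, 3)` uint8 tensors, so they can be displayed with, for example, matplotlib's `plt.imshow(blended.numpy())` (matplotlib is an assumption here, it is not used in the original post). Tensors on the GPU need `.cpu()` first, and if the test images were normalised for the network, the normalisation should be undone before blending.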