Hello team, here is my code.

I’m using a JIT-generated model with LibTorch in C/C++ on Ubuntu 18.04 with CUDA 10.2.

I get the correct result from my first call, result = mE.forward(x);

torch::Tensor tensor_image = ...

torch::IValue result; // it is standard return of forward() in JIT traced model

std::vector<torch::jit::IValue> x;

x.push_back(tensor_image);

result = mE.forward(x);

std::cout << sp_and_gl_output_CUDA << std::endl;

std::vector<torch::IValue> in_to_G;

in_to_G.push_back(result);

img_tensor = mG.forward(in_to_G);

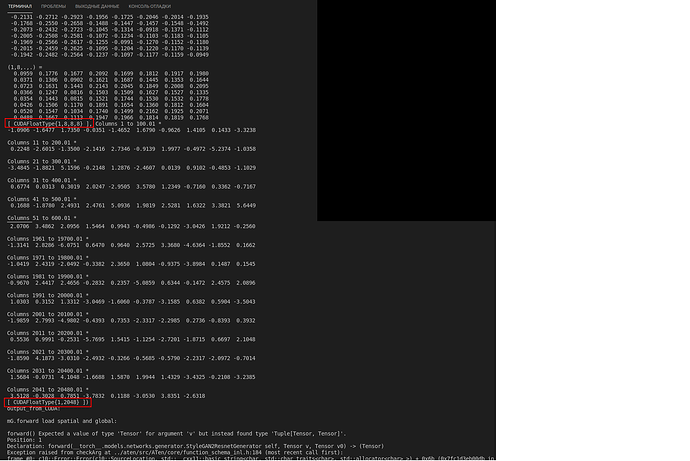

The image shows the printed result variable returned by the forward() method.

std::cout << sp_and_gl_output_CUDA << std::endl;

The result contains two Tensors, but I can’t pass it to the next forward() in the right form.

The result of execution:

mG.forward load spatial and global:

forward() Expected a value of type 'Tensor' for argument 'v' but instead found type 'Tuple[Tensor, Tensor]'.

Position: 1

Declaration: forward(__torch__.models.networks.generator.StyleGAN2ResnetGenerator self, Tensor v, Tensor v0) -> (Tensor)

Exception raised from checkArg at ../aten/src/ATen/core/function_schema_inl.h:184 (most recent call first):

frame #0: c10::Error::Error(c10::SourceLocation, std::__cxx11::basic_string<char, std::char_traits<char>, std::allocator<char> >) + 0x6b (0x7f1c5d0290db in /home/interceptor/Документы/Git_Medium_repo/Binary_search_engine_CUDA/torch_to_cpp/libtorch/lib/libc10.so)

frame #1: c10::detail::torchCheckFail(char const*, char const*, unsigned int, std::__cxx11::basic_string<char, std::char_traits<char>, std::allocator<char> > const&) + 0xce (0x7f1c5d024d2e in /home/interceptor/Документы/Git_Medium_repo/Binary_search_engine_CUDA/torch_to_cpp/libtorch/lib/libc10.so)

frame #2: <unknown function> + 0x128d542 (0x7f1c473af542 in /home/interceptor/Документы/Git_Medium_repo/Binary_search_engine_CUDA/torch_to_cpp/libtorch/lib/libtorch_cpu.so)

frame #3: <unknown function> + 0x12918c1 (0x7f1c473b38c1 in /home/interceptor/Документы/Git_Medium_repo/Binary_search_engine_CUDA/torch_to_cpp/libtorch/lib/libtorch_cpu.so)

frame #4: torch::jit::GraphFunction::operator()(std::vector<c10::IValue, std::allocator<c10::IValue> >, std::unordered_map<std::__cxx11::basic_string<char, std::char_traits<char>, std::allocator<char> >, c10::IValue, std::hash<std::__cxx11::basic_string<char, std::char_traits<char>, std::allocator<char> > >, std::equal_to<std::__cxx11::basic_string<char, std::char_traits<char>, std::allocator<char> > >, std::allocator<std::pair<std::__cxx11::basic_string<char, std::char_traits<char>, std::allocator<char> > const, c10::IValue> > > const&) + 0x2d (0x7f1c49c97b2d in /home/interceptor/Документы/Git_Medium_repo/Binary_search_engine_CUDA/torch_to_cpp/libtorch/lib/libtorch_cpu.so)

frame #5: torch::jit::Method::operator()(std::vector<c10::IValue, std::allocator<c10::IValue> >, std::unordered_map<std::__cxx11::basic_string<char, std::char_traits<char>, std::allocator<char> >, c10::IValue, std::hash<std::__cxx11::basic_string<char, std::char_traits<char>, std::allocator<char> > >, std::equal_to<std::__cxx11::basic_string<char, std::char_traits<char>, std::allocator<char> > >, std::allocator<std::pair<std::__cxx11::basic_string<char, std::char_traits<char>, std::allocator<char> > const, c10::IValue> > > const&) const + 0x175 (0x7f1c49ca7235 in /home/interceptor/Документы/Git_Medium_repo/Binary_search_engine_CUDA/torch_to_cpp/libtorch/lib/libtorch_cpu.so)

frame #6: <unknown function> + 0x6266 (0x564143038266 in ./particle_cuda_test)

frame #7: <unknown function> + 0x47af (0x5641430367af in ./particle_cuda_test)

frame #8: __libc_start_main + 0xe7 (0x7f1bf9682bf7 in /lib/x86_64-linux-gnu/libc.so.6)

frame #9: <unknown function> + 0x4daa (0x564143036daa in ./particle_cuda_test)

Main module: torch_and_cuda_call.cpp completed.

ok!