Hi, everyone!

I’m trying to optimize a computation graph in Python with Glow. I have both CPU and GPU devices, and I would like to do three things:

- get the op schedule that Glow creates, and change it;

- see which kernels Glow fuses, and control that fusion;

- see where each operation runs (on CPU or on GPU), and control that placement.

This is what I tried to do. I took a simple computation graph from https://github.com/pytorch/glow/blob/master/torch_glow/examples/basic_example.py :

    def foo(a, b):
        c = a.mul(b)
        a = c.mul(c)
        a = c.mul(a)
        d = c.div(a)
        return d

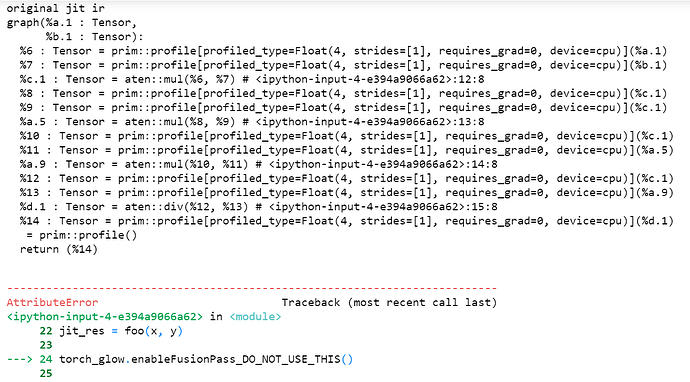

As far as I understand, the following line gives me the original schedule and tensor placement of this graph (without Glow), as in the screenshot above:

foo.graph_for(x, y)
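For reference, here is a minimal sketch of how the operators could be listed in the order they appear in the TorchScript graph. It assumes foo has been compiled with torch.jit.script (which the use of graph_for implies); op_kinds is a hypothetical helper name, not part of PyTorch or Glow, and it only reads the plain TorchScript graph, not anything Glow produces.

```python
def op_kinds(scripted_fn):
    """Return the operator names of a TorchScript function in graph order.

    Assumes `scripted_fn` exposes `.graph.nodes()` and that each node has
    `.kind()`, as TorchScript functions do.
    """
    return [node.kind() for node in scripted_fn.graph.nodes()]
```

With PyTorch installed, op_kinds(foo) on the scripted foo should list operator names such as aten::mul and aten::div. The exact contents may vary between PyTorch versions.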

But when I try to enable Glow (as far as I understand, this is done by calling torch_glow.enableFusionPass_DO_NOT_USE_THIS()), I get this error:

module 'glow.torch_glow' has no attribute 'enableFusionPass_DO_NOT_USE_THIS'
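One way to investigate an error like this is to list the attributes the installed module actually exposes and look for similarly named ones. The sketch below uses only the standard library; find_attributes is a hypothetical helper name, and what it returns for torch_glow depends entirely on the installed build.

```python
import importlib


def find_attributes(module_name, fragment):
    """Return the attribute names of a module that contain `fragment`.

    The match is case-insensitive; the module is imported by name.
    """
    module = importlib.import_module(module_name)
    needle = fragment.lower()
    return sorted(name for name in dir(module) if needle in name.lower())
```

For example, find_attributes("glow.torch_glow", "fusion") would show whether the installed version has a fusion-related function under a different name.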

Please tell me how I can find out at least something about any of the three points above.

I would be grateful for any advice because I’m new to Glow:)