Hi,

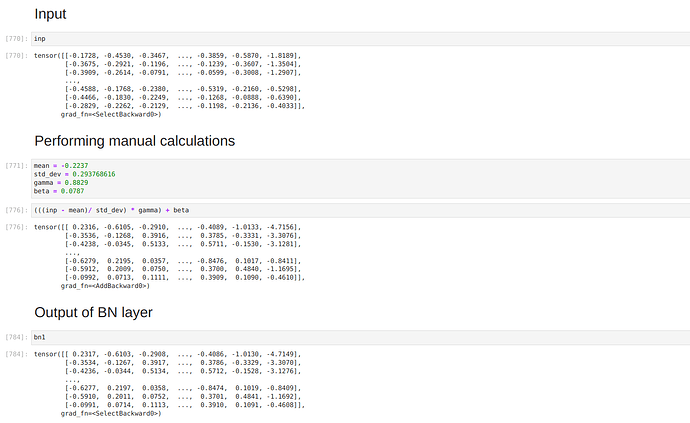

How can I extract the running mean, running variance, gamma (weight), and beta (bias) of each BatchNorm2d layer from a saved CNN model?

Also, how can I use these values at inference time?

This is my architecture:

| Layer (type) | Output Shape | Param # |
|---|---|---:|
| Conv2d-1 | [-1, 32, 32, 32] | 896 |
| BatchNorm2d-2 | [-1, 32, 32, 32] | 64 |
| ReLU-3 | [-1, 32, 32, 32] | 0 |
| Dropout-4 | [-1, 32, 32, 32] | 0 |
| Conv2d-5 | [-1, 64, 32, 32] | 18,496 |
| BatchNorm2d-6 | [-1, 64, 32, 32] | 128 |
| ReLU-7 | [-1, 64, 32, 32] | 0 |
| AvgPool2d-8 | [-1, 64, 16, 16] | 0 |
| Conv2d-9 | [-1, 128, 16, 16] | 73,856 |
| BatchNorm2d-10 | [-1, 128, 16, 16] | 256 |
| ReLU-11 | [-1, 128, 16, 16] | 0 |
| Dropout-12 | [-1, 128, 16, 16] | 0 |
| Conv2d-13 | [-1, 128, 16, 16] | 147,584 |
| BatchNorm2d-14 | [-1, 128, 16, 16] | 256 |
| ReLU-15 | [-1, 128, 16, 16] | 0 |
| AvgPool2d-16 | [-1, 128, 8, 8] | 0 |
| Conv2d-17 | [-1, 256, 8, 8] | 295,168 |
| BatchNorm2d-18 | [-1, 256, 8, 8] | 512 |
| ReLU-19 | [-1, 256, 8, 8] | 0 |
| Dropout-20 | [-1, 256, 8, 8] | 0 |
| Conv2d-21 | [-1, 256, 8, 8] | 590,080 |
| BatchNorm2d-22 | [-1, 256, 8, 8] | 512 |
| ReLU-23 | [-1, 256, 8, 8] | 0 |
| AvgPool2d-24 | [-1, 256, 4, 4] | 0 |
| Flatten-25 | [-1, 4096] | 0 |
| Linear-26 | [-1, 32] | 131,104 |
| ReLU-27 | [-1, 32] | 0 |
| Linear-28 | [-1, 10] | 330 |

Total params: 1,259,242

Trainable params: 1,259,242

Non-trainable params: 0

----------------------------------------------------------------

Input size (MB): 0.01

Forward/backward pass size (MB): 5.38

Params size (MB): 4.80

Estimated Total Size (MB): 10.19

----------------------------------------------------------------
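
For reference, here is a minimal sketch of one way to do this. It assumes the model is a standard PyTorch `nn.Module` that uses `nn.BatchNorm2d`. The small `nn.Sequential` below is only a hypothetical stand-in for the first block of the network above, and the helper names `collect_bn_params` and `manual_bn` are made up for illustration. In `nn.BatchNorm2d`, the mean and variance used at inference are stored in the buffers `running_mean` and `running_var`, gamma is `weight`, and beta is `bias`. In eval mode the layer computes `gamma * (x - mean) / sqrt(var + eps) + beta` per channel.

```python
import torch
import torch.nn as nn

# Hypothetical stand-in for the first Conv2d -> BatchNorm2d -> ReLU block.
# With a real checkpoint you would build your own model class and call
# model.load_state_dict(...) on the saved state dict instead.
model = nn.Sequential(
    nn.Conv2d(3, 32, kernel_size=3, padding=1),
    nn.BatchNorm2d(32),
    nn.ReLU(),
)


def collect_bn_params(model):
    """Return {layer_name: dict of mean, var, gamma, beta, eps} for every BatchNorm2d."""
    params = {}
    for name, module in model.named_modules():
        if isinstance(module, nn.BatchNorm2d):
            params[name] = {
                "mean": module.running_mean.detach().clone(),
                "var": module.running_var.detach().clone(),
                "gamma": module.weight.detach().clone(),
                "beta": module.bias.detach().clone(),
                "eps": module.eps,
            }
    return params


def manual_bn(x, p):
    """Apply inference-mode batch norm to an (N, C, H, W) tensor by hand."""
    shape = (1, -1, 1, 1)  # broadcast the per-channel vectors over N, H, W
    scale = p["gamma"].view(shape) / torch.sqrt(p["var"].view(shape) + p["eps"])
    return (x - p["mean"].view(shape)) * scale + p["beta"].view(shape)


# Run a few training-mode forward passes so the running statistics are
# not just their initial values (this stands in for real training).
torch.manual_seed(0)
model.train()
with torch.no_grad():
    for _ in range(3):
        model(torch.randn(8, 3, 32, 32))

model.eval()  # eval mode makes BatchNorm2d use the running statistics
bn_params = collect_bn_params(model)

x = torch.randn(4, 3, 32, 32)
with torch.no_grad():
    conv_out = model[0](x)
    expected = model[1](conv_out)                 # PyTorch's own BatchNorm2d
    manual = manual_bn(conv_out, bn_params["1"])  # same computation by hand

print(torch.allclose(expected, manual, atol=1e-5))
```

The same four tensors also appear in `model.state_dict()` under keys ending in `running_mean`, `running_var`, `weight`, and `bias`. This also explains the counts in the summary: BatchNorm2d-2 shows 64 parameters because it has 32 gammas and 32 betas, while the running mean and variance are buffers rather than parameters and are not counted.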