Hi,

Each input sample is a 2D tensor of 50x16 (i.e. 50 vectors, each of length 16).

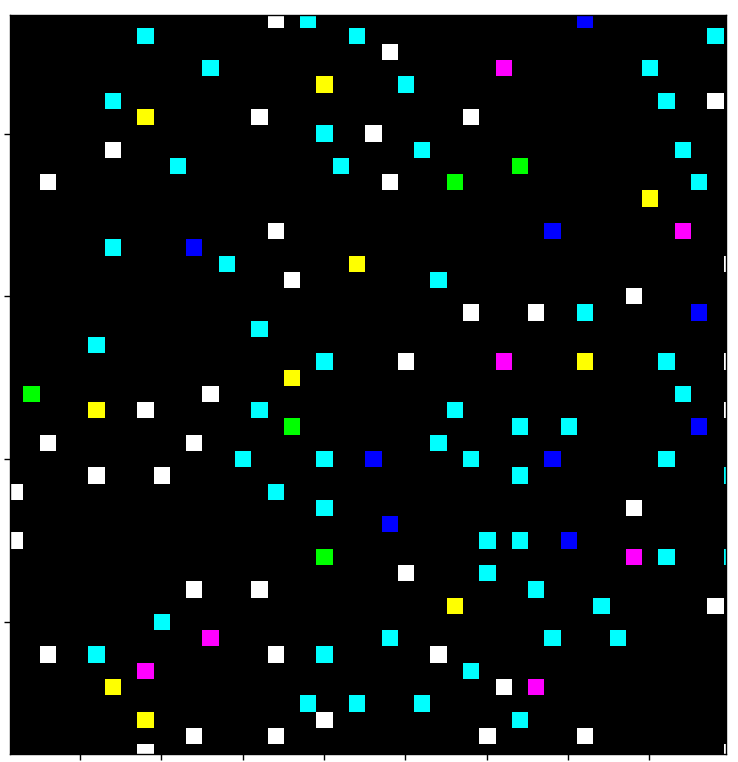

In the forward pass, a series of convolutional layers contracts the width of these inputs (e.g. from 16 to 3). The resulting shape is 50x3, where the 3 elements in each of the 50 vectors are RGB values.
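To make the shapes concrete, here is a minimal sketch of that contraction. It assumes a (1, 16) kernel followed by 1x1 convolutions, with a hidden width of 100 channels as in my pseudo code further down; the variable names `contract` and `rgb` are just for illustration.

```python
import torch

# (batch_size, channels, num_vectors, vector_length)
batch = torch.randn(2, 1, 50, 16)

contract = torch.nn.Sequential(
    torch.nn.Conv2d(1, 100, kernel_size=(1, 16)),  # collapses the width 16 -> 1
    torch.nn.ReLU(),
    torch.nn.Conv2d(100, 100, kernel_size=1),      # 1x1 conv, shape unchanged
    torch.nn.ReLU(),
    torch.nn.Conv2d(100, 3, kernel_size=1),        # 3 output channels (RGB)
    torch.nn.ReLU(),
)

out = contract(batch)                     # (2, 3, 50, 1)
rgb = out.permute(0, 3, 2, 1).squeeze(1)  # (2, 50, 3): 50 RGB vectors per sample
print(out.shape, rgb.shape)
```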

However, the part I am seriously questioning comes next in my forward pass: I take these vectors and embed them into a 500x500x3 tensor that is initially full of zeros (like an image). Will autograd still be able to track the gradients through this step?

Afterwards I finally want to apply some more standard convolutions on this new image tensor to classify it.

My question is: if I slice vectors out of the first input and embed them into this new spatial arrangement, will PyTorch be able to track the gradients through the slicing and reassignment?
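A minimal sanity check for this might look like the sketch below. It assumes a toy 5x5x3 canvas and hand-picked integer coordinates (both hypothetical, chosen only to keep the example small), writes vectors into a zero tensor by indexed assignment, and then inspects `.grad` on the source vectors.

```python
import torch

# Toy stand-in for the conv output: 4 vectors of 3 "RGB" values
vectors = torch.randn(4, 3, requires_grad=True)

# Hypothetical integer pixel coordinates for each vector (all distinct)
rows = torch.tensor([0, 1, 2, 4])
cols = torch.tensor([3, 1, 0, 4])

# Zero canvas, analogous to the 500x500x3 tensor but tiny
canvas = torch.zeros(5, 5, 3)

# Indexed assignment: autograd records this write into `canvas`
canvas[rows, cols] = vectors

# Any scalar loss on the canvas will do for the check
loss = (canvas * 2.0).sum()
loss.backward()

# Every written element contributes 2.0 to the loss
print(vectors.grad)
```

If `vectors.grad` is populated, the gradient has flowed back through the assignment. The integer coordinates themselves receive no gradient, since indexing is not differentiable with respect to the index.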

If you know the correct way to code this, please do let me know.

## Some Pseudo Code to give a flavour of what I'm doing

    import torch

    data = torch.randn((2, 1, 50, 16), requires_grad=True) # (batch_size, channels, num_vectors, vector_length) || (n, 50, 16)

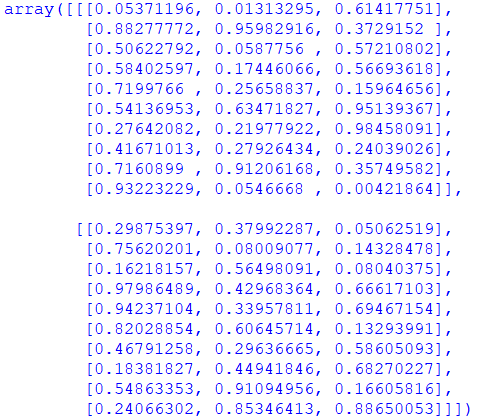

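    # centres are only used as pixel indices, so they need no gradient;
    # torch.rand gives values in [0, 1)
    centres = torch.rand((2, 1, 50, 2)) # (batch_size, channels, num_vectors, centres) || (n, 50, 2)

    # scale centre coordinate values up from range of [0,1] to [0, 500]
    centres = torch.mul(centres, 500)

    # concatenate along the last (width) dimension: (2, 1, 50, 18)
    input = torch.cat((data, centres), 3)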

    class Model(torch.nn.Module):
        def __init__(self):
            super().__init__()
            # Contract each 16-element vector down to 3 values (RGB)
            self.layer1 = torch.nn.Conv2d(in_channels=1, out_channels=100, kernel_size=(1, 16), stride=1, padding=0)
            self.layer2 = torch.nn.Conv2d(in_channels=100, out_channels=100, kernel_size=1, stride=1, padding=0)
            self.layer3 = torch.nn.Conv2d(in_channels=100, out_channels=3, kernel_size=1, stride=1, padding=0)
            # Standard convolution applied to the 500x500 image
            self.layer4 = torch.nn.Sequential(
                torch.nn.Conv2d(in_channels=3, out_channels=100, kernel_size=2, stride=2, padding=2),
                torch.nn.ReLU(),
            )

        def forward(self, x):
            data = x[:, :, :, :16]     # (n, 1, 50, 16) || (batch_size, depth, height, width)
            centres = x[:, :, :, 16:]  # (n, 1, 50, 2)
            x = torch.nn.functional.relu(self.layer1(data))  # (n, 100, 50, 1)
            x = torch.nn.functional.relu(self.layer2(x))     # (n, 100, 50, 1)
            x = torch.nn.functional.relu(self.layer3(x))     # (n, 3, 50, 1)
            x = x.permute(0, 3, 2, 1)                        # (n, 1, 50, 3)

            # Peel apart the vectors in x, and embed them spatially in newly-defined zero matrices
            # Pixel indices must be integers inside [0, 499]; no gradient flows through them
            idx = centres.long().clamp(0, 499)
            spatial_matrices = []
            for batch_idx in range(x.size(0)):
                spatialMatrix = torch.zeros((1, 500, 500, 3), dtype=x.dtype, device=x.device)
                for vec_idx in range(x.size(2)):
                    x_coordinate = idx[batch_idx, 0, vec_idx, 0]
                    y_coordinate = idx[batch_idx, 0, vec_idx, 1]
                    spatialMatrix[0, y_coordinate, x_coordinate, :] = x[batch_idx, 0, vec_idx, :]
                spatial_matrices.append(spatialMatrix)
            spatial_matrices = torch.cat(spatial_matrices, dim=0)  # (n, 500, 500, 3)

            # Conv2d expects channels first: (n, 3, 500, 500)
            x = self.layer4(spatial_matrices.permute(0, 3, 1, 2))
            return x
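The double loop over batches and vectors could probably be replaced by a single advanced-indexing assignment. The sketch below is one possible way to do that, not a tested solution for my full model: the function name `embed_vectors` and its `size` parameter are hypothetical, and it assumes that no two vectors in a sample land on the same pixel (with duplicates, which value ends up in the image is not well defined).

```python
import torch

def embed_vectors(rgb, centres, size=500):
    """Scatter rgb (n, k, 3) into a zero image (n, 3, size, size) at centres (n, k, 2)."""
    n, k, _ = rgb.shape
    # Integer pixel indices, clamped so they stay inside the image
    idx = centres.long().clamp(0, size - 1)
    # Batch index for every vector, shape (n, k)
    batch_idx = torch.arange(n, device=rgb.device).unsqueeze(1).expand(n, k)
    image = torch.zeros(n, size, size, 3, dtype=rgb.dtype, device=rgb.device)
    # centres[..., 0] is the x (column) and centres[..., 1] the y (row) coordinate
    image[batch_idx, idx[..., 1], idx[..., 0]] = rgb
    # Channels first, as Conv2d expects
    return image.permute(0, 3, 1, 2)

rgb = torch.randn(2, 50, 3, requires_grad=True)  # stand-in for the conv output
centres = torch.rand(2, 50, 2) * 500             # coordinates scaled to [0, 500)
image = embed_vectors(rgb, centres)
image.sum().backward()
print(image.shape, rgb.grad.shape)
```

The result can be passed straight to a `Conv2d` with `in_channels=3`. As with the loop version, gradients reach `rgb` but not `centres`.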