Hi,

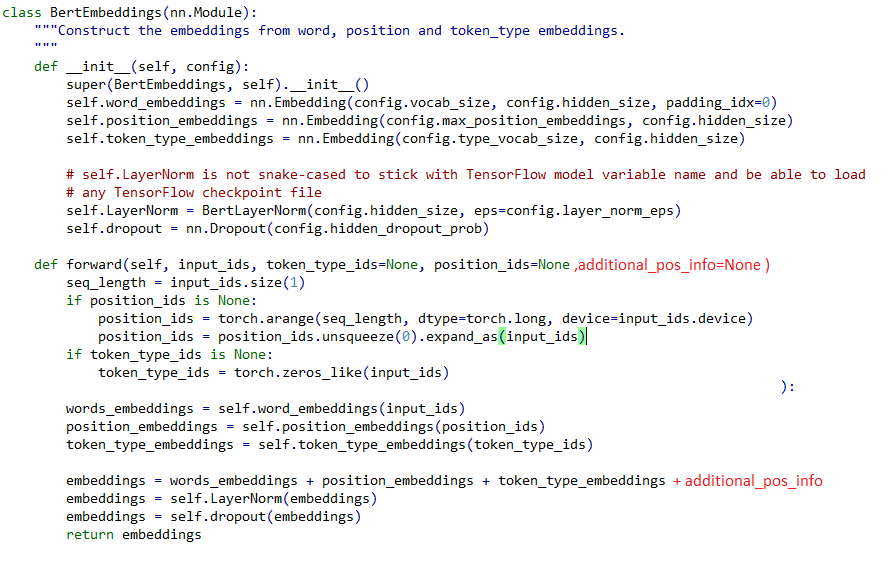

I want to modify the BERT embedding module (from the pytorch-transformers package) so that I can dynamically add a tensor on top of the token embedding. The tensor contains additional information that can't be stored in an embedding layer, because it doesn't depend on the token position or on the token_id/vocab. I think the attached picture describes what I'm after quite well (the red writing shows how I would like to modify it).
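To make the idea concrete, here is a rough sketch of what I have in mind, written in plain PyTorch rather than against the actual pytorch-transformers classes. The class name `EmbeddingsWithExtra`, the `extra` argument, and all sizes are made up for illustration; the sketch also assumes the extra tensor already has the shape `(batch, seq_len, hidden_size)`, so it can simply be summed with the other embeddings before the layer norm.

```python
import torch
import torch.nn as nn


class EmbeddingsWithExtra(nn.Module):
    """Hypothetical BERT-style embedding module that accepts an extra tensor."""

    def __init__(self, vocab_size=100, hidden_size=16, max_positions=32,
                 type_vocab_size=2, dropout=0.1):
        super().__init__()
        self.word_embeddings = nn.Embedding(vocab_size, hidden_size)
        self.position_embeddings = nn.Embedding(max_positions, hidden_size)
        self.token_type_embeddings = nn.Embedding(type_vocab_size, hidden_size)
        self.LayerNorm = nn.LayerNorm(hidden_size, eps=1e-12)
        self.dropout = nn.Dropout(dropout)

    def forward(self, input_ids, token_type_ids=None, extra=None):
        batch_size, seq_len = input_ids.shape
        # Position ids 0..seq_len-1, repeated for every sequence in the batch.
        position_ids = torch.arange(seq_len, device=input_ids.device)
        position_ids = position_ids.unsqueeze(0).expand(batch_size, seq_len)
        if token_type_ids is None:
            token_type_ids = torch.zeros_like(input_ids)

        embeddings = (self.word_embeddings(input_ids)
                      + self.position_embeddings(position_ids)
                      + self.token_type_embeddings(token_type_ids))
        if extra is not None:
            # Assumption: extra has shape (batch, seq_len, hidden_size).
            embeddings = embeddings + extra

        embeddings = self.LayerNorm(embeddings)
        return self.dropout(embeddings)


# Usage: eval() disables dropout so the output is deterministic.
module = EmbeddingsWithExtra().eval()
input_ids = torch.randint(0, 100, (2, 5))
extra = torch.randn(2, 5, 16)  # computed per batch, not looked up by token id
out = module(input_ids, extra=extra)
```

If the extra information has a different last dimension than the hidden size, I assume it would first need a projection (for example an `nn.Linear`) before it can be added this way.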