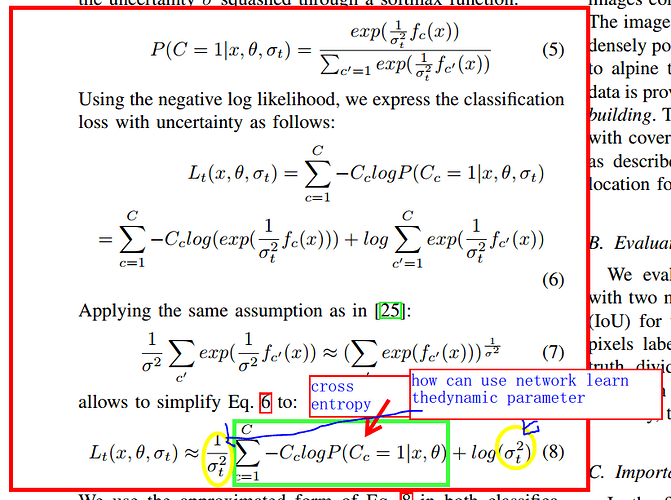

Hi any reason you can’t do gradient descent over the parameter as well? Say the optimizer over your network is opt, make another optimizer for the parameter in question:

opt_sig = torch.optim.SGD([sigma],lr=1e-1)

and whenever you do a optimizer step for your network, also do a step with opt_sig:

opt.zero_grad()

opt_sig.zero_grad()

loss.backward()

opt.step()

opt_sig.step()

It seems clear. Is this code snippet right?

myNet=myNet.to(device)

sig1=torch.tensor(1.0,requires_grad=True, device=device)

sig2=torch.tensor(1.0,requires_grad=True, device=device)

criterion=nn.CrossEntropyLoss()

optimizer=optim.Adam(myNet.parameters(),lr=lr,weight_decay = opt.weight_decay)

scheduler = optim.lr_scheduler.ReduceLROnPlateau(optimizer, 'min',patience=10)

opt_sig = torch.optim.SGD([sig1,sig2],lr=1e-4)

optimizer.zero_grad()

opt_sig.zero_grad()

outline,outvp=myNet(data)

lossline=(1.0/sig1.pow(2))*criterion(outline,mask)+torch.log(sig1.pow(2))

lossvp=(1.0/sig2.pow(2))*criterion(outvp,vpmask)+torch.log(sig2.pow(2))

loss=lossline+lossvp

loss.backward()

optimizer.step()

opt_sig.step()

Can update the model’s weight and the parameter sigma together with only one optimizer?

optimizer=optim.Adam([

{'params': myNet.parameters(), 'lr': lr},

{'params': [sig1,sig2], 'lr':1e-4}],

weight_decay = opt.weight_decay)

1 Like

Yes, this seems to verify your approach.