When using DistributedDataParallel, I train with mixed precision like this:

```python
from torch.cuda.amp import GradScaler, autocast

scaler = GradScaler()

for epoch in range(args.epochs):
    model.train()
    if num_distrib() > 1:
        # reshuffle the DistributedSampler differently each epoch
        train_loader.sampler.set_epoch(epoch)

    for i, (input, target) in enumerate(train_loader):
        with autocast():  # mixed precision
            output = model(input)
            loss = loss_fn(output, target)  # loss is computed inside autocast too

        model.zero_grad()
        scaler.scale(loss).backward()
        scaler.step(optimizer)
        scaler.update()

        reduced_loss = reduce_tensor(loss, args.gpus)
        losses.update(reduced_loss.item(), input.size(0))

        scheduler.step()
```

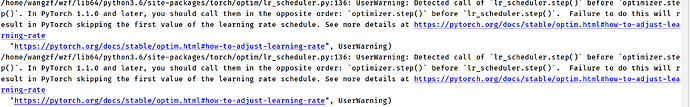

`scheduler.step()` is called after `scaler.step(optimizer)`, so why does this UserWarning appear?

How can I fix it?
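A possible explanation, assuming the warning in the screenshot is PyTorch's "Detected call of `lr_scheduler.step()` before `optimizer.step()`" message: `scaler.step(optimizer)` skips `optimizer.step()` whenever the unscaled gradients contain inf or NaN, which can happen in the first few iterations while the scale (default initial value 2**16) is still being calibrated. The scheduler then sees that the optimizer has not stepped yet and warns, even though the calls appear in the right order in the source. The sketch below is a toy, single-process loop; the `nn.Linear` model, `StepLR` scheduler, and `train_steps` helper are hypothetical stand-ins, not the code from the question. It compares `scaler.get_scale()` before and after `scaler.update()` and only calls `scheduler.step()` when the scale did not shrink, i.e. when the optimizer step actually ran. It uses the same `torch.cuda.amp` imports as the question and disables scaling on CPU-only machines.

```python
import torch
from torch import nn
from torch.cuda.amp import GradScaler, autocast


def train_steps(num_steps=5):
    """Hypothetical toy loop: step the LR scheduler only after a real optimizer step."""
    use_cuda = torch.cuda.is_available()
    device = "cuda" if use_cuda else "cpu"
    torch.manual_seed(0)

    model = nn.Linear(8, 2).to(device)  # stand-in model (assumption)
    optimizer = torch.optim.SGD(model.parameters(), lr=0.1)
    scheduler = torch.optim.lr_scheduler.StepLR(optimizer, step_size=1, gamma=0.9)
    # With scaling disabled (CPU-only), get_scale() always returns 1.0,
    # so no step is ever reported as skipped.
    scaler = GradScaler(enabled=use_cuda)
    loss_fn = nn.CrossEntropyLoss()

    scheduler_steps = 0
    for _ in range(num_steps):
        inputs = torch.randn(4, 8, device=device)
        targets = torch.randint(0, 2, (4,), device=device)

        with autocast(enabled=use_cuda):  # mixed precision only on CUDA
            loss = loss_fn(model(inputs), targets)

        optimizer.zero_grad()
        scaler.scale(loss).backward()

        scale_before = scaler.get_scale()
        scaler.step(optimizer)  # skips optimizer.step() if grads contain inf/NaN
        scaler.update()         # multiplies the scale by backoff_factor after a skip
        step_was_skipped = scaler.get_scale() < scale_before

        if not step_was_skipped:
            scheduler.step()
            scheduler_steps += 1
    return scheduler_steps


print(train_steps(5))
```

Skipping the scheduler on those iterations keeps the LR schedule aligned with the number of optimizer updates that actually happened. If a few skipped steps at the start don't matter for your schedule, the warning is generally harmless and can be ignored.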