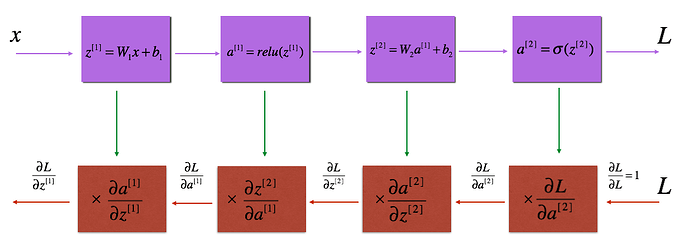

I’m trying to implement the Grad-CAM paper, which uses the gradient information flowing into the last convolutional layer of the CNN to assign importance values to each neuron for a particular decision of interest.

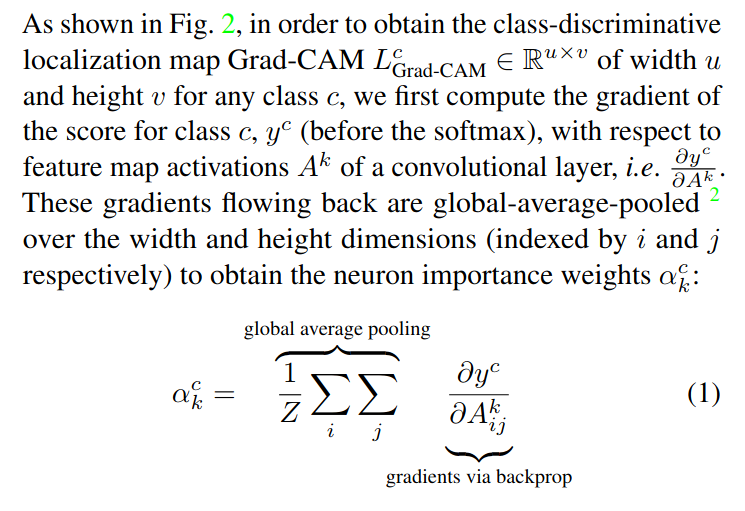

The paper says -

1. The first step in implementing Grad-CAM is obtaining the gradient of the class score with respect to the activation maps. How do I obtain it? From what I’ve read online, we need hooks to get it. How is the value obtained using hooks different from cnnlayer.weight.grad?
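To make the distinction in question 1 concrete, here is a minimal sketch, assuming a single convolution with made-up sizes and a summed output standing in for the class score. It only illustrates that `weight.grad` is a gradient with respect to the kernel parameters, while the quantity Grad-CAM needs is a gradient with respect to the layer's output tensor.

```python
import torch
import torch.nn as nn

torch.manual_seed(0)

# Hypothetical layer sizes, chosen only for illustration.
conv = nn.Conv2d(in_channels=1, out_channels=4, kernel_size=3)
x = torch.randn(1, 1, 28, 28)

activations = conv(x)       # feature maps, shape (1, 4, 26, 26)
activations.retain_grad()   # keep .grad on this non-leaf tensor after backward

score = activations.sum()   # stand-in for a class score
score.backward()

# Gradient w.r.t. the kernel parameters: same shape as conv.weight.
print(conv.weight.grad.shape)   # torch.Size([4, 1, 3, 3])
# Gradient w.r.t. the activation maps: same shape as the layer output.
print(activations.grad.shape)   # torch.Size([1, 4, 26, 26])
```

The two tensors have different shapes and mean different things, so `weight.grad` cannot stand in for the activation gradient. `retain_grad()` is used here only because it is the shortest way to inspect a non-leaf gradient; a tensor hook would observe the same values.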

2. Now, let’s say we need hooks. How do we actually use hooks here? The docs say that we can register hooks on either tensors or modules. How are they different? Does a module refer to a single layer such as nn.Conv2d(...), or does it refer to the entire class shown below?

    class CNNModel(nn.Module):
        def __init__(self):
            super(CNNModel, self).__init__()
            self.cnn1 = nn.Conv2d()
            self.cnn2 = nn.Conv2d()
            # cnn_out gives a (1,n) output where n is the number of classes.
            # We use cnn_out instead of a fc layer.
            self.cnn_out = nn.Conv2d()

        def forward(self, x):
            x = self.cnn1(x)
            x = self.cnn2(x)
            out = self.cnn_out(x)
            return out

That is, does "module" refer to CNNModel or to nn.Conv2d()?
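As a small check on question 2: any `nn.Module` subclass instance accepts module hooks, so both the whole model and an individual layer qualify. The sketch below registers the same forward hook on each. `TinyCNN`, its layer sizes, and `log_shape` are hypothetical names used only for this illustration.

```python
import torch
import torch.nn as nn

class TinyCNN(nn.Module):  # hypothetical stand-in for CNNModel
    def __init__(self):
        super().__init__()
        self.cnn1 = nn.Conv2d(1, 4, kernel_size=3)
        self.cnn2 = nn.Conv2d(4, 8, kernel_size=3)

    def forward(self, x):
        return self.cnn2(self.cnn1(x))

model = TinyCNN()
calls = []

def log_shape(module, inputs, output):
    # Forward hooks receive the module, its positional inputs, and its output.
    calls.append((type(module).__name__, tuple(output.shape)))

# The container model and a single layer are both nn.Module instances.
h_model = model.register_forward_hook(log_shape)
h_layer = model.cnn2.register_forward_hook(log_shape)

model(torch.randn(1, 1, 28, 28))

# Remove hooks once they are no longer needed.
h_model.remove()
h_layer.remove()
print(calls)
```

For Grad-CAM the hook would normally go on the specific layer whose activations are of interest (here `model.cnn2`), since a hook on the whole model only sees the final output.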

3. We can register a hook on a tensor with register_hook(). How do we use it? From what I’ve read online, we need to use hooks on a tensor to implement Grad-CAM. Let’s say my setup is the following:

I have a custom image classifier as follows. How do I use register_hook to obtain the gradients with respect to the output of self.cnn2? I’ve seen examples where register_hook is used inside the forward() method (Source). Sometimes people make a separate class out of it (Source (pg132)).

What is the correct way of doing it? Assume the trained model is stored in variable model as shown below.

    class CNNModel(nn.Module):
        def __init__(self):
            super(CNNModel, self).__init__()
            self.cnn1 = nn.Conv2d()
            self.cnn2 = nn.Conv2d()
            # cnn_out gives a (1,n) output where n is the number of classes.
            # We use cnn_out instead of a fc layer.
            self.cnn_out = nn.Conv2d()

        def forward(self, x):
            x = self.cnn1(x)
            x = self.cnn2(x)
            # we return a (1,10) output because we have 10 classes in MNIST.
            out = self.cnn_out(x)
            return out

    # Let's assume this model has been trained on MNIST (28 x 28 images)
    model = CNNModel()

    # Obtain prediction for a single image
    pred = model(single_test_img)

What do we do after we obtain pred? Can you please show that by completing the pseudo-code I’ve written? In particular, where do defining and running register_hook fit in when we try to obtain the gradient mentioned at the top?
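One possible way to complete the pseudo-code, offered as a sketch rather than the definitive approach: a forward hook on `model.cnn2` saves the activations and attaches a tensor hook with `register_hook`, which then receives the gradient during `backward()`. The layer sizes, the `flatten(1)` call, the random placeholder image, and the helper names (`saved`, `save_activation`, `save_gradient`) are all assumptions, since the original leaves `nn.Conv2d()` unspecified.

```python
import torch
import torch.nn as nn
import torch.nn.functional as F

class CNNModel(nn.Module):
    def __init__(self):
        super().__init__()
        # Assumed sizes for 28x28 MNIST input; not taken from the original post.
        self.cnn1 = nn.Conv2d(1, 8, kernel_size=3)       # -> (N, 8, 26, 26)
        self.cnn2 = nn.Conv2d(8, 16, kernel_size=3)      # -> (N, 16, 24, 24)
        self.cnn_out = nn.Conv2d(16, 10, kernel_size=24) # -> (N, 10, 1, 1)

    def forward(self, x):
        x = self.cnn1(x)
        x = self.cnn2(x)
        return self.cnn_out(x).flatten(1)                # -> (N, 10)

model = CNNModel().eval()
single_test_img = torch.randn(1, 1, 28, 28)  # placeholder for a real image

saved = {}

def save_gradient(grad):
    # Tensor hook: called during backward with d(score)/d(cnn2 output).
    saved["gradients"] = grad.detach()

def save_activation(module, inputs, output):
    # Forward hook: keep the activations and attach the tensor hook.
    saved["activations"] = output.detach()
    output.register_hook(save_gradient)

handle = model.cnn2.register_forward_hook(save_activation)

pred = model(single_test_img)
class_idx = pred.argmax(dim=1).item()

model.zero_grad()
pred[0, class_idx].backward()   # backpropagate the chosen class score only
handle.remove()

# Grad-CAM: average gradients over space to get one weight per channel,
# take the weighted sum of the activation maps, then apply ReLU.
weights = saved["gradients"].mean(dim=(2, 3), keepdim=True)  # (1, 16, 1, 1)
cam = F.relu((weights * saved["activations"]).sum(dim=1))    # (1, 24, 24)
print(cam.shape)
```

Registering the tensor hook from inside a forward hook avoids editing `forward()`. Calling `register_hook` on the intermediate tensor directly inside `forward()`, as some examples do, should capture the same gradient. The resulting map would typically be upsampled to the input size before being overlaid on the image.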

4. Can we use register_backward_hook() or register_forward_hook() somehow? What I mean is, can these two functions be used to get the same output as register_hook()?

Can you give a small example of how we use register_forward_hook() using the CNNModel class I defined above?

I’ve also seen the docs say that register_backward_hook() is buggy, so we are advised not to use it.
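For question 4, a minimal sketch comparing a module-level backward hook with a tensor hook. It assumes a PyTorch version that provides `register_full_backward_hook` (1.8 or later), which replaced the deprecated `register_backward_hook`. The layer sizes, the toy loss, and the `grads` dictionary are made up for illustration.

```python
import torch
import torch.nn as nn

torch.manual_seed(0)

conv = nn.Conv2d(4, 8, kernel_size=3)           # hypothetical layer
x = torch.randn(1, 4, 10, 10, requires_grad=True)

grads = {}

def module_backward_hook(module, grad_input, grad_output):
    # grad_output holds the gradients w.r.t. the module's outputs.
    grads["module_hook"] = grad_output[0].detach()

def tensor_hook(grad):
    # Receives the gradient w.r.t. the tensor it is registered on.
    grads["tensor_hook"] = grad.detach()

handle = conv.register_full_backward_hook(module_backward_hook)

out = conv(x)
out.register_hook(tensor_hook)

loss = out.pow(2).sum()   # toy scalar so backward() can run
loss.backward()
handle.remove()

# Both hooks observe the gradient of the loss w.r.t. the conv output.
print(torch.allclose(grads["module_hook"], grads["tensor_hook"]))
```

In this setup the two hooks report the same gradient, so either can supply the activation gradient for Grad-CAM. A forward hook alone only sees activations, not gradients, so it has to be paired with one of the two.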

I am confused by how hooks work. Hooks in PyTorch do not receive a lot of attention (docs or blogs). Please feel free to answer individual chunks, I’ll follow up in the comments.