Hi, I'm currently trying to build PyTorch from source with custom options, but CUDA doesn't seem to be linked statically. How can I include CUDA and cuDNN statically?

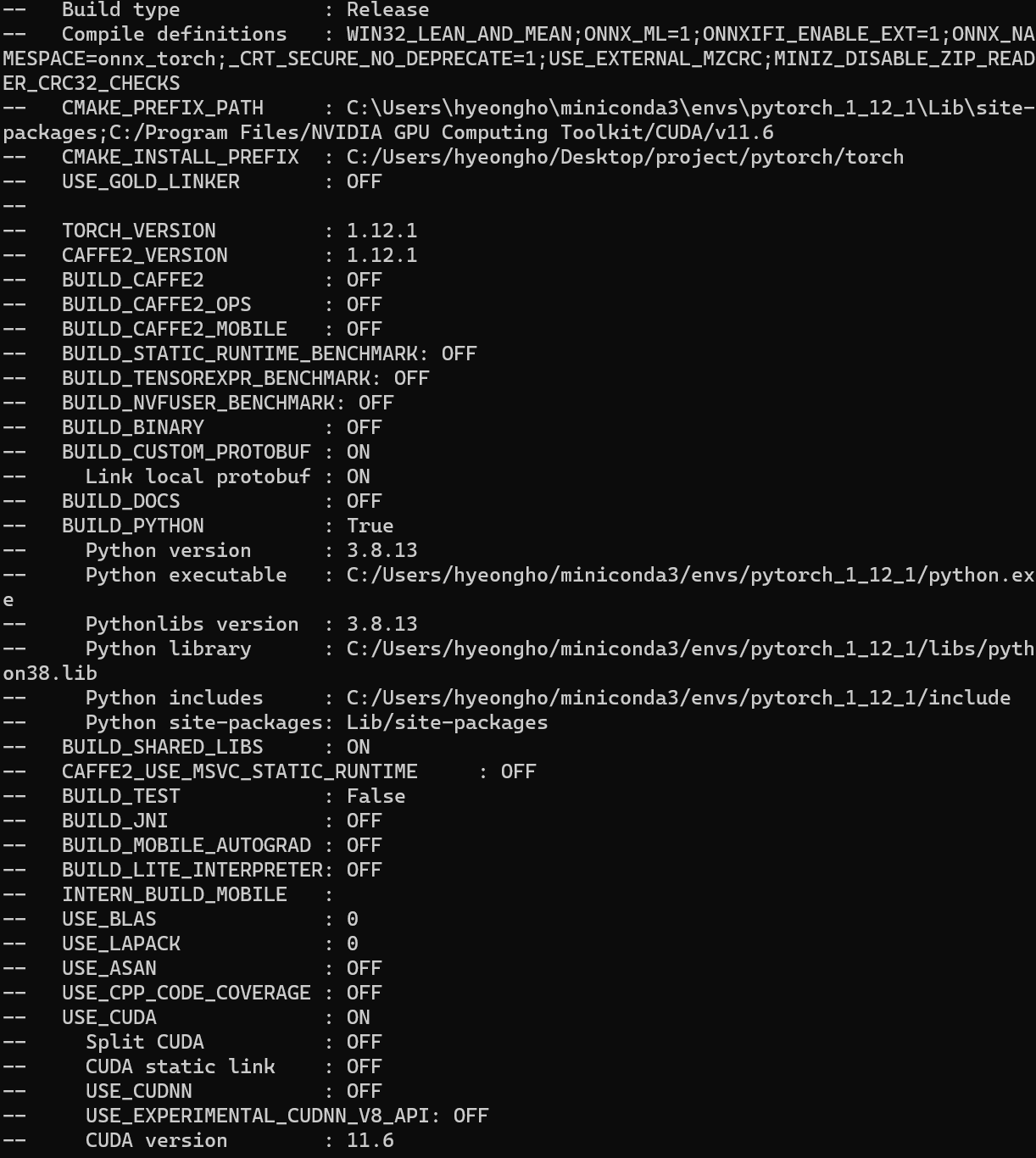

I have already turned on the following options:

USE_CUDA=ON

USE_STATIC_CUDNN=ON

USE_CUDNN=ON

CAFFE2_STATIC_LINK_CUDA=ON
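For reference, here is a minimal sketch of how these options could be collected in one place before starting the build. It assumes the options are passed to the build as environment variables; I am not certain that `CAFFE2_STATIC_LINK_CUDA` is picked up this way rather than needing to be set as a CMake option. The `build_env` helper and the `STATIC_CUDA_OPTIONS` name are hypothetical, and the `TORCH_CUDA_ARCH_LIST` value is the single architecture I am targeting.

```python
import os

# Option values taken from this post. Passing them as environment
# variables is an assumption about how the build reads them.
STATIC_CUDA_OPTIONS = {
    "USE_CUDA": "ON",
    "USE_CUDNN": "ON",
    "USE_STATIC_CUDNN": "ON",
    "CAFFE2_STATIC_LINK_CUDA": "ON",
    "TORCH_CUDA_ARCH_LIST": "8.6",
}


def build_env(base=None):
    """Return a copy of the environment with the build options applied.

    `base` defaults to the current process environment; the original
    mapping is never modified.
    """
    env = dict(os.environ if base is None else base)
    env.update(STATIC_CUDA_OPTIONS)
    return env


if __name__ == "__main__":
    # Print the options so they can be compared against the build log.
    env = build_env()
    for name in sorted(STATIC_CUDA_OPTIONS):
        print(f"{name}={env[name]}")
```

The resulting dictionary can be handed to `subprocess.run(..., env=build_env())` when launching the wheel build from the PyTorch checkout, which keeps the settings out of the global shell environment.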

My options:

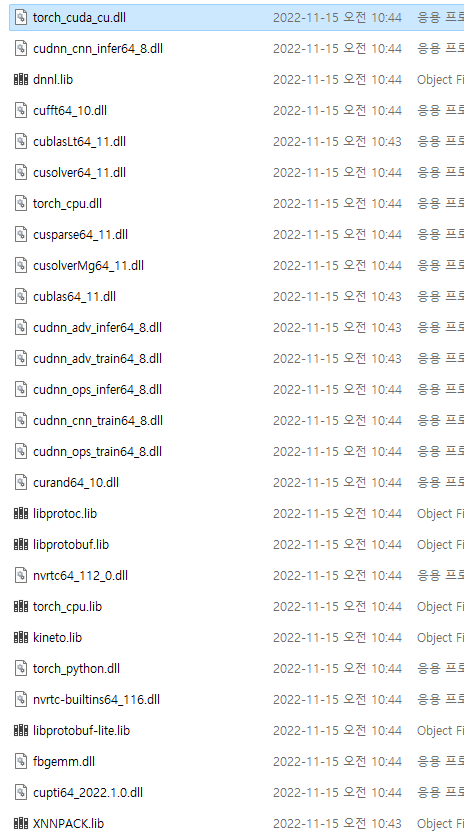

I want the following files to be included in my whl file (only for TORCH_CUDA_ARCH_LIST=8.6)

but my