
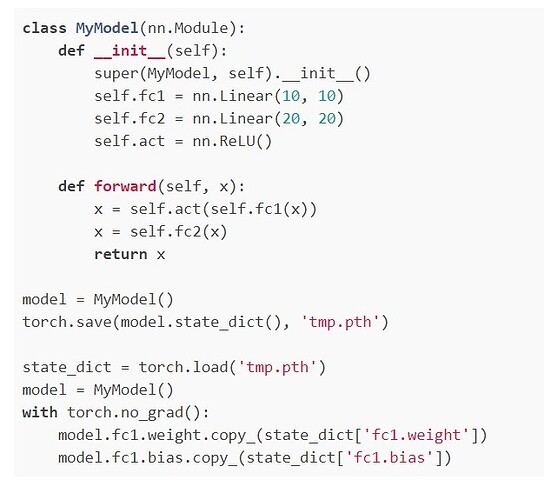

I tried copying the weights manually from the state dictionary, but I am only able to load a single layer.


Then I thought of adding the dictionary entries manually, but that process is impractical.

If some layer names match between the two models, you could load these parameters via `load_state_dict(..., strict=False)`:

    import torch.nn as nn
    from torchvision import models


    class MyModel(nn.Module):
        def __init__(self):
            super(MyModel, self).__init__()
            # Same definition as resnet152's conv1, so the weights are compatible
            self.conv1 = nn.Conv2d(3, 64, kernel_size=7, stride=2, padding=3, bias=False)
            # Not present in the reference model; keeps its random initialization
            self.new_layer = nn.Conv2d(64, 64, 3, 1, 1)

        def forward(self, x):
            x = self.conv1(x)
            x = self.new_layer(x)
            return x


    ref_model = models.resnet152()
    model = MyModel()
    model.load_state_dict(ref_model.state_dict(), strict=False)

    # compare
    print((model.conv1.weight == ref_model.conv1.weight).all())
    # > tensor(True)

Note that `strict=False` ignores all missing and unexpected keys, so you should double-check that the wanted parameters were indeed copied.
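One way to do that double check is to inspect the value returned by `load_state_dict`, which lists the missing and unexpected keys. The following is only a minimal sketch: `RefModel` is a small hypothetical stand-in for the reference network (so the example runs without torchvision), and `copied` is a helper name introduced here for illustration.

```python
import torch
import torch.nn as nn


class RefModel(nn.Module):
    # Hypothetical stand-in for the reference network (assumption, not resnet152)
    def __init__(self):
        super().__init__()
        self.conv1 = nn.Conv2d(3, 64, kernel_size=7, stride=2, padding=3, bias=False)
        self.fc = nn.Linear(64, 10)


class MyModel(nn.Module):
    def __init__(self):
        super().__init__()
        self.conv1 = nn.Conv2d(3, 64, kernel_size=7, stride=2, padding=3, bias=False)
        self.new_layer = nn.Conv2d(64, 64, 3, 1, 1)


ref_model = RefModel()
model = MyModel()

# load_state_dict returns a named tuple with missing_keys and unexpected_keys
result = model.load_state_dict(ref_model.state_dict(), strict=False)

# Keys the target model expects but the source state dict did not provide
print("missing:", result.missing_keys)
# Keys in the source state dict that the target model does not have
print("unexpected:", result.unexpected_keys)

# Every target key that is not missing was filled from the source
copied = sorted(set(model.state_dict()) - set(result.missing_keys))
print("copied:", copied)

# Confirm that the copied tensors really match the reference values
ref_state = ref_model.state_dict()
for name in copied:
    assert torch.equal(model.state_dict()[name], ref_state[name])
```

As far as I know, a key that matches by name but differs in shape still raises an error even with `strict=False`, so such entries would have to be filtered out of the state dict before loading.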