Hey all. I've exported a `.pt` file to a TensorRT `.engine` file so I can get faster inference with YOLOv5.

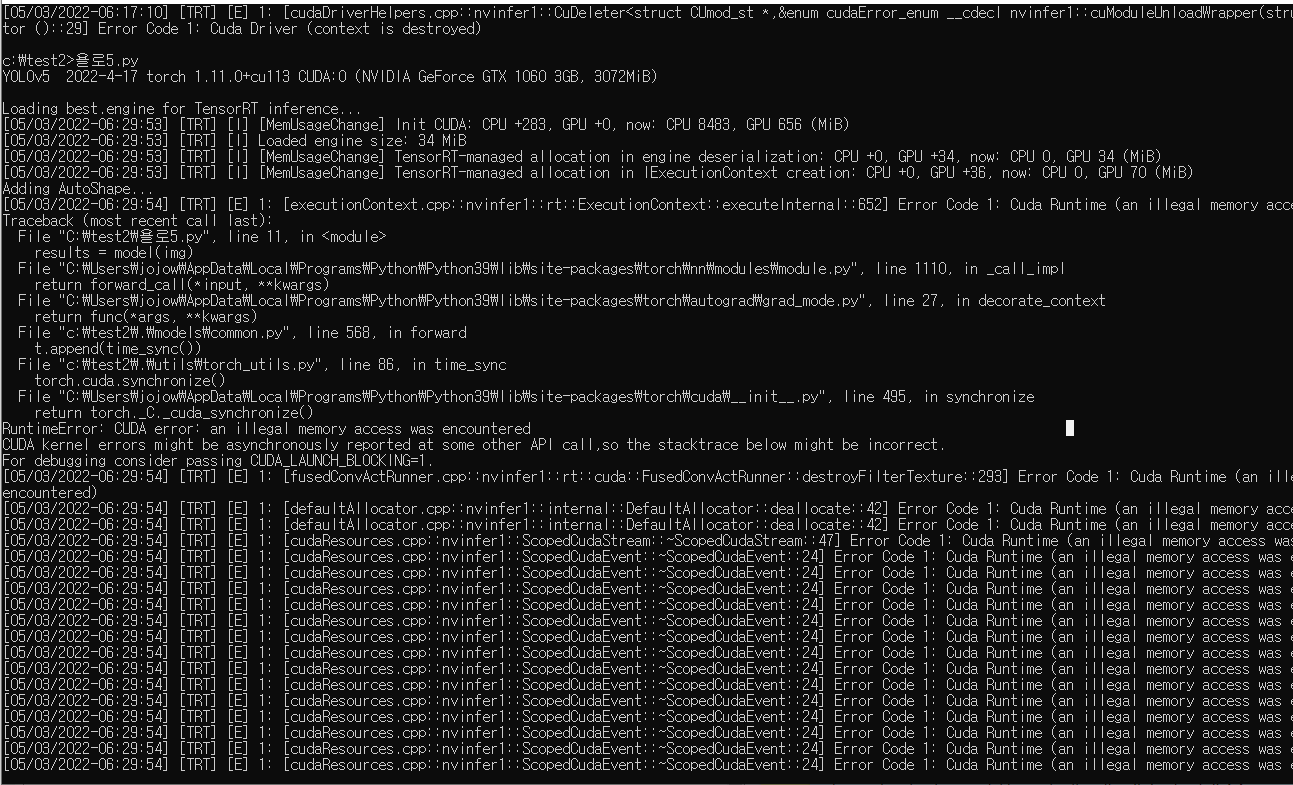
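For reference, the export step is usually run with YOLOv5's `export.py`. The sketch below only builds and prints that command; it does not run it. It assumes three things the post doesn't state: the working directory is the root of a local yolov5 clone, a CUDA GPU is available as device `0` (YOLOv5 refuses to export TensorRT engines on CPU), and the weights file is named `best.pt`.

```python
import shlex
import sys
from typing import List


def build_export_cmd(weights: str, device: str = "0", imgsz: int = 640) -> List[str]:
    """Build a YOLOv5 export.py command that writes a TensorRT .engine file.

    Assumption: the command is run from the root of a local yolov5 clone.
    """
    return [
        sys.executable, "export.py",
        "--weights", weights,
        "--include", "engine",   # ask export.py for a TensorRT engine
        "--device", device,      # TensorRT export must run on a CUDA GPU
        "--imgsz", str(imgsz),   # input size the engine is built for
    ]


cmd = build_export_cmd("best.pt")  # hypothetical weights path
print(shlex.join(cmd))
# To actually export: subprocess.run(cmd, check=True)
```

The engine is built for the `--imgsz` used at export time, so that value is worth keeping in mind when running inference later.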

But after loading the model, I get an error when I try to run inference.

Can anyone tell me what I'm missing and what I should do? :S (I'd also appreciate an easy example to follow!)

```python
import torch

# Model
# model = torch.hub.load('ultralytics/yolov5', 'yolov5s')  # or yolov5m, yolov5l, yolov5x, custom
# Load the exported TensorRT engine through the hubconf.py of the local YOLOv5 clone in './'
model = torch.hub.load('./', 'custom', path='./best.engine', source='local')

# Images
img = 'https://ultralytics.com/images/zidane.jpg'  # or file, Path, PIL, OpenCV, numpy, list

# Inference
results = model(img)

# Results
results.print()  # or .show(), .save(), .crop(), .pandas(), etc.
```

That's the code above, and here's the error message: