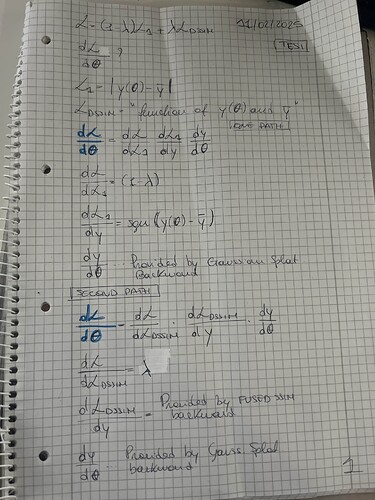

I’m trying to manually compute the gradients of the loss function at the top:

The loss is the combination of a L1 loss and L_{dssim} loss and both are function of my model’s prediction y(θ).

Therefore I have two ways of using the Chain Rule to calculate dL/dθ. As I have highlighted in blue. Which I imagine to be two parallel paths in the computational graph.

How do I combine them? – Or better, how does PyTorch do so I can replicate it?