Hello. This is my CustomDataSetClass:

class CustomDataSet(Dataset):

def __init__(self, main_dir, transform):

self.main_dir = main_dir

self.transform = transform

all_imgs = os.listdir(main_dir)

self.total_imgs = natsort.natsorted(all_imgs)

for file_name in self.total_imgs:

if '.txt' in file_name: self.total_imgs.remove(file_name)

if file_name == 'semantic': self.total_imgs.remove('semantic')

def __len__(self):

return len(self.total_imgs)

def __getitem__(self, idx):

img_loc = os.path.join(self.main_dir, self.total_imgs[idx])

image = Image.open(img_loc).convert("RGB")

tensor_image = self.transform(image)

return tensor_image

Here is how I create a list of datasets:

all_datasets = []

while folder_counter < num_train_folders:

#some code to get path_to_imgs which is the location of the image folder

train_dataset = CustomDataSet(path_to_imgs, transform)

all_datasets.append(train_dataset)

folder_counter += 1

Then I concat my datasets and create the dataloader and do the training:

final_dataset = torch.utils.data.ConcatDataset(all_datasets)

train_loader = data.DataLoader(final_dataset,

batch_size=batch_size,

shuffle=False,

num_workers=0,

pin_memory=True,

drop_last=True)

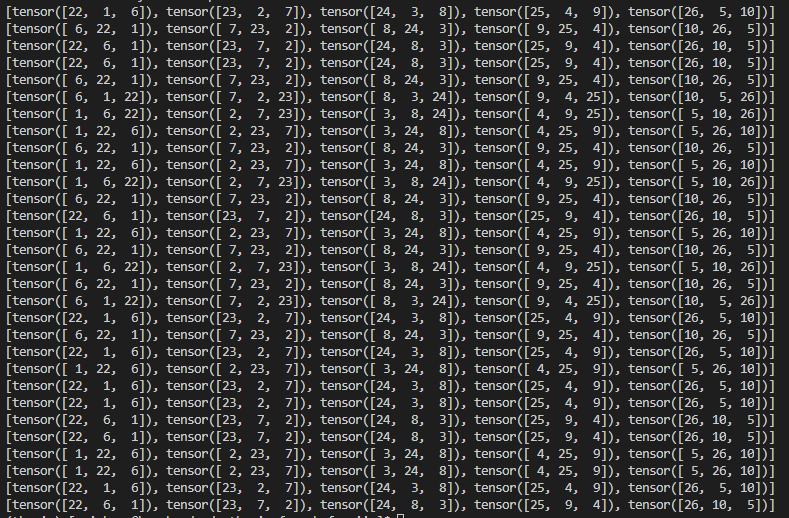

So, is the order of my data preserved? During training, will I go to each folder in theexact order that the concatenation was done and then grab all the images sequentially? For example:

I grab 150 images from folder 1, 100 images from folder 2 and 70 images from folder 3. I concatenate my the three datasets. During training I do:

for idx, input_seq in enumerate(data_loader):

#code to train

So, will the dataloader go through folder 1 and grab all the images inside there sequentially and then go to folder 2 and do the same and finally go to folder 3 and do the same as well? I tried reading the code for ConcatDataset but I can’t understand whether the order of my data willl be preserved or not.