I am new to PyTorch and to neural networks in general. I am following this tutorial: A Gentle Introduction to torch.autograd (PyTorch Tutorials 2.2.0+cu121 documentation)

There are two examples of differentiation using backward(): one for a scalar output and one for a non-scalar output.
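Below is a minimal sketch of the difference between the two cases. It assumes a recent PyTorch, where plain tensors accept requires_grad=True directly. The y = x * 2 graph and the error check are my own illustration and are not taken from the tutorial.

```python
import torch

x = torch.randn(3, requires_grad=True)

# Scalar output: backward() needs no argument (the implicit upstream gradient is 1.0).
s = (x * 2).sum()
s.backward()
print(x.grad)  # every entry is 2.0, since d(sum(2 * x)) / dx_i = 2

# Non-scalar output: backward() needs a gradient tensor with the same shape as y.
x.grad = None  # clear the gradient accumulated by the first backward() call
y = x * 2
error_message = ""
try:
    y.backward()  # fails: there is no implicit gradient for a non-scalar output
except RuntimeError as err:
    error_message = str(err)
    print("RuntimeError:", error_message)

y.backward(torch.tensor([0.1, 1.0, 0.0001]))
print(x.grad)  # 2 * [0.1, 1.0, 0.0001]
```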

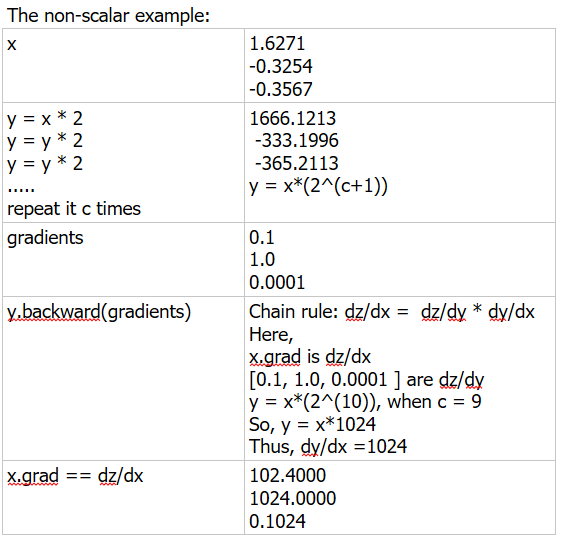

The scalar example:


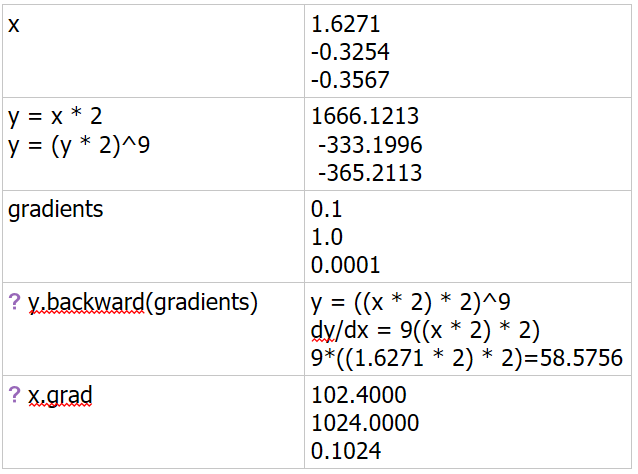

The non-scalar example:

```python
import torch
from torch.autograd import Variable

x = torch.randn(3)  # input is taken randomly
x = Variable(x, requires_grad=True)
y = x * 2

c = 0
while y.data.norm() < 1000:
    y = y * 2
    c += 1

gradients = torch.FloatTensor([0.1, 1.0, 0.0001])  # specifying gradient because the output y is non-scalar
y.backward(gradients)

print(c)
print(x.grad)
```

Output:

```
9
102.4000
1024.0000
0.1024
```
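One way to check what backward(gradients) does here is the following sketch. It assumes the vector-Jacobian-product reading described in the tutorial's vector calculus section. It builds the full Jacobian with torch.autograd.functional.jacobian and compares v @ J against x.grad. The helper name doubling_loop and the fixed seed are my own additions.

```python
import torch

torch.manual_seed(0)  # fixed seed so runs are reproducible (my addition, not in the original)

def doubling_loop(x):
    """Same computation as the example: y = x * 2, then keep doubling until ||y|| >= 1000."""
    y = x * 2
    c = 0
    while y.norm() < 1000:
        y = y * 2
        c += 1
    return y, c

x = torch.randn(3, requires_grad=True)
v = torch.tensor([0.1, 1.0, 0.0001])  # the `gradients` argument from the example

y, c = doubling_loop(x)
y.backward(v)

# Build the full 3x3 Jacobian dy/dx explicitly and form the vector-Jacobian product v^T J.
J = torch.autograd.functional.jacobian(lambda t: doubling_loop(t)[0], x.detach())
vjp = v @ J

print(c)
print(J)       # diagonal matrix with 2**(c + 1) on the diagonal
print(vjp)     # same values as x.grad
print(x.grad)
```

Because every operation is a multiplication by 2, J contains no entries of x, which would explain why only c matters.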

I tried to work through it the same way I did for the scalar example, but I can’t figure out how it works.

For a given value of c, I get exactly the same output regardless of the input values:

```
8
51.2000
512.0000
0.0512
```

```
10
204.8000
2048.0000
0.2048
```
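To test the "same output for a given c" observation directly, the sketch below fixes the number of doublings instead of letting the norm check decide it. It then compares x.grad for two different inputs. grad_after_fixed_doublings is a hypothetical helper written for this check, and the input values are arbitrary.

```python
import torch

v = torch.tensor([0.1, 1.0, 0.0001])  # the `gradients` argument from the example

def grad_after_fixed_doublings(x_values, c):
    """Hypothetical helper: y = x * 2 followed by exactly c more doublings,
    then return x.grad after y.backward(v)."""
    x = torch.tensor(x_values, requires_grad=True)
    y = x * 2
    for _ in range(c):
        y = y * 2
    y.backward(v)
    return x.grad

for c in (8, 9, 10):
    g1 = grad_after_fixed_doublings([0.3, -1.2, 2.5], c)
    g2 = grad_after_fixed_doublings([5.0, 0.01, -7.0], c)
    # Both inputs give the same gradient, v * 2**(c + 1), so only c matters
    print(c, g1, g2)
```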

I know the math behind getting 4.5000 4.5000 4.5000 4.5000 for the scalar example (shown above). Can you explain the math behind getting 102.4000 1024.0000 0.1024 for the non-scalar example? In particular, how does y.backward(gradients) compute these values?
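For reference, the numbers are consistent with the following derivation. It assumes that y.backward(gradients) computes the vector-Jacobian product $J^\top v$, as the tutorial's vector calculus section describes. Here v stands for the gradients tensor, and the notation is mine.

```latex
\begin{aligned}
y &= 2^{c+1}\,x \qquad \text{(one doubling before the loop, } c \text{ inside it)}\\
J &= \frac{\partial y}{\partial x} = \operatorname{diag}\!\left(2^{c+1},\, 2^{c+1},\, 2^{c+1}\right)\\
\texttt{x.grad} &= J^{\top} v = 2^{c+1}\, v\\
c = 9:\quad \texttt{x.grad} &= 1024 \cdot (0.1,\ 1.0,\ 0.0001) = (102.4,\ 1024,\ 0.1024)
\end{aligned}
```

Since x does not appear in J, this would also account for the output depending only on c.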

I have also asked this question on Stack Exchange, but no one has answered it so far: