I have a question about tensors. The `[]` operator, i.e. `__getitem__`, supports backpropagation for both indexing and slicing, but I do not understand how. Is there an overload, or some piece of code, that shows how this operation implements the forward and backward passes? I would like to know how it is registered and how a different backward function is chosen under different conditions. Thank you.

Supplement:

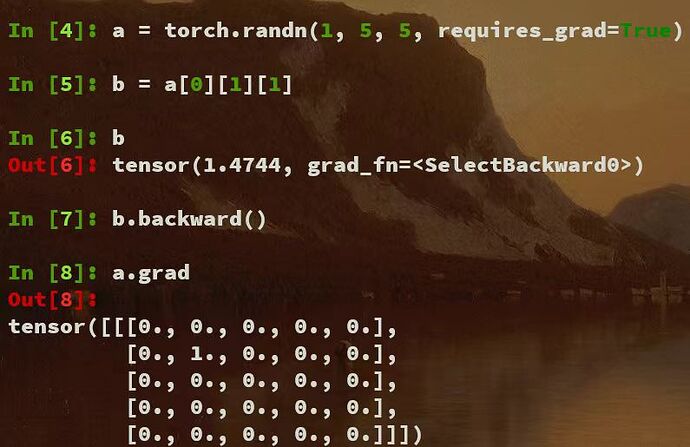

As the screenshot shows, its `grad_fn` is `SelectBackward`.
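For anyone who wants to reproduce this, here is a minimal sketch. The tensor shape and the variable names are my own choices for illustration, and I am assuming a reasonably recent PyTorch, where the node names may carry a trailing `0` (e.g. `SelectBackward0`).

```python
import torch

# Hypothetical example tensor; any 3-D tensor with requires_grad=True works.
a = torch.randn(2, 3, 4, requires_grad=True)

b = a[0][1][1]   # three integer indexes in a row -> 0-dim tensor
s = a[:, 1:]     # a slice instead of an integer index

# Name of the autograd node that produced each result.
print(type(b.grad_fn).__name__)
print(type(s.grad_fn).__name__)

# Walk the graph from b back to the leaf to see one node per [] call.
names = []
node = b.grad_fn
while node is not None:
    names.append(type(node).__name__)
    node = node.next_functions[0][0] if node.next_functions else None
print(names)

# Backward through the indexing: only the selected element gets a gradient.
b.backward()
print(a.grad[0, 1, 1], a.grad.sum())
```

So each integer index seems to add its own select node, while a slice produces a different node type.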

But I don't understand why, in a case like `a[0][1][1]`, the `[]` becomes a select op in PyTorch. Who registers it?