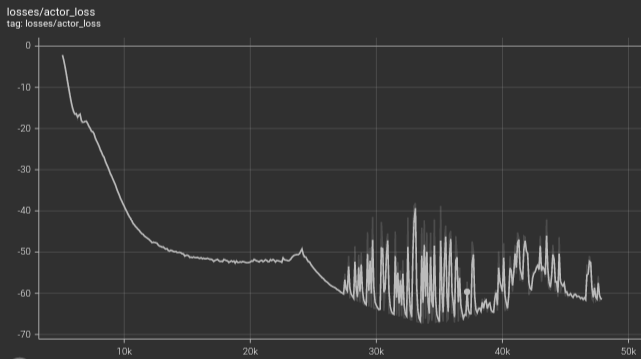

The plot above shows the actor loss from my TD3 training run. The network uses an RNN-type layer together with an MLP. After about 25k steps the loss starts to oscillate, and the expected reward is barely increasing. How should I decrease the learning rate so that the loss converges smoothly?
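
To make the question concrete, here is a minimal sketch of the kind of decay I have in mind: keep the actor learning rate constant until roughly the step where the oscillation starts, then shrink it exponentially down to a floor. Everything in it (`decayed_lr`, `base_lr`, `decay_start`, `decay_rate`, `decay_every`, `min_lr`, and all the default values) is a hypothetical placeholder, not my actual configuration, and I am not sure this is the right schedule for TD3.

```python
def decayed_lr(step, base_lr=1e-3, decay_start=25_000, decay_rate=0.5,
               decay_every=10_000, min_lr=1e-5):
    """Hypothetical schedule: constant, then exponential decay with a floor.

    All parameter names and defaults are illustrative assumptions.
    """
    # Before decay_start, leave the learning rate untouched.
    if step < decay_start:
        return base_lr
    # Afterwards, multiply by decay_rate once per decay_every steps
    # (fractional exponents give a smooth curve rather than a staircase).
    n_decays = (step - decay_start) / decay_every
    # Never drop below min_lr, so learning does not stall completely.
    return max(min_lr, base_lr * decay_rate ** n_decays)


if __name__ == "__main__":
    for step in (0, 25_000, 35_000, 45_000, 200_000):
        print(step, decayed_lr(step))
```

The value returned for the current step would then be written into the actor optimizer at each update. Whether the decay should start at 25k steps, and whether the critic should follow the same schedule, is part of what I am unsure about.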