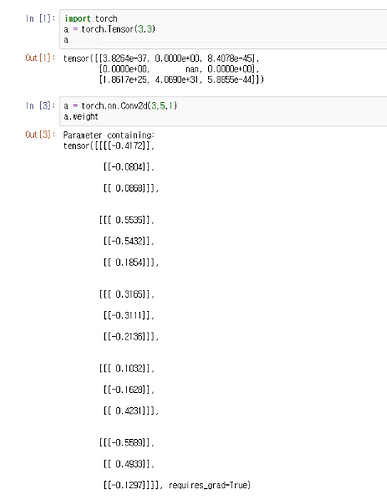

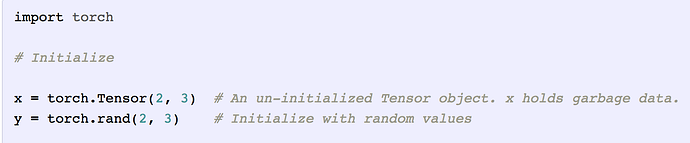

So `torch.Tensor(4, 4)` does not initialize the tensor. It only allocates memory and leaves whatever values (garbage) were already there. If you want random initialization, use `torch.rand`, which samples uniformly from [0, 1).

```python
>>> torch.rand(4,4)
tensor([[0.8693, 0.5824, 0.3661, 0.1016],
        [0.4629, 0.7107, 0.1525, 0.9696],
        [0.0603, 0.8134, 0.3207, 0.8813],
        [0.0799, 0.1383, 0.3611, 0.3585]])
>>> torch.Tensor(4,4)
tensor([[-0.0000, 0.0000, -0.0000, 0.0000],
        [ 0.0000, 0.0000, 0.0000, 0.0000],
        [-0.0000, 0.0000, -0.0000, 0.0000],
        [ 0.0000, 0.0000, 0.0000, 1.3564]])
>>> torch.Tensor(4,4)
tensor([[-0.0000, 0.0000, -0.0000, 0.0000],
        [ 0.0000, 0.0000, -0.0000, 0.0000],
        [-0.0000, 0.0000, 0.0000, 0.0000],
        [ 0.0799, 0.1383, 0.3611, 0.3585]])
>>> torch.Tensor(4,4)
tensor([[-0.0000, 0.0000, -0.0000, 0.0000],
        [ 0.0000, 0.0000, -0.0000, 0.0000],
        [-0.0000, 0.0000, 0.0000, 0.0000],
        [ 0.0000, 0.0000, 0.0000, 1.3564]])
>>> torch.rand(4,4)
tensor([[0.9820, 0.4046, 0.1679, 0.9906],
        [0.3627, 0.8029, 0.2323, 0.5281],
        [0.2631, 0.0574, 0.6574, 0.3545],
        [0.8812, 0.9527, 0.6162, 0.3369]])
```

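I can see why this is confusing, though: the leftover values can look as if the tensor had been randomly initialized. In the session above, the last row of the second `torch.Tensor(4,4)` call is exactly the last row of the first `torch.rand(4,4)` result, most likely because the allocator reused the memory freed by that earlier tensor.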
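A minimal sketch of the explicit alternatives, assuming a reasonably recent PyTorch. `torch.empty` is the modern way to request an uninitialized allocation (the same behavior as the legacy `torch.Tensor(4, 4)` call), while `torch.zeros`, `torch.rand` and `torch.randn` actually fill the tensor. The variable names are just for illustration.

```python
import torch

# torch.empty, like the legacy torch.Tensor(4, 4) constructor, only allocates
# memory: its contents are whatever bytes were there before, so don't read it
# before writing to it.
buf = torch.empty(4, 4)

# Explicitly initialized alternatives.
zeros = torch.zeros(4, 4)    # every element is 0.0
uniform = torch.rand(4, 4)   # samples from the uniform distribution on [0, 1)
normal = torch.randn(4, 4)   # samples from the standard normal N(0, 1)

# An uninitialized buffer can also be filled in place before it is used.
buf.uniform_(0.0, 1.0)       # buf now also holds samples from [0, 1)

# Seeding the global generator makes torch.rand reproducible.
torch.manual_seed(0)
a = torch.rand(4, 4)
torch.manual_seed(0)
b = torch.rand(4, 4)
print(torch.equal(a, b))  # True
```

The in-place `uniform_` route is handy when you already have a buffer from `torch.empty`; otherwise calling `torch.rand` directly is simpler.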

This blog has a good writeup: