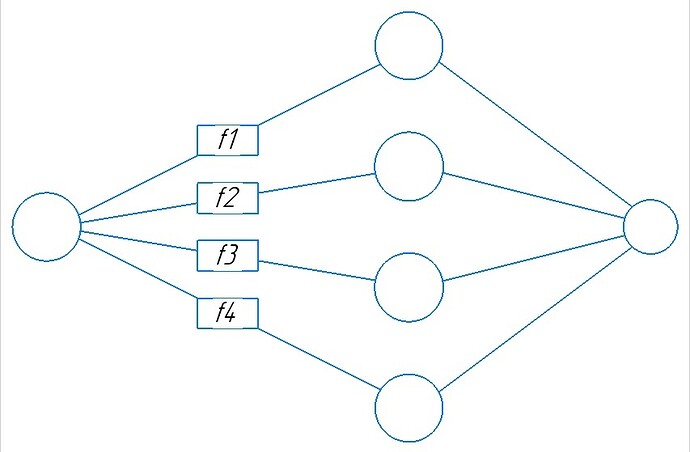

Hello! How can I add functions, different for every neuron, between two layers of a fully connected NN? Is it possible?

```python
class PolyNet(torch.nn.Module):
    def __init__(self):
        super(PolyNet, self).__init__()
        self.fc1 = torch.nn.Linear(1, 4, bias=False)
        # something here?
        self.fc2 = torch.nn.Linear(4, 1, bias=False)

    def forward(self, x):
        x = self.fc1(x)
        # or mb here?
        x = self.fc2(x)
        return x
```

Yes, you can freely manipulate the intermediate activation x (output of fc1) in your forward method before passing it to the next layer.

Thanks, but how do I apply a function to a specific neuron? Would you mind giving me an example in code, please?

I assume “neuron” means a specific output activation in this use case.

If so, you can directly apply the method on it and concatenate the results afterwards.

Depending on your actual use case, something like this would work:

```python
import torch


class PolyNet(torch.nn.Module):
    def __init__(self):
        super(PolyNet, self).__init__()
        self.fc1 = torch.nn.Linear(1, 4, bias=False)
        self.fc2 = torch.nn.Linear(4, 1, bias=False)

    def forward(self, x):
        x = self.fc1(x)
        print("DEBUG: before\n{}".format(x))

        # apply method on "neuron2"
        x_tmp = x[:, 2]  # index activation
        x_tmp = x_tmp * 1000.  # apply op on neuron
        x = torch.cat((x[:, :2], x_tmp[:, None], x[:, 3:]), dim=1)  # concatenate
        print("DEBUG: after\n{}".format(x))

        x = self.fc2(x)
        return x


model = PolyNet()
x = torch.randn(2, 1)
out = model(x)
# DEBUG: before
# tensor([[ 0.6320, -0.1368,  0.3954,  0.0055],
#         [-0.3955,  0.0856, -0.2474, -0.0034]], grad_fn=<MmBackward0>)
# DEBUG: after
# tensor([[ 6.3205e-01, -1.3676e-01,  3.9545e+02,  5.4939e-03],
#         [-3.9545e-01,  8.5570e-02, -2.4742e+02, -3.4374e-03]],
#        grad_fn=<CatBackward0>)
```
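If you want a different function on every neuron rather than just one, a possible generalization is to keep one callable per hidden unit and rebuild the activation with `torch.stack`. This is only a minimal sketch: the class name `PerNeuronNet`, the `funcs` argument, and the particular functions in the list are made up for illustration and are not part of the original model.

```python
import torch


class PerNeuronNet(torch.nn.Module):
    def __init__(self, funcs):
        super().__init__()
        # Hypothetical: one callable per hidden neuron, so the hidden size is len(funcs)
        self.funcs = funcs
        self.fc1 = torch.nn.Linear(1, len(funcs), bias=False)
        self.fc2 = torch.nn.Linear(len(funcs), 1, bias=False)

    def forward(self, x):
        x = self.fc1(x)
        # Apply the i-th function to the i-th column, then stack the
        # columns back into a (batch, num_neurons) tensor
        x = torch.stack([f(x[:, i]) for i, f in enumerate(self.funcs)], dim=1)
        return self.fc2(x)


# Illustrative choice of functions, one per neuron
funcs = [torch.sin, torch.tanh, lambda t: t * 1000.0, lambda t: t ** 2]
model = PerNeuronNet(funcs)
out = model(torch.randn(2, 1))
print(out.shape)  # torch.Size([2, 1])
```

Indexing and stacking are both tracked by autograd, so gradients still flow back to `fc1` through each function. The Python loop over columns is fine for a handful of neurons; for wide layers a vectorized formulation would likely be faster.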
