I would like to assign a fixed random element to the weight of each connection in a neural network. Let's say there are only two values available for each weight to select from, instead of updating it continuously. How can I assign those two values? Any ideas? @ptrblck

You can directly assign any tensor to the trainable parameters by wrapping the assignment into a no_grad() guard as seen here:

    import torch
    import torch.nn as nn

    lin = nn.Linear(10, 10)

    with torch.no_grad():
        lin.weight.copy_(torch.ones(10, 10))
        lin.bias.copy_(torch.ones(10))

Note that I’ve used torch.ones as a simple example, but you can use your “fixed” values.
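As a minimal sketch of what using your own "fixed" values could look like, the snippet below fills the weight with entries drawn from a set of two allowed values. The values in `allowed` and the random choice per connection are assumptions made for illustration only; they are not taken from the question.

```python
import torch
import torch.nn as nn

torch.manual_seed(0)
lin = nn.Linear(10, 10)

# Hypothetical pair of allowed weight values (an assumption for this example)
allowed = torch.tensor([-0.5, 0.5])

# Pick one of the two allowed values at random for every connection
idx = torch.randint(0, 2, lin.weight.shape)
fixed_weight = allowed[idx]  # same shape as lin.weight

# Assign the tensor without recording the copy in autograd
with torch.no_grad():
    lin.weight.copy_(fixed_weight)
```

After the copy, every entry of `lin.weight` is one of the two values in `allowed`.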

I’m not sure if I understand the general use case correctly, but I assume you don’t want to “train” the model while allowing it to pick only your fixed values, as I wouldn’t know how this should work.

Thanks for your response. Let me explain it in a better way. I am using a 2-layer convolutional neural network to classify CIFAR-10. The algorithm needs to assign K fixed values to the weight of each connection. These values are sampled from a Glorot/Xavier uniform distribution. The weight of my first layer has the shape [6, 3, 5, 5], so this layer alone has 6 * 3 * 5 * 5 = 450 weights. Since we need K fixed values per weight (connection), the first layer holds 450 * K candidate values in total. Each candidate value is associated with a score, and only these scores are updated through backpropagation, not the weights directly. After the scores are updated, we look at the scores associated with each connection, select one value per connection, assign it to the network manually, and test the network on the test samples. In summary, we select the weight for each connection from a fixed set of numbers instead of updating it continuously. For reference, this is the paper whose method I am trying to implement: [2101.06475] Slot Machines: Discovering Winning Combinations of Random Weights in Neural Networks. It would be great if you could give me an idea of how to achieve this.

Thanks. I would really appreciate any hint.

I would assume that my example code snippet should work for the actual assignment.

I’m unsure how the scoring and selection work, but I assume your general workflow might be:

- create the scores

- select the corresponding “discrete” values based on the scores

- create the tensor which should be assigned to the parameter

- assign the tensor

- test the new model with your validation data
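The steps above could be sketched roughly as follows. This is only an illustration under assumptions: `K`, the `candidates` and `scores` tensors, and the "pick the highest score" rule are hypothetical names and choices, and the random scores stand in for whatever scoring you actually use.

```python
import torch
import torch.nn as nn

torch.manual_seed(0)
K = 2  # assumed number of candidate values per connection
lin = nn.Linear(10, 4)

# K fixed candidate values per connection, drawn with Xavier/Glorot uniform init
candidates = torch.empty(K, *lin.weight.shape)  # [K, 4, 10]
for k in range(K):
    nn.init.xavier_uniform_(candidates[k])

# 1. create the scores (random placeholders here)
scores = torch.rand(K, *lin.weight.shape)

# 2. select, per connection, the index of the candidate with the highest score
best = scores.argmax(dim=0, keepdim=True)  # [1, 4, 10]

# 3. create the tensor which should be assigned to the parameter
new_weight = candidates.gather(0, best).squeeze(0)  # [4, 10]

# 4. assign the tensor
with torch.no_grad():
    lin.weight.copy_(new_weight)

# 5. test the new model (random input as a stand-in for validation data)
x = torch.randn(8, 10)
with torch.no_grad():
    out = lin(x)
```

Keeping the candidates in a separate tensor with an extra leading dimension of size `K` avoids having to store more than one value in the parameter itself.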

During the training process (forward and backward pass), how can I assign two values to a single weight for the optimization? Since each connection has two choices for its weight, these values should be placed one by one in place of the weight during the forward and backward pass. So, if we have 100 parameters, then (100*100) possible weight combinations should be tested during training (weight optimization). Any ideas?

I’m not sure I understand the question correctly, but you cannot assign multiple values to a single scalar.

My previous code snippet shows how to assign a tensor to a parameter.

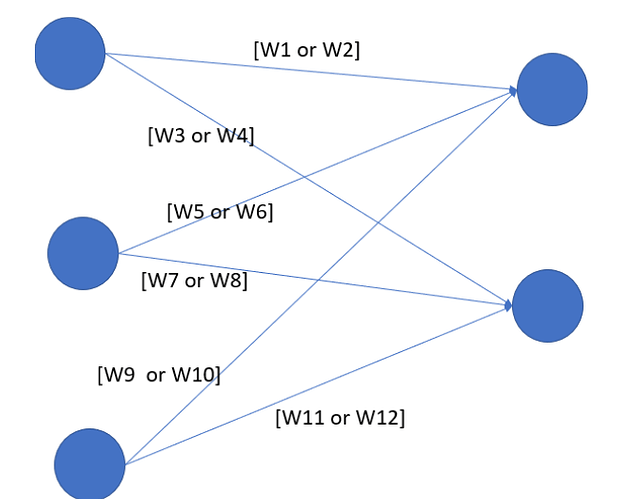

I need to optimize a fully connected 2-layer neural network which has only two choices per weight, which are manually assigned as shown in the picture. How can I optimize this sort of network? I hope the picture makes it clear. During the forward pass I would like to use both values one by one (e.g. w1 and w2) to optimize the network, and in the end I have to select one. My question is how and where I should declare these values so that they are considered during the optimization process. Do I need to write a custom optimizer or create some sort of loop?

Yes, the “optimization” would need a custom logic, since the default optimizers in PyTorch update parameters based on gradients, while your approach needs a “selection” algorithm, which you would need to implement manually (at least I’m not aware of any library or automatic way to do this kind of optimization for you).
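One possible way to write such selection logic by hand is sketched below. It is an assumption on my side, not the paper's reference code: the candidates are kept as a fixed buffer, a learnable score is stored per candidate, the forward pass uses the highest-scoring candidate for each connection, and a straight-through trick lets gradients reach the scores. `SlotLinear` and all names in it are hypothetical.

```python
import torch
import torch.nn as nn
import torch.nn.functional as F


class SlotLinear(nn.Module):
    """Hypothetical layer: K fixed candidates per connection, learnable scores."""

    def __init__(self, in_features, out_features, K=2):
        super().__init__()
        cand = torch.empty(K, out_features, in_features)
        for k in range(K):
            nn.init.xavier_uniform_(cand[k])
        # Buffer, not a parameter: the candidate values are never updated
        self.register_buffer("candidates", cand)
        self.scores = nn.Parameter(torch.rand(K, out_features, in_features))

    def selected_weight(self):
        K = self.scores.shape[0]
        idx = self.scores.argmax(dim=0)  # [out, in]
        hard = F.one_hot(idx, K).permute(2, 0, 1).to(self.scores.dtype)
        # Straight-through: the forward value equals the one-hot mask,
        # while the backward pass treats the mask as if it were the scores
        mask = hard + (self.scores - self.scores.detach())
        return (mask * self.candidates).sum(dim=0)

    def forward(self, x):
        return F.linear(x, self.selected_weight())


torch.manual_seed(0)
layer = SlotLinear(10, 4)
opt = torch.optim.SGD(layer.parameters(), lr=0.1)  # only the scores are parameters
x, y = torch.randn(32, 10), torch.randint(0, 4, (32,))
before = layer.candidates.clone()

for _ in range(5):
    opt.zero_grad()
    loss = F.cross_entropy(layer(x), y)
    loss.backward()
    opt.step()
```

In this sketch a standard optimizer is reused, but it only ever touches the scores; the selection itself is the manual part, and the weight used in every forward pass is always one of the fixed candidates.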