I want to build a network that takes 1D features and 2D features and feeds each into its own sub-network (let's say one network with 1D convolutions and another with 2D convolutions), and then combines them at the end to give one output. I do not know how to implement this. Can you give me some ideas?

Thanks in advance

Assuming you have created both models, each accepting features of a different dimensionality, you could concatenate their outputs if their shapes allow it.

Here is a simple example:

```python
import torch
import torch.nn as nn


class ModelA(nn.Module):
    """Branch for 1D inputs of shape (batch, 1, 10)."""

    def __init__(self):
        super().__init__()
        self.conv = nn.Conv1d(1, 1, 1)
        self.lin = nn.Linear(10, 10)

    def forward(self, x):
        x = self.conv(x)
        x = x.view(x.size(0), -1)  # flatten to (batch, 10)
        x = self.lin(x)
        return x


class ModelB(nn.Module):
    """Branch for 2D inputs of shape (batch, 1, 5, 2)."""

    def __init__(self):
        super().__init__()
        self.conv = nn.Conv2d(1, 1, 1)
        self.lin = nn.Linear(10, 10)

    def forward(self, x):
        x = self.conv(x)
        x = x.view(x.size(0), -1)  # flatten 5 * 2 = 10 features
        x = self.lin(x)
        return x


modelA = ModelA()
modelB = ModelB()

xA = torch.randn(1, 1, 10)
xB = torch.randn(1, 1, 5, 2)

outA = modelA(xA)
outB = modelB(xB)

# Both outputs are (1, 10), so they can be joined along the feature dimension.
out = torch.cat((outA, outB), dim=1)
print(out.shape)  # torch.Size([1, 20])
```
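To turn the two branches into one model with a single output, one option is to wrap them in a parent module and put a small head on top of the concatenated features. The sketch below assumes each branch returns a flat `(batch, features)` tensor; `TwoBranchNet`, `branch_1d` and `branch_2d` are hypothetical names, and the branches are compact stand-ins for `ModelA` and `ModelB`.

```python
import torch
import torch.nn as nn


class TwoBranchNet(nn.Module):
    """Hypothetical wrapper: runs two sub-networks on their own inputs and
    maps the concatenated results to one output with a linear head."""

    def __init__(self, branch_a, branch_b, feat_a, feat_b, num_outputs):
        super().__init__()
        self.branch_a = branch_a
        self.branch_b = branch_b
        self.head = nn.Linear(feat_a + feat_b, num_outputs)

    def forward(self, x_a, x_b):
        out_a = self.branch_a(x_a)                # (batch, feat_a)
        out_b = self.branch_b(x_b)                # (batch, feat_b)
        fused = torch.cat((out_a, out_b), dim=1)  # (batch, feat_a + feat_b)
        return self.head(fused)                   # (batch, num_outputs)


# Stand-ins for ModelA / ModelB above (same layer sizes).
branch_1d = nn.Sequential(nn.Conv1d(1, 1, 1), nn.Flatten(), nn.Linear(10, 10))
branch_2d = nn.Sequential(nn.Conv2d(1, 1, 1), nn.Flatten(), nn.Linear(10, 10))

model = TwoBranchNet(branch_1d, branch_2d, feat_a=10, feat_b=10, num_outputs=1)
out = model(torch.randn(4, 1, 10), torch.randn(4, 1, 5, 2))
print(out.shape)  # torch.Size([4, 1])

# A single loss backpropagates through both branches.
loss = out.pow(2).mean()
loss.backward()
```

Because both branches are submodules of one `nn.Module`, a single optimizer over `model.parameters()` trains them jointly.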

Hi,

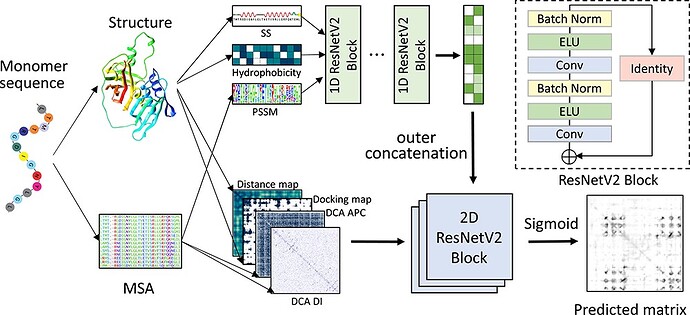

Thanks a lot for your help, but I think the last part of my question was a little misleading. What I meant was a network that takes 2 inputs of different dimensions and produces one result; I did not mean simple concatenation. I have attached a picture for reference, it's from a paper. Sorry for the misleading question.

I’m not familiar with this figure/model, but it seems that features are packed via an “outer concatenation”? Could you describe how it was done, if not through concatenating tensors, please?
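For reference, one common reading of "outer concatenation" is a pairwise operation: 1D features of shape `(batch, C, L)` become a 2D map of shape `(batch, 2C, L, L)` whose entry at `(i, j)` is the features of position `i` concatenated with those of position `j`. This is an assumption about what the figure means, not a confirmed description of that paper (some variants also add extra per-pair terms); `outer_concat` is a hypothetical helper name.

```python
import torch


def outer_concat(x):
    """Hypothetical helper: (batch, C, L) -> (batch, 2C, L, L).

    Entry [:, :, i, j] is x[:, :, i] stacked with x[:, :, j] along channels.
    """
    b, c, length = x.shape
    rows = x.unsqueeze(3).expand(b, c, length, length)  # [.., i, j] = x[.., i]
    cols = x.unsqueeze(2).expand(b, c, length, length)  # [.., i, j] = x[.., j]
    return torch.cat((rows, cols), dim=1)


x = torch.randn(2, 3, 5)
pair = outer_concat(x)
print(pair.shape)  # torch.Size([2, 6, 5, 5])
```

`expand` only creates views, so the `L x L` copy is materialized once, by `torch.cat`.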

I am also not quite sure what they did, and I am fairly new to this. But here is my understanding: they extracted 1D features, as shown in the top part of the image, and also extracted 2D features from the data (the features can be generated in many ways, so that is not the problem). The part that intrigues me is how these 1D and 2D features can be combined to train one single network (in this case a 2D ResNetV2), as presented in the picture.
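Assuming the pipeline is "1D conv branch, then outer concatenation, then stack with the 2D features, then a 2D conv network", a minimal end-to-end sketch could look like the one below. Everything here is illustrative: `OneDTwoDNet`, the channel sizes and the two-layer 2D trunk (a small stand-in for a real 2D ResNetV2) are hypothetical, and the 2D features are assumed to already be `L x L` maps aligned with the 1D sequence length.

```python
import torch
import torch.nn as nn


class OneDTwoDNet(nn.Module):
    """Hypothetical sketch: 1D conv branch -> outer concatenation ->
    stack with 2D features -> 2D conv trunk (stand-in for a 2D ResNet)."""

    def __init__(self, c1d_in, c2d_in, hidden_1d=16, hidden_2d=32):
        super().__init__()
        # 1D branch over the sequence; padding=1 keeps the length L.
        self.branch_1d = nn.Sequential(
            nn.Conv1d(c1d_in, hidden_1d, kernel_size=3, padding=1),
            nn.ReLU(),
        )
        # 2D trunk sees 2 * hidden_1d pair channels plus the given 2D features.
        self.trunk_2d = nn.Sequential(
            nn.Conv2d(2 * hidden_1d + c2d_in, hidden_2d, kernel_size=3, padding=1),
            nn.ReLU(),
            nn.Conv2d(hidden_2d, 1, kernel_size=1),  # one value per (i, j)
        )

    @staticmethod
    def outer_concat(x):
        b, c, length = x.shape
        rows = x.unsqueeze(3).expand(b, c, length, length)
        cols = x.unsqueeze(2).expand(b, c, length, length)
        return torch.cat((rows, cols), dim=1)

    def forward(self, feat_1d, feat_2d):
        # feat_1d: (batch, c1d_in, L); feat_2d: (batch, c2d_in, L, L)
        h = self.branch_1d(feat_1d)            # (batch, hidden_1d, L)
        pair = self.outer_concat(h)            # (batch, 2 * hidden_1d, L, L)
        x = torch.cat((pair, feat_2d), dim=1)  # stack along channels
        return self.trunk_2d(x)                # (batch, 1, L, L)


seq_len = 12
model = OneDTwoDNet(c1d_in=20, c2d_in=4)
feat_1d = torch.randn(2, 20, seq_len)
feat_2d = torch.randn(2, 4, seq_len, seq_len)
out = model(feat_1d, feat_2d)
print(out.shape)  # torch.Size([2, 1, 12, 12])

# One loss trains the 1D branch and the 2D trunk together.
target = torch.randint(0, 2, out.shape).float()
loss = nn.functional.binary_cross_entropy_with_logits(out, target)
loss.backward()
```

This produces one value per position pair; if you need a single prediction per sample instead, a pooling layer such as `nn.AdaptiveAvgPool2d(1)` followed by a linear layer could replace the last convolution.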

Sincerely

Raj