Hi all,

I’m not an expert in PyTorch, and I’m trying to figure out how to build this specific type of neural network architecture.

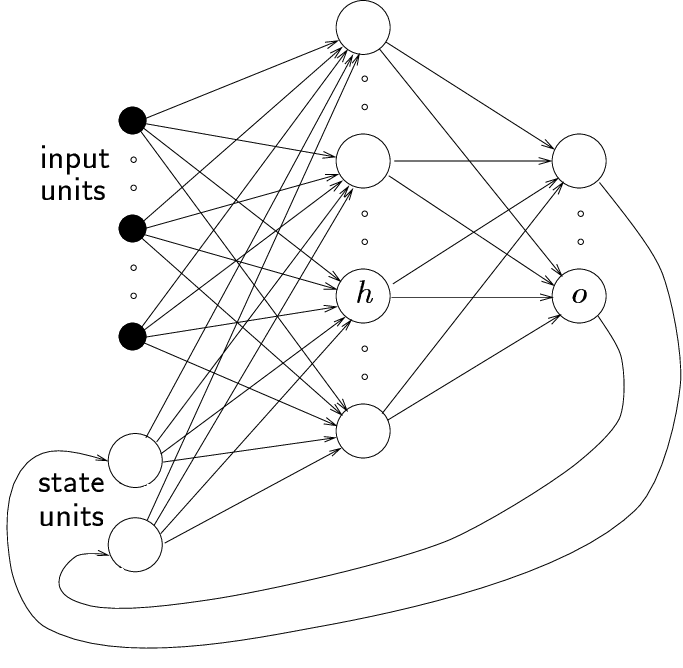

It could be described as a Jordan network, or perhaps more generally as a recurrent neural network (RNN).

As you can see, the input layer receives an input vector concatenated with a state vector, and the state vector is simply the network’s output from the previous time step. This means that for training I only need to provide the initial state X0, a time series of inputs, and the corresponding time series of target outputs to compare the predictions against.
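A minimal sketch of a single time step as described above, assuming one hidden layer with a tanh activation (the names `JordanStep`, `input_dim`, `state_dim` and `hidden_dim` are hypothetical). The only hard constraint is that the output size equals the state size, so the output can be fed back in:

```python
import torch
import torch.nn as nn

class JordanStep(nn.Module):
    """Hypothetical module for one step: [u_t, x_{t-1}] -> x_t."""

    def __init__(self, input_dim: int, state_dim: int, hidden_dim: int = 32):
        super().__init__()
        self.net = nn.Sequential(
            nn.Linear(input_dim + state_dim, hidden_dim),
            nn.Tanh(),  # assumed activation
            nn.Linear(hidden_dim, state_dim),  # output size == state size, so it can be fed back
        )

    def forward(self, u_t: torch.Tensor, x_prev: torch.Tensor) -> torch.Tensor:
        # Concatenate along the feature dimension: (batch, input_dim + state_dim)
        z = torch.cat([u_t, x_prev], dim=-1)
        return self.net(z)

step = JordanStep(input_dim=3, state_dim=2)
u_t = torch.randn(4, 3)     # batch of 4 input vectors
x_prev = torch.randn(4, 2)  # batch of 4 previous states (previous outputs)
x_t = step(u_t, x_prev)
print(x_t.shape)  # torch.Size([4, 2])
```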

I would like the network to have an arbitrary depth (number of hidden layers), and the number of time steps considered should be arbitrary as well.
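One possible way to get both kinds of flexibility, sketched under the assumption that a list of hidden sizes is an acceptable way to specify depth (`make_mlp`, `rollout` and `hidden_sizes` are hypothetical names): the depth is set when the `nn.Sequential` is built, and the number of time steps is simply the length of the input series passed to the loop.

```python
import torch
import torch.nn as nn

def make_mlp(in_dim, hidden_sizes, out_dim):
    """Build a dense network with one Linear+Tanh block per entry in hidden_sizes."""
    layers = []
    prev = in_dim
    for h in hidden_sizes:
        layers += [nn.Linear(prev, h), nn.Tanh()]
        prev = h
    layers.append(nn.Linear(prev, out_dim))  # output layer, no activation
    return nn.Sequential(*layers)

def rollout(mlp, x0, inputs):
    """Feed each output back as the next state.

    x0: (batch, state_dim); inputs: (T, batch, input_dim) -> (T, batch, state_dim)
    """
    x = x0
    outputs = []
    for u_t in inputs:  # iterating a tensor walks over its first (time) dimension
        x = mlp(torch.cat([u_t, x], dim=-1))
        outputs.append(x)
    return torch.stack(outputs)

input_dim, state_dim = 3, 2
mlp = make_mlp(input_dim + state_dim, hidden_sizes=[64, 64, 32], out_dim=state_dim)
x0 = torch.zeros(8, state_dim)
inputs = torch.randn(10, 8, input_dim)  # T = 10 steps, batch of 8
preds = rollout(mlp, x0, inputs)
print(preds.shape)  # torch.Size([10, 8, 2])
```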

The loss should be computed as a sum of the errors of each time step.
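A minimal training sketch for a loss summed over time steps, assuming mean squared error per step and the Adam optimizer (both are choices for illustration, and the random `inputs`/`targets` are placeholder data, not real measurements). Calling `backward()` on the accumulated loss backpropagates through all the unrolled steps at once:

```python
import torch
import torch.nn as nn
import torch.nn.functional as F

torch.manual_seed(0)
input_dim, state_dim, T, batch = 3, 2, 10, 8

mlp = nn.Sequential(
    nn.Linear(input_dim + state_dim, 32), nn.Tanh(),
    nn.Linear(32, state_dim),
)
optimizer = torch.optim.Adam(mlp.parameters(), lr=1e-2)

# Placeholder data: initial state X0, input series and target output series
x0 = torch.zeros(batch, state_dim)
inputs = torch.randn(T, batch, input_dim)
targets = torch.randn(T, batch, state_dim)

losses = []
for epoch in range(100):
    x = x0
    loss = torch.zeros(())
    for t in range(T):
        x = mlp(torch.cat([inputs[t], x], dim=-1))  # prediction fed back as next state
        loss = loss + F.mse_loss(x, targets[t])     # add the error of time step t
    optimizer.zero_grad()
    loss.backward()  # gradients flow through all T unrolled steps
    optimizer.step()
    losses.append(loss.item())

print(f"first loss {losses[0]:.3f}, last loss {losses[-1]:.3f}")
```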

So, for training, it is an RNN made of an arbitrary number of hidden dense layers with a very specific form of state vector, while once trained, I will use it for inference as a simple feedforward network.
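At inference time the same module can be called once per step like any feedforward network. A sketch, assuming the trained weights would be loaded from a checkpoint (the file name in the comment is hypothetical, and here the weights are left untrained so the snippet runs on its own):

```python
import torch
import torch.nn as nn

input_dim, state_dim = 3, 2
mlp = nn.Sequential(
    nn.Linear(input_dim + state_dim, 32), nn.Tanh(),
    nn.Linear(32, state_dim),
)
# In practice: mlp.load_state_dict(torch.load("model.pt"))  # hypothetical file name
mlp.eval()

with torch.no_grad():  # no graph needed for a single forward pass
    u_t = torch.randn(1, input_dim)
    x_prev = torch.zeros(1, state_dim)
    x_next = mlp(torch.cat([u_t, x_prev], dim=-1))

print(x_next.shape)  # torch.Size([1, 2])
```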

I don’t understand whether I can use the PyTorch RNN implementations, because there the “state” does not have a specific form that I impose, but depends on the network architecture. Also, I cannot place a multilayer dense network inside the recurrence, which in my case goes only from the output back to the input.
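To make the concern concrete: the PyTorch documentation gives the `nn.RNNCell` update as a single affine map of the input and hidden state followed by tanh, so its state is a learned hidden vector rather than your predicted output. This small check (sizes chosen arbitrarily) reproduces the cell’s output by hand using its `weight_ih`, `weight_hh`, `bias_ih` and `bias_hh` parameters:

```python
import torch
import torch.nn as nn

torch.manual_seed(0)
input_dim, state_dim = 3, 2
cell = nn.RNNCell(input_dim, state_dim)  # default nonlinearity: tanh

u = torch.randn(4, input_dim)
h = torch.randn(4, state_dim)

h_cell = cell(u, h)
# Same update written out: one affine layer over input and hidden state, then tanh
h_manual = torch.tanh(
    u @ cell.weight_ih.T + cell.bias_ih + h @ cell.weight_hh.T + cell.bias_hh
)
print(torch.allclose(h_cell, h_manual, atol=1e-6))  # True
```

There is no slot for extra dense layers between one state and the next, which is why a hand-written loop around your own network fits this architecture more naturally.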

Since no hidden “state” is passed from one time step to the next, only the output fed back as input, I wonder whether I can simply define a feedforward network and, during training, run it several times (once per time step), computing the loss over the complete time series.
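A quick way to check that such a loop really trains as a recurrent network: autograd records every call, so a loss on the last step alone still produces a gradient with respect to the initial state X0. A sketch with arbitrary sizes and random inputs:

```python
import torch
import torch.nn as nn

torch.manual_seed(0)
input_dim, state_dim, T = 3, 2, 5
mlp = nn.Sequential(
    nn.Linear(input_dim + state_dim, 16), nn.Tanh(),
    nn.Linear(16, state_dim),
)

x0 = torch.zeros(1, state_dim, requires_grad=True)  # track gradients w.r.t. X0
inputs = torch.randn(T, 1, input_dim)

x = x0
for t in range(T):
    x = mlp(torch.cat([inputs[t], x], dim=-1))  # same weights reused at every step

# Loss on the final output only: its gradient must travel back through every fed-back output
x.sum().backward()
print(x0.grad)  # nonzero: the recorded graph links step T back to the initial state
```

For very long series, calling `x = x.detach()` every k steps is one optional way to truncate the backward pass (truncated backpropagation through time); that is a variation, not something the architecture requires.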

Thank you for your help.