I have a network with a single linear layer. After one forward pass, how can I check whether the weight of that linear layer is in the computational graph? As I understand it, a tensor's .grad_fn being None means the tensor is not in the computation graph, but mod.linear.weight.grad_fn is None even after the forward pass. @ptrblck

Hi @shivam_singh,

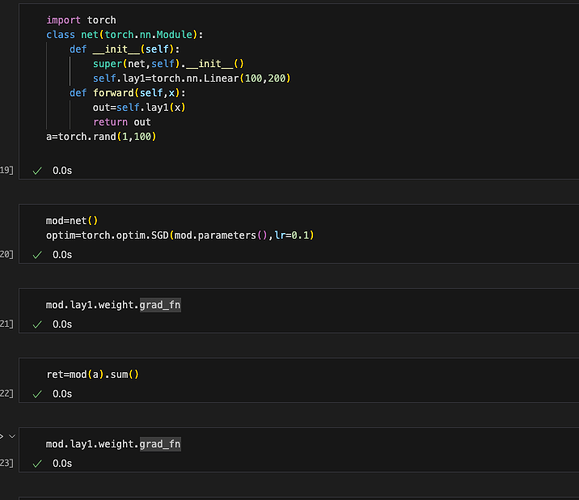

To populate the .grad attribute of a weight, you first need to compute a loss value from your model's output and call backward() on it, e.g.,

x = torch.randn(4, 100)  # batch_size=4

y = mod(x)  # compute output

loss = torch.mean(y)  # take the mean over all outputs in the batch

loss.backward()  # compute gradients (and populate the .grad attributes)

Then check whether the gradient was populated on the layer's weight,

print(mod.lay1.weight.grad)
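For a self-contained version of the steps above, here is a minimal sketch. The `Net` class, its single layer `lay1`, and the layer sizes are assumptions for illustration, since the original model isn't shown in the thread.

```python
import torch
import torch.nn as nn


class Net(nn.Module):
    # Hypothetical stand-in for the model in the question: one linear layer.
    def __init__(self):
        super().__init__()
        self.lay1 = nn.Linear(100, 10)  # sizes are assumed

    def forward(self, x):
        return self.lay1(x)


mod = Net()
x = torch.randn(4, 100)  # batch_size=4

grad_before = mod.lay1.weight.grad  # None: no backward pass has run yet

y = mod(x)            # forward pass
loss = torch.mean(y)  # reduce the output to a scalar
loss.backward()       # backward pass populates .grad on the parameters

grad_after = mod.lay1.weight.grad  # a tensor with the same shape as the weight
print(grad_before is None, grad_after.shape == mod.lay1.weight.shape)
```

If `.grad` is still None after `backward()`, the weight most likely did not contribute to the loss.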

The grad_fn of a tensor is initialized as None, and it is only set for tensors that are the result of an operation. The weight itself is not the result of one, so its grad_fn stays None.


In addition to @AlphaBetaGamma96’s answer: parameters are leaf variables and will thus not get a grad_fn assigned to them since they were explicitly created. Intermediate tensors will set their grad_fn during the computation graph creation.
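To make the leaf vs. intermediate distinction concrete, and to address the original question more directly, here is a rough sketch. The layer and its sizes are assumptions, and `reaches` is a hypothetical helper, not a PyTorch function. It walks the graph backwards from the output's grad_fn through `next_functions` and looks for the AccumulateGrad node that holds the parameter in its `variable` attribute, which is an autograd implementation detail rather than a documented contract.

```python
import torch
import torch.nn as nn

lin = nn.Linear(100, 10)  # hypothetical layer; sizes are assumed
x = torch.randn(4, 100)
y = lin(x)

# The parameter is a leaf: it was created directly, so it has no grad_fn.
weight_is_leaf = lin.weight.is_leaf
weight_grad_fn = lin.weight.grad_fn
# The output is an intermediate tensor: its grad_fn was set in the forward pass.
output_grad_fn = y.grad_fn


def reaches(root_fn, param):
    """Return True if walking the graph from root_fn reaches param as a leaf."""
    stack, seen = [root_fn], set()
    while stack:
        fn = stack.pop()
        if fn is None or fn in seen:
            continue
        seen.add(fn)
        # AccumulateGrad nodes hold the leaf tensor they accumulate into.
        if getattr(fn, "variable", None) is param:
            return True
        stack.extend(next_fn for next_fn, _ in fn.next_functions)
    return False


in_graph = reaches(y.grad_fn, lin.weight)

# A parameter that was never used in the forward pass should not be found.
unrelated = nn.Parameter(torch.randn(3))
unrelated_in_graph = reaches(y.grad_fn, unrelated)

print(weight_is_leaf, weight_grad_fn, output_grad_fn, in_graph, unrelated_in_graph)
```

In practice, checking `.grad` after `backward()` is usually simpler. The graph walk is mainly useful when you want to inspect the graph without running a backward pass.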
