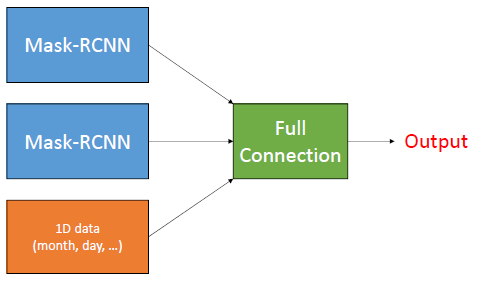

I would like to build an architecture like the one in the attached image using detectron2, but I don't know how. Could anyone explain how to do it?

The image doesn’t give much information.

Would you like to flatten all outputs of the models and concatenate them with the 1D data tensor before feeding the result into the fully-connected layer?

Also, which predictions from the MaskRCNN models would you like to use?
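The flatten-and-concatenate option can be sketched in PyTorch roughly as follows. This is a minimal sketch under stated assumptions: `FusionHead` is a hypothetical name, and the random tensors are placeholders for fixed-size model outputs. detectron2's Mask R-CNN actually returns a variable number of detected instances per image, so its predictions would first have to be converted into a fixed-size tensor (that conversion is not shown here).

```python
import torch
import torch.nn as nn


class FusionHead(nn.Module):
    """Hypothetical fusion head: flattens several feature tensors, appends a
    1D auxiliary tensor, and feeds the result into one fully-connected layer."""

    def __init__(self, in_features: int, out_features: int):
        super().__init__()
        self.fc = nn.Linear(in_features, out_features)

    def forward(self, model_outputs, extra):
        # Flatten every per-model output to [batch, -1] so they can be joined.
        flat = [out.flatten(start_dim=1) for out in model_outputs]
        # Append the 1D auxiliary data (shape [batch, num_extra]) along dim 1.
        x = torch.cat(flat + [extra], dim=1)
        return self.fc(x)


batch = 2
out_a = torch.randn(batch, 1, 8, 8)   # placeholder for model A's (fixed-size) output
out_b = torch.randn(batch, 1, 8, 8)   # placeholder for model B's (fixed-size) output
extra = torch.randn(batch, 4)         # placeholder 1D data tensor

in_features = 1 * 8 * 8 * 2 + 4       # 64 + 64 + 4 = 132
head = FusionHead(in_features, out_features=10)
y = head([out_a, out_b], extra)
print(y.shape)  # torch.Size([2, 10])
```

The only hard requirement is that every tensor shares the batch dimension; `in_features` must match the total flattened size, so the head is tied to one input resolution.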

I would like to use date and time data as input, not just image data.

I would like to do semantic or instance segmentation, so the output should also be per pixel (object detected or not at each pixel).

Is it possible?

I don't care about the details of the network.
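One way to feed date and time data into a network is to turn each timestamp into a small 1D float tensor. The sketch below assumes a cyclical (sin/cos) encoding of hour of day and day of year; this is one common choice picked for illustration, not something specified in this thread, and `encode_datetime` is a hypothetical helper.

```python
import math
from datetime import datetime

import torch


def encode_datetime(ts: datetime) -> torch.Tensor:
    """Hypothetical encoding of a timestamp as a 1D float tensor of length 4.

    Periodic quantities (hour of day, day of year) are mapped onto the unit
    circle with sin/cos so that e.g. 23:00 and 00:00 end up close together.
    Leap years are ignored (the year is treated as 365 days).
    """
    hour = ts.hour + ts.minute / 60.0
    day_of_year = ts.timetuple().tm_yday          # 1..366
    hour_angle = 2 * math.pi * hour / 24.0
    day_angle = 2 * math.pi * (day_of_year - 1) / 365.0
    return torch.tensor(
        [math.sin(hour_angle), math.cos(hour_angle),
         math.sin(day_angle), math.cos(day_angle)],
        dtype=torch.float32,
    )


# A batch of timestamps becomes a tensor of shape [batch, 4].
stamps = [datetime(2021, 1, 1, 0, 0), datetime(2021, 7, 1, 18, 30)]
extra = torch.stack([encode_datetime(t) for t in stamps])
print(extra.shape)  # torch.Size([2, 4])
```

The resulting `[batch, 4]` tensor can then be concatenated with the flattened image-model outputs like any other 1D input.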

The network details are actually the most important part if you want some ideas.

Generally, it’s possible to combine multiple inputs, but without a proper description, I cannot give you examples.

E.g., your MaskRCNN models would already give you predictions, while the image seems to feed (some of) these predictions, together with an additional input, into a fully-connected layer. What should this fully-connected layer return?

I assume that the outputs of the MaskRCNN models are the probability of the object at each pixel, and that this fully-connected layer also returns a probability for each pixel (a 2D map).
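Under that assumption, a head that fuses per-pixel probability maps from several models with a 1D date/time vector and returns one probability per pixel might look like the sketch below. `PixelFusionHead` is a hypothetical name, the inputs are random placeholders, and converting real Mask R-CNN predictions into fixed-size probability maps is assumed to happen beforehand.

```python
import torch
import torch.nn as nn


class PixelFusionHead(nn.Module):
    """Hypothetical head: fuses per-pixel probability maps from several models
    with a 1D auxiliary vector and predicts one probability per pixel."""

    def __init__(self, num_maps: int, height: int, width: int, num_extra: int):
        super().__init__()
        self.height, self.width = height, width
        in_features = num_maps * height * width + num_extra
        # One output unit per pixel.
        self.fc = nn.Linear(in_features, height * width)

    def forward(self, prob_maps: torch.Tensor, extra: torch.Tensor) -> torch.Tensor:
        # prob_maps: [batch, num_maps, H, W]; extra: [batch, num_extra]
        x = torch.cat([prob_maps.flatten(start_dim=1), extra], dim=1)
        logits = self.fc(x)
        # Sigmoid gives an independent "object present" probability per pixel,
        # reshaped back into a 2D map per sample.
        return torch.sigmoid(logits).view(-1, self.height, self.width)


batch, H, W = 2, 16, 16
maps = torch.rand(batch, 2, H, W)   # placeholder probability maps from two models
extra = torch.randn(batch, 4)       # placeholder date/time features
head = PixelFusionHead(num_maps=2, height=H, width=W, num_extra=4)
out = head(maps, extra)
print(out.shape)  # torch.Size([2, 16, 16])
```

Note that a fully-connected layer mapping every pixel to every pixel grows quadratically with resolution: at 256x256 with two input maps it would need roughly 8.6 billion weights. One common alternative is to broadcast the 1D features as constant extra channels and use convolutions instead.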