Dear community,

I need to compute the (differentiable) Jacobian matrix of y = f(z), where y has shape (B, 3, 128, 128), i.e. a batch of images, and z has shape (B, 64), i.e. a batch of latent vectors.

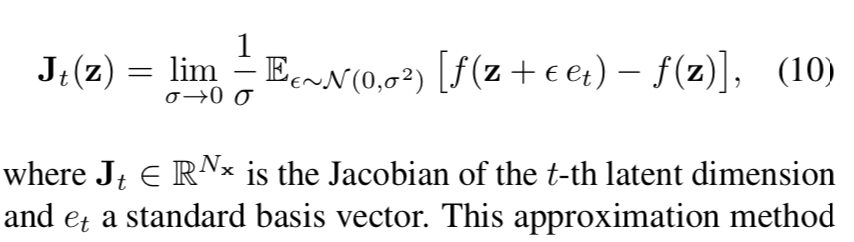

Computing the Jacobian matrix with PyTorch's functional Jacobian, `torch.autograd.functional.jacobian` (see "Automatic differentiation package - torch.autograd" in the PyTorch 2.1 documentation), is too slow. Thus, I am exploring the finite difference method (see "Finite difference" on Wikipedia), which gives an approximation of the Jacobian. My implementation for B=1 is:

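    import torch

    def get_jacobian(net, z, x):
        # Step size: square root of machine epsilon, the usual
        # choice for forward differences.
        eps = torch.finfo(x.dtype).eps
        delta = eps ** 0.5

        x = x.view(x.size(0), -1)   # flatten the image: (1, m)
        m = x.size(1)               # number of outputs (3 * 128 * 128)
        n = z.size(1)               # number of latent dimensions (64)

        J = torch.zeros((m, n), device=x.device, dtype=x.dtype)
        I = torch.eye(n, device=x.device, dtype=z.dtype)

        for j in range(n):
            # Perturb the j-th latent coordinate and take a forward difference.
            x_pert = net(z + delta * I[:, j]).view(x.size(0), -1)
            J[:, j] = ((x_pert - x) / delta).squeeze(0)

        return J

    x_fake = mynetfunction(z)
    J = get_jacobian(mynetfunction, z, x_fake)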

However, this implementation requires a lot of memory. Is there a way to reduce its memory footprint?
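
One possible direction, sketched here under assumptions that are not stated above: evaluate several perturbed latents in one batched forward pass, and build the autograd graph only when the Jacobian really has to be differentiable. The names `fd_jacobian`, `chunk` and `differentiable`, as well as the default `delta=1e-3`, are hypothetical. The sketch also assumes that `net` accepts a batch of latents and treats each row independently (for example, no batch-statistics layers in training mode). The toy linear `net` at the bottom only serves to check the result.

```python
import torch

def fd_jacobian(net, z, x=None, delta=1e-3, chunk=16, differentiable=True):
    """Forward-difference Jacobian of net at one latent z of shape (1, n).

    Returns a tensor of shape (m, n), where m is the flattened output size.
    Hypothetical helper: a sketch, not a tuned implementation.
    """
    n = z.size(1)
    # Record the autograd graph only if the caller needs to backprop through J.
    with torch.set_grad_enabled(differentiable):
        if x is None:
            x = net(z)
        x = x.reshape(1, -1)                                  # (1, m)
        eye = torch.eye(n, device=z.device, dtype=z.dtype)
        rows = []
        for start in range(0, n, chunk):
            dirs = eye[start:start + chunk]                   # (c, n) unit directions
            out = net(z + delta * dirs)                       # one batched forward pass
            out = out.reshape(dirs.size(0), -1)               # (c, m)
            rows.append((out - x) / delta)                    # forward differences
        return torch.cat(rows, dim=0).T                       # (m, n)

# Toy check with a linear map, whose exact Jacobian is W itself.
torch.manual_seed(0)
W = torch.randn(5, 3, dtype=torch.float64)
net = lambda latent: latent @ W.T                             # (c, 3) -> (c, 5)
z = torch.randn(1, 3, dtype=torch.float64, requires_grad=True)

J = fd_jacobian(net, z, chunk=2)
J_detached = fd_jacobian(net, z, chunk=2, differentiable=False)
```

With `differentiable=False` no graph is kept, so `chunk` bounds how many perturbed images are alive at once. With `differentiable=True` every perturbed forward pass stays in the graph until the backward call, so total memory still grows with the number of latent dimensions; in that case gradient checkpointing (`torch.utils.checkpoint`) may be worth trying, at the cost of extra compute.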