I run the following program:

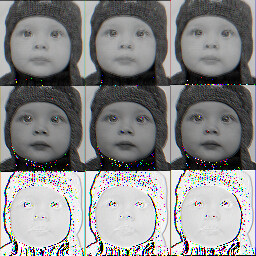

I read a 3-channel picture and pass it through torch::nn::conv2d(3,3,3).pad(1).stride(1), then I got these results:

code:

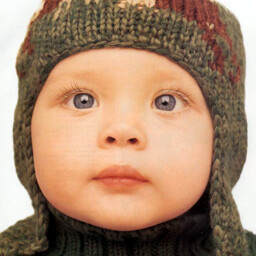

cv::Mat img = cv::imread("babyx2.png", 1);

torch::Tensor img_tensor = torch::from_blob(img.data, { img.rows, img.cols, 3 }, torch::kByte);

img_tensor = img_tensor.permute({ 2, 0, 1 });

img_tensor = img_tensor.unsqueeze(0);

img_tensor = img_tensor.to(torch::kFloat32);

torch::Tensor result = C1(img_tensor); //C1: torch::nn::Conv2d(torch::nn::Conv2dOptions(3, 3, 5).padding(1))
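A note on shapes, since the text above mentions a 3x3 kernel while the comment on C1 shows a 5x5 kernel: the two give different output sizes. The sketch below applies the standard output-size formula for a convolution with dilation 1. `conv_out_size` is a hypothetical helper written for illustration and is not part of libtorch.

```cpp
#include <cassert>

// Output length along one spatial axis of a 2-D convolution (dilation 1):
//   floor((in + 2 * padding - kernel) / stride) + 1
// conv_out_size is a hypothetical helper, not a libtorch function.
inline int conv_out_size(int in, int kernel, int padding, int stride) {
    // The padded input must be at least as large as the kernel.
    assert(stride > 0 && in + 2 * padding >= kernel);
    // Integer division equals floor here because both operands are non-negative.
    return (in + 2 * padding - kernel) / stride + 1;
}
```

For example, with a 256-pixel axis, `conv_out_size(256, 3, 1, 1)` keeps the size at 256, while `conv_out_size(256, 5, 1, 1)` shrinks it to 254, so the result tensor may not have the same height and width as the input image.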

Then I convert the result back to an image with the following function:

auto ToCvImage(at::Tensor tensor)

{

int width = tensor.sizes()[0];

int height = tensor.sizes()[1];

//auto sizes = tensor.sizes();

try

{

cv::Mat output_mat(cv::Size{ height, width }, CV_8UC3, tensor.data_ptr<uchar>());

return output_mat.clone();

}

catch (const c10::Error& e)

{

std::cout << "an error has occurred: " << e.msg() << std::endl;

}

return cv::Mat(height, width, CV_8UC3);

}
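Some observations that may explain the output. A Conv2d result is a float tensor laid out as [batch, channels, height, width], so `tensor.sizes()[0]` and `tensor.sizes()[1]` are the batch size and channel count rather than the image dimensions. `CV_8UC3`, in contrast, expects 8-bit values interleaved as height x width x 3. The library-free sketch below shows the layout and type conversion that would be needed in between. `chw_float_to_hwc_u8` is a hypothetical name, and clamping to 0..255 is an assumption about how the values should be mapped.

```cpp
#include <algorithm>
#include <cassert>
#include <cmath>
#include <cstddef>
#include <cstdint>
#include <vector>

// Hypothetical helper: convert a planar float image (3 x H x W) into an
// interleaved 8-bit image (H x W x 3), the layout a CV_8UC3 matrix uses.
std::vector<std::uint8_t> chw_float_to_hwc_u8(const std::vector<float>& chw,
                                              std::size_t height,
                                              std::size_t width) {
    const std::size_t channels = 3;
    assert(chw.size() == channels * height * width);

    std::vector<std::uint8_t> hwc(chw.size());
    for (std::size_t c = 0; c < channels; ++c) {
        for (std::size_t y = 0; y < height; ++y) {
            for (std::size_t x = 0; x < width; ++x) {
                // Planar index: channel plane first, then row, then column.
                float v = chw[(c * height + y) * width + x];
                // Assumption: values outside 0..255 are clamped, not rescaled.
                v = std::min(255.0f, std::max(0.0f, v));
                // Interleaved index: pixel first, then channel.
                hwc[(y * width + x) * channels + c] =
                    static_cast<std::uint8_t>(std::lround(v));
            }
        }
    }
    return hwc;
}
```

In libtorch the same steps would presumably be `squeeze(0)`, `permute({ 1, 2, 0 })`, `clamp(0, 255)`, `to(torch::kByte)` and `contiguous()` before wrapping the data in a `cv::Mat` with rows taken from dimension 0 and columns from dimension 1. Note also that a freshly constructed Conv2d has random weights, so even a correctly converted result is not expected to resemble the input picture.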

What happened? I don't think the resulting picture is right. How can I fix this? Any help is appreciated.