Hello everyone,

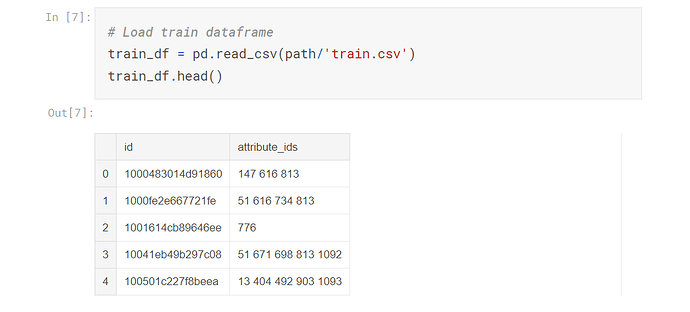

I want to create a custom multi-label dataset class. I am reading the data from a CSV file.

```python
import os

import cv2
from torch.utils.data import Dataset

class MyDataset(Dataset):
    def __init__(self, df_data, data_dir='./', img_ext='.png', transform=None):
        super().__init__()
        self.df = df_data.values        # rows of (image name, label)
        self.data_dir = data_dir
        self.img_ext = img_ext
        self.transform = transform

    def __len__(self):
        return len(self.df)

    def __getitem__(self, index):
        img_name, label = self.df[index]
        img_path = os.path.join(self.data_dir, img_name + self.img_ext)
        image = cv2.imread(img_path)    # NumPy array (H, W, C) in BGR order
        if self.transform is not None:
            image = self.transform(image)
        return image, label
```

How can I implement multi-label targets in this class?

If you would like to use nn.BCEWithLogitsLoss (or nn.BCELoss) for the multi-label classification task, you would have to create a multi-hot encoded target.

Assuming label contains the class indices, you could use the following code:

```python
target = torch.zeros(nb_classes)
target[label] = 1.
```
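A minimal, self-contained sketch of this multi-hot approach, assuming `label` is a list of integer class indices and a small hypothetical `nb_classes = 5`. It builds the target vector and passes it to `nn.BCEWithLogitsLoss` together with random logits that stand in for a model's output.

```python
import torch
import torch.nn as nn

nb_classes = 5        # hypothetical number of classes
label = [0, 3]        # class indices present in one sample

# Multi-hot target: 1.0 at each listed index, 0.0 elsewhere
target = torch.zeros(nb_classes)
target[label] = 1.
print(target)  # tensor([1., 0., 0., 1., 0.])

# BCEWithLogitsLoss expects raw logits and float targets of the same shape
criterion = nn.BCEWithLogitsLoss()
logits = torch.randn(1, nb_classes)            # stand-in for model output, batch of 1
loss = criterion(logits, target.unsqueeze(0))  # add batch dim to match logits
print(loss.item())
```

Each class is treated as an independent binary decision, which is why the target is a float vector rather than a single class index.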


The code threw an error in the training function.

```
TypeError: new(): invalid data type 'str'
```

```python
nb_classes = (list(range(1103)))

class MyDataset(Dataset):
    def __init__(self, df_data, data_dir='./', img_ext='.png', transform=None):
        super().__init__()
        self.df = df_data.values
        self.data_dir = data_dir
        self.img_ext = img_ext
        self.transform = transform

    def __len__(self):
        return len(self.df)

    def __getitem__(self, index):
        img_name, label = self.df[index]
        img_path = os.path.join(self.data_dir, img_name + self.img_ext)
        image = cv2.imread(img_path)
        target = torch.zeros(nb_classes)
        target[label] = 1.
        if self.transform is not None:
            image = self.transform(image)
        return image, target
```

Training function:

```python
total_step = len(loader_train)
for epoch in range(num_epochs):
    for i, (images, labels) in enumerate(loader_train):
        images, labels = Variable(images), Variable(labels)
        images = images.to(device)
        labels = labels.to(device)

        # Forward pass
        outputs = resNet(images)
        loss = criterion(outputs, labels)

        # Backward and optimize
        optimizer.zero_grad()
        loss.backward()
        optimizer.step()

        if (i+1) % 100 == 0:
            print('Epoch [{}/{}], Step [{}/{}], Loss: {:.4f}'
                  .format(epoch+1, num_epochs, i+1, total_step, loss.item()))
```
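Since PyTorch 0.4, the `Variable` wrapper is no longer needed because tensors track gradients themselves. A minimal sketch of the same loop on dummy data, assuming `nn.BCEWithLogitsLoss` as the criterion and a tiny linear model standing in for `resNet`; the data, model, and hyperparameters are all hypothetical.

```python
import torch
import torch.nn as nn
from torch.utils.data import DataLoader, TensorDataset

torch.manual_seed(0)
device = torch.device("cuda" if torch.cuda.is_available() else "cpu")

nb_classes = 10
# Hypothetical data: 64 flattened "images" with 32 features and multi-hot targets
images = torch.randn(64, 32)
targets = (torch.rand(64, nb_classes) > 0.8).float()
loader_train = DataLoader(TensorDataset(images, targets), batch_size=16, shuffle=True)

model = nn.Linear(32, nb_classes).to(device)   # stand-in for resNet
criterion = nn.BCEWithLogitsLoss()
optimizer = torch.optim.SGD(model.parameters(), lr=0.1)

num_epochs = 2
for epoch in range(num_epochs):
    for i, (x, y) in enumerate(loader_train):
        x, y = x.to(device), y.to(device)   # no Variable wrapper needed
        outputs = model(x)                  # (batch, nb_classes) raw logits
        loss = criterion(outputs, y)        # y: float multi-hot, same shape
        optimizer.zero_grad()
        loss.backward()
        optimizer.step()
    print(f"Epoch [{epoch + 1}/{num_epochs}], Loss: {loss.item():.4f}")
```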

`nb_classes` should be the number of classes, given as an int.

Could you change that and try to run your code again?

My mistake there. I thought `nb_classes` was a list of the class indices.

```python
nb_classes = 1103
```

However, the training function threw another error.

`IndexError: too many indices for tensor of dimension 1`

```
---------------------------------------------------------------------------
IndexError                                Traceback (most recent call last)
<ipython-input-77-d05ecce66b45> in <module>()
      1 total_step = len(loader_train)
      2 for epoch in range(num_epochs):
----> 3 for i, (images, labels) in enumerate(loader_train):
      4 images, labels = Variable(data), Variable(target)
      5

/opt/conda/lib/python3.6/site-packages/torch/utils/data/dataloader.py in __next__(self)
    613 if self.num_workers == 0: # same-process loading
    614 indices = next(self.sample_iter) # may raise StopIteration
--> 615 batch = self.collate_fn([self.dataset[i] for i in indices])
    616 if self.pin_memory:
    617 batch = pin_memory_batch(batch)

/opt/conda/lib/python3.6/site-packages/torch/utils/data/dataloader.py in <listcomp>(.0)
    613 if self.num_workers == 0: # same-process loading
    614 indices = next(self.sample_iter) # may raise StopIteration
--> 615 batch = self.collate_fn([self.dataset[i] for i in indices])
    616 if self.pin_memory:
    617 batch = pin_memory_batch(batch)

<ipython-input-69-ac5ff07bb12a> in __getitem__(self, index)
     17 #label = list(map(int, label.split(' ')))
     18 target = torch.zeros(nb_classes)
---> 19 target[label] = 1.
     20 if self.transform is not None:
     21 image = self.transform(image)

IndexError: too many indices for tensor of dimension 1
```

How did you create `label`?

Here is a small dummy example of how I suggested it should work:

```python
import torch

nb_classes = 5
label = [0, 3]
target = torch.zeros(nb_classes)
target[label] = 1
```
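Extending the dummy example: when `__getitem__` returns one multi-hot vector per sample, the `DataLoader`'s default collate function stacks them into a `(batch_size, nb_classes)` float tensor, which matches the shape of the model's logits for `nn.BCEWithLogitsLoss`. A sketch with a hypothetical in-memory dataset `DummyMultiLabel` that skips image loading:

```python
import torch
from torch.utils.data import Dataset, DataLoader

class DummyMultiLabel(Dataset):
    """Hypothetical dataset: returns a random 'image' and a multi-hot target."""
    def __init__(self, labels, nb_classes):
        self.labels = labels            # list of lists of class indices
        self.nb_classes = nb_classes

    def __len__(self):
        return len(self.labels)

    def __getitem__(self, index):
        image = torch.randn(3, 8, 8)    # stand-in for a loaded image
        target = torch.zeros(self.nb_classes)
        target[self.labels[index]] = 1.
        return image, target

ds = DummyMultiLabel([[0, 3], [1], [2, 4], [0, 1, 2]], nb_classes=5)
images, targets = next(iter(DataLoader(ds, batch_size=4)))
print(images.shape)   # torch.Size([4, 3, 8, 8])
print(targets)
# tensor([[1., 0., 0., 1., 0.],
#         [0., 1., 0., 0., 0.],
#         [0., 0., 1., 0., 1.],
#         [1., 1., 1., 0., 0.]])
```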


Oh, now I see how it works!

The label in the CSV is a string, so I now convert each label to a list of integer class indices.

```python
def __getitem__(self, index):
    img_name, label = self.df[index]
    img_path = os.path.join(self.data_dir, img_name + self.img_ext)
    image = cv2.imread(img_path)
    label = list(map(int, label.split(' ')))  # '1 40 56 813' --> [1, 40, 56, 813]
    target = torch.zeros(nb_classes)
    target[label] = 1.
    if self.transform is not None:
        image = self.transform(image)
    return image, target
```
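The same parsing step can be factored into a small helper so it can be checked outside the dataset. `to_multi_hot` below is a hypothetical name, not part of the code above. One design choice worth noting: `str.split()` with no argument is more forgiving than `split(' ')` when the CSV contains doubled or trailing spaces, because `split(' ')` would produce empty tokens and `int('')` raises a `ValueError`.

```python
import torch

def to_multi_hot(label_str, nb_classes):
    """Hypothetical helper: '1 40 56 813' -> float multi-hot tensor of length nb_classes."""
    # split() with no argument splits on any run of whitespace and ignores
    # leading/trailing spaces, unlike split(' ')
    indices = [int(tok) for tok in label_str.split()]
    target = torch.zeros(nb_classes)
    target[indices] = 1.
    return target

target = to_multi_hot('1 40 56 813', 1103)
print(target.shape)                          # torch.Size([1103])
print(target.nonzero().flatten().tolist())   # [1, 40, 56, 813]
print(to_multi_hot(' 2  7 ', 10))            # tensor([0., 0., 1., 0., 0., 0., 0., 1., 0., 0.])
```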

Thanks!