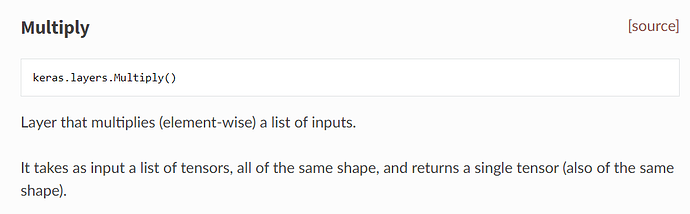

I am trying to find a function that computes the element-wise product of two feature maps and also supports backprop, like the layer in keras(

Hi,

PyTorch uses a mechanism called autograd to handle the backward pass automatically, so the only thing you need to take care of is the forward pass of your custom layer.
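As a quick illustration of that point, here is a minimal sketch (the tensor shapes and the names `a`, `b`, `c` are just assumptions for the example) showing that the plain `*` operator is already differentiable, so the gradient of the product with respect to each input comes for free:

```python
import torch

# Two hypothetical feature maps of the same shape (batch, channels, H, W)
a = torch.randn(1, 3, 4, 4, requires_grad=True)
b = torch.randn(1, 3, 4, 4, requires_grad=True)

c = a * b            # element-wise product; torch.mul(a, b) is equivalent
c.sum().backward()   # reduce to a scalar, then backpropagate

# d(sum(a*b))/da = b and d(sum(a*b))/db = a
print(torch.allclose(a.grad, b.detach()))  # True
print(torch.allclose(b.grad, a.detach()))  # True
```

Wrapping the product in an `nn.Module` is mostly a matter of convenience when you want it to sit alongside other layers.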

First you define a class that extends torch.nn.Module:

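    import torch
    import torch.nn as nn

    class Multiply(nn.Module):
        def __init__(self):
            super(Multiply, self).__init__()

        def forward(self, tensors):
            # Start from the first tensor so dtype and device match the inputs
            result = tensors[0]
            for t in tensors[1:]:
                # Out-of-place multiply, so autograd records every step
                result = result * t
            return result  # return the accumulated product, not the last input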

Your input must also require gradients; you can use the snippet below:

    x = torch.randn(3, 2, 2)
    x.requires_grad = True  # this makes sure the input `x` will support grad ops
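For reference, a small sketch of other ways to get a tensor that tracks gradients (the names `x1`, `x2`, `conv`, and `out` are only illustrative). It also shows that the output of a layer with trainable parameters requires grad even when the raw input does not, which is the usual situation for feature maps:

```python
import torch

x1 = torch.randn(3, 2, 2, requires_grad=True)   # set at creation
x2 = torch.randn(3, 2, 2).requires_grad_()      # in-place method form

# Outputs of layers with trainable parameters are tracked automatically
conv = torch.nn.Conv2d(1, 1, kernel_size=1)
out = conv(torch.randn(1, 1, 2, 2))             # input itself does not require grad

print(x1.requires_grad, x2.requires_grad, out.requires_grad)  # True True True
```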

Now you can call backward() on the output of this layer, or on any tensor computed from it, and autograd will calculate the grads for you.

The code below uses the layer's output to calculate the grad with respect to the multiplied inputs:

    multiply = Multiply()
    y = multiply([x, x])
    torch.sum(y).backward()

The reason I used torch.sum is that backward(), called without arguments, only works on scalar outputs.
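To make the scalar requirement concrete, here is a rough sketch that multiplies `x` by itself directly with `*` rather than through the layer, then checks the gradient against the analytic value `2 * x`. It also shows the alternative of passing an explicit `gradient` tensor to `backward` when the output is not a scalar:

```python
import torch

x = torch.randn(3, 2, 2, requires_grad=True)

# Option 1: reduce the output to a scalar before calling backward
y = x * x
y.sum().backward()
print(torch.allclose(x.grad, 2 * x.detach()))  # True

# Option 2: keep the non-scalar output and supply the upstream gradient
x.grad = None                      # clear the gradient accumulated above
y = x * x
y.backward(gradient=torch.ones_like(y))
print(torch.allclose(x.grad, 2 * x.detach()))  # True
```

Both options give the same result here because summing is equivalent to an upstream gradient of all ones.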

Here is the official tutorial on creating custom layers; it should help.

Bests,

Nik

If I use torch.multiply will the backpropagation be handled correctly? I am currently using it to multiply two variables (output of convolutions) inside the forward method of a custom module (extends nn.Module).
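As far as I know, `torch.multiply` is just an alias for `torch.mul`, which is a regular differentiable op, so autograd should handle it like any other. A quick way to check in a setup like the one described is sketched below; the module name `GatedConv` and the layer sizes are made up for the example:

```python
import torch
import torch.nn as nn

class GatedConv(nn.Module):  # hypothetical module, for illustration only
    def __init__(self):
        super().__init__()
        self.conv_a = nn.Conv2d(3, 8, kernel_size=3, padding=1)
        self.conv_b = nn.Conv2d(3, 8, kernel_size=3, padding=1)

    def forward(self, x):
        # Element-wise product of the two convolution outputs
        return torch.multiply(self.conv_a(x), self.conv_b(x))

model = GatedConv()
out = model(torch.randn(2, 3, 16, 16))
out.sum().backward()

# Every parameter of both convolutions should have received a gradient
for name, p in model.named_parameters():
    print(name, p.grad is not None)
```

If any parameter printed `False` here, that would suggest the graph was broken somewhere, for example by a `.detach()` call or by a round trip through NumPy.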