Hi, I am trying to study ALBERT, and I have a question: how do I implement cross-layer parameter sharing?

My code looks like this:

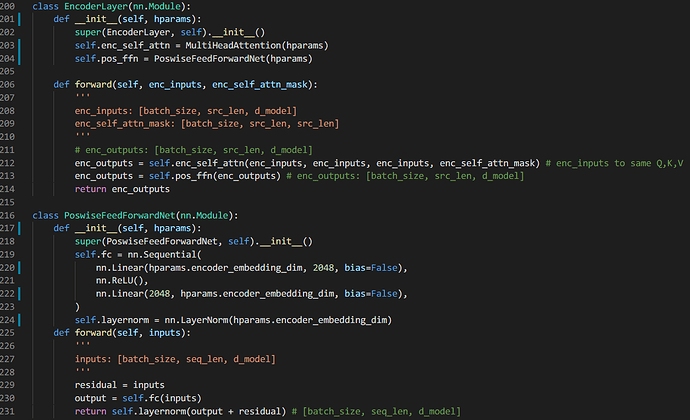

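    import torch.nn as nn

    class Encoder(nn.Module):
        def __init__(self, hparams):
            super(Encoder, self).__init__()
            # A single EncoderLayer instance, reused on every pass of the loop below
            self.encoder_layer = EncoderLayer(hparams)

        def forward(self, enc_inputs, enc_self_attn_mask):
            # The mask is passed in as an argument; it was undefined inside forward before
            for i in range(11):
                # enc_outputs: [batch_size, src_len, d_model]
                enc_inputs = self.encoder_layer(enc_inputs, enc_self_attn_mask)
            return enc_inputs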

I am not sure whether the parameters of enc_self_attn and pos_ffn are shared across the loop iterations.
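
One way to check this is to compare parameter counts. The sketch below is only an illustration under assumptions: `TinyLayer` is a hypothetical stand-in for `EncoderLayer` (a single linear layer, not real attention plus feed-forward), and `SharedEncoder` / `UnsharedEncoder` are names made up for the comparison. If one module instance is called repeatedly in a loop, the parameter count should stay at one layer's worth, while a list of separate instances should grow with depth.

```python
import torch
import torch.nn as nn


class TinyLayer(nn.Module):
    """Hypothetical stand-in for EncoderLayer (one linear layer only)."""

    def __init__(self, d_model):
        super().__init__()
        self.linear = nn.Linear(d_model, d_model)

    def forward(self, x):
        return torch.relu(self.linear(x))


class SharedEncoder(nn.Module):
    """One layer instance applied n_layers times (same pattern as the loop above)."""

    def __init__(self, d_model, n_layers):
        super().__init__()
        self.layer = TinyLayer(d_model)
        self.n_layers = n_layers

    def forward(self, x):
        for _ in range(self.n_layers):
            x = self.layer(x)  # same weights on every pass
        return x


class UnsharedEncoder(nn.Module):
    """n_layers separate instances, each with its own weights."""

    def __init__(self, d_model, n_layers):
        super().__init__()
        self.layers = nn.ModuleList([TinyLayer(d_model) for _ in range(n_layers)])

    def forward(self, x):
        for layer in self.layers:
            x = layer(x)
        return x


def count_params(module):
    # parameters() yields each registered tensor once
    return sum(p.numel() for p in module.parameters())


shared = SharedEncoder(d_model=8, n_layers=4)
unshared = UnsharedEncoder(d_model=8, n_layers=4)

shared_count = count_params(shared)      # one layer: 8*8 weights + 8 biases
unshared_count = count_params(unshared)  # four independent layers

# Gradients from all four passes accumulate into the single shared weight tensor
out = shared(torch.randn(2, 5, 8))
out.sum().backward()
grad_is_set = shared.layer.linear.weight.grad is not None

print(shared_count, unshared_count, grad_is_set)
```

If the shared count equals the count of a single layer, then the loop is reusing the same weights, which is what cross-layer parameter sharing means here.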

Thanks very much for your answer.