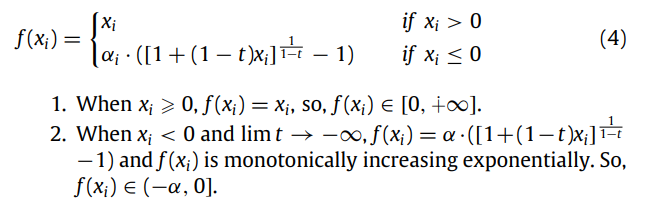

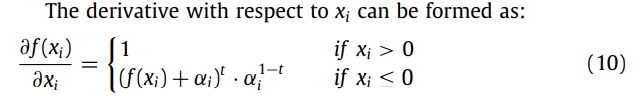

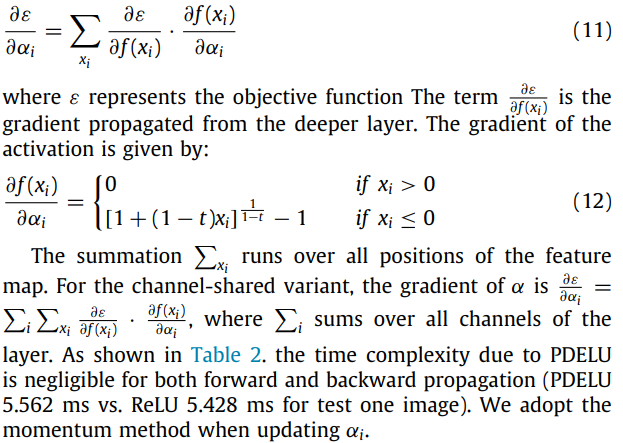

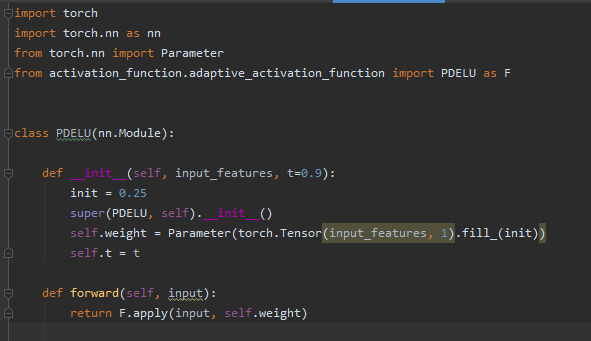

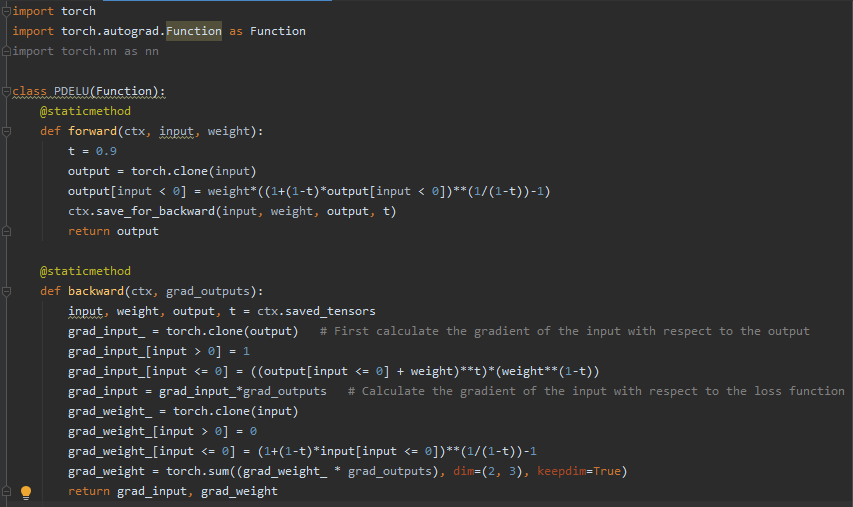

Hi, I'm implementing a paper that proposes a new activation function called the parametric deformable exponential linear unit (PDELU). I'm trying to implement this activation function in PyTorch, but I'm not clear about the dimensions of the input data: is it (B, C, H, W), (C, H, W), or (H, W)? When PyTorch calls the forward method of the PDELU class I defined, the forward computation goes wrong because the shapes of the input and the weight do not match.
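On the shape question: an activation module inside a network receives whatever the previous layer outputs. For `nn.Conv2d` that is (B, C, H, W), and for `nn.Linear` it is (B, C). `nn.PReLU` likewise treats dim 1 as the channel dimension. Below is a minimal sketch, assuming the channel dimension is dim 1, of reshaping a per-channel parameter of shape (C,) so that it broadcasts against the input instead of being sliced. `broadcast_per_channel` is a hypothetical helper name, not a PyTorch API, and an unbatched (C, H, W) tensor would need `x.unsqueeze(0)` first.

```python
import torch


def broadcast_per_channel(weight: torch.Tensor, x: torch.Tensor) -> torch.Tensor:
    """Hypothetical helper: reshape a (C,) parameter to (1, C, 1, ..., 1).

    Assumes x has at least 2 dims and its channel dimension is dim 1,
    as for the outputs of nn.Conv2d (B, C, H, W) or nn.Linear (B, C).
    """
    if x.dim() < 2:
        raise ValueError("expected input with a batch dim and a channel dim")
    shape = [1] * x.dim()  # e.g. [1, 1, 1, 1] for a 4-D input
    shape[1] = -1          # keep the C entries in the channel position
    return weight.view(shape)


x = torch.randn(4, 3, 8, 8)                # (B, C, H, W)
w = torch.arange(3, dtype=torch.float32)   # one value per channel, shape (C,)
w_b = broadcast_per_channel(w, x)
print(w_b.shape)        # torch.Size([1, 3, 1, 1])
print((x * w_b).shape)  # torch.Size([4, 3, 8, 8]); channel c is scaled by w[c]
```

Reshaping with `view` keeps the parameter connected to autograd, so gradients still flow back to every channel's entry.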

In addition, I want each input channel to have its own activation-function parameter, but my slicing operation seems to be incorrect. In the attached images I provide the formula of this activation function, the corresponding gradient expressions, and my own code. If possible, could you implement a correct version for me? Thank you.
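A possible per-channel PDELU module follows. It is a sketch, not the paper's reference code. It assumes the form f(x) = x for x > 0 and f(x) = α([1 + (1 − t)x]_+^(1/(1−t)) − 1) for x ≤ 0, with one learnable α per channel and a fixed hyperparameter t < 1. This form, the default t = 0.9, and the α initialisation of 1 are assumptions to check against the formula in the images. Every operation is differentiable, so autograd computes the gradients. The hand-derived gradient expressions are then only needed to verify the result, for example with `torch.autograd.gradcheck`.

```python
import torch
import torch.nn as nn


class PDELU(nn.Module):
    """Sketch of PDELU with one learnable alpha per channel.

    ASSUMED form (verify against the paper's formula):
        f(x) = x                                                  if x > 0
        f(x) = alpha * ([1 + (1 - t) * x]_+ ** (1 / (1 - t)) - 1)  if x <= 0
    where [z]_+ = max(z, 0) and t is a fixed hyperparameter with t < 1.
    """

    def __init__(self, num_channels: int, t: float = 0.9, alpha_init: float = 1.0):
        super().__init__()
        if t >= 1.0:
            raise ValueError("this sketch assumes t < 1")
        self.t = t
        # One learnable alpha per channel, shape (C,), like nn.PReLU(num_parameters=C).
        self.alpha = nn.Parameter(torch.full((num_channels,), alpha_init))

    def forward(self, x: torch.Tensor) -> torch.Tensor:
        # Channel dimension is assumed to be dim 1: (B, C), (B, C, L), (B, C, H, W), ...
        if x.dim() < 2 or x.size(1) != self.alpha.numel():
            raise ValueError(
                f"expected input of shape (B, {self.alpha.numel()}, ...), got {tuple(x.shape)}"
            )
        # Reshape alpha from (C,) to (1, C, 1, ..., 1) so it broadcasts; no slicing needed.
        shape = [1] * x.dim()
        shape[1] = -1
        alpha = self.alpha.view(shape)

        # Evaluate the negative branch only on min(x, 0), so entries with x > 0 cannot
        # produce huge or non-finite values that would leak NaNs through torch.where's backward.
        neg_x = torch.clamp(x, max=0.0)
        base = torch.clamp(1.0 + (1.0 - self.t) * neg_x, min=0.0)  # [1 + (1 - t) x]_+
        neg = alpha * (base.pow(1.0 / (1.0 - self.t)) - 1.0)
        return torch.where(x > 0, x, neg)
```

Usage: place `PDELU(num_channels=64)` after a 64-channel convolution. Because α is reshaped rather than sliced, the same module also accepts (B, C) or (B, C, L) inputs whose dim 1 has size C.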