I am looking for a way to handle high-dimensional sparse tensors.

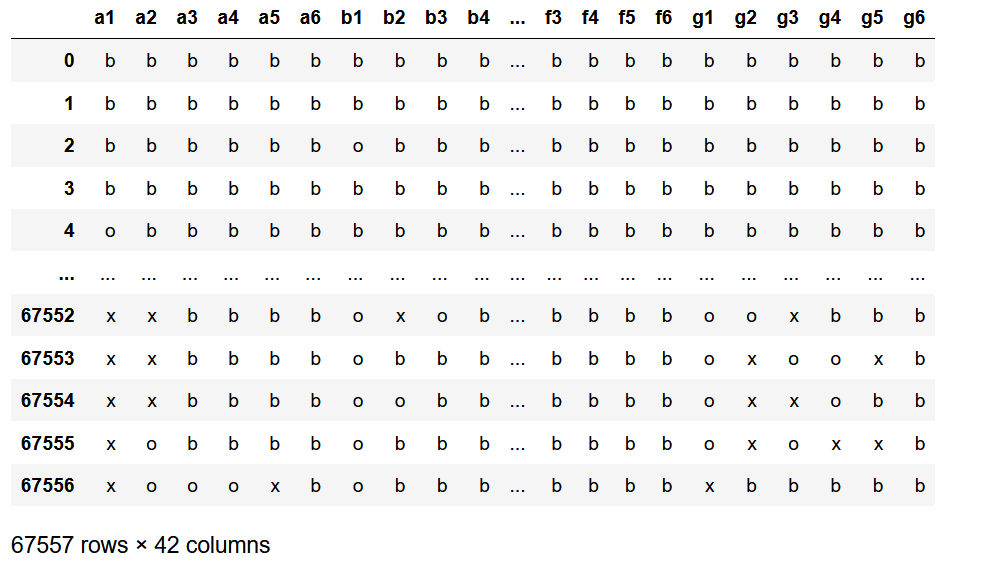

I downloaded this data (the Connect-4 dataset) from the UCI Machine Learning Repository. It is a tabular dataset of size 67557 × 42, and each entry takes one of three categorical values (x, o, b).

```python
import numpy as np
import torch
import pandas as pd
from ucimlrepo import fetch_ucirepo

dataset = fetch_ucirepo(id=26)
X = dataset.data.features
X
```

I want to convert this data into a sparse tensor as follows. First, I replace the labels with integer codes (pandas sorts the categories, so b, o, x become 0, 1, 2).

```python
X = X.astype('category')
cat_columns = X.select_dtypes(['category']).columns
X[cat_columns] = X[cat_columns].apply(lambda x: x.cat.codes)
X
```
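One detail worth checking in this step: `astype('category')` on a DataFrame infers and sorts the categories separately for each column, so the mapping b → 0, o → 1, x → 2 holds only if every column contains all three labels. The sketch below uses a hypothetical toy table (`toy`, with made-up columns `a1` and `a2`, not the real data) to show the difference, and pins the mapping with a shared `pd.CategoricalDtype`.

```python
import pandas as pd

# Hypothetical toy table: column "a2" never contains the label "o".
toy = pd.DataFrame({"a1": ["o", "x", "b"], "a2": ["b", "b", "x"]})

# Per-column inference: each column gets its own sorted category list,
# so "x" becomes code 2 in "a1" but code 1 in "a2".
inferred = toy.astype("category").apply(lambda col: col.cat.codes)

# A shared dtype fixes b -> 0, o -> 1, x -> 2 for every column.
shared = pd.CategoricalDtype(categories=["b", "o", "x"])
fixed = toy.astype(shared).apply(lambda col: col.cat.codes)

print(inferred)
print(fixed)
```

With the shared dtype, the same label always maps to the same coordinate value along every dimension of the tensor.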

Now X is an integer matrix. Next, I try to convert it into a 42-dimensional sparse tensor.

Each row of the table can be regarded as a 42-dimensional coordinate at which the tensor has the value 1; all other entries of the tensor are 0.
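A minimal sketch of this construction on a hypothetical 4 × 3 toy table (not the real data) shows the idea working when D is small. It passes the shape `(3,) * D` explicitly, assuming three possible codes per column, and calls `coalesce()`, which sums values at duplicate coordinates, so a row that appears twice ends up with the value 2 rather than 1.

```python
import numpy as np
import torch

# Hypothetical toy table: N = 4 rows, D = 3 columns, codes in {0, 1, 2}.
rows = np.array([[0, 1, 2],
                 [2, 2, 0],
                 [1, 0, 1],
                 [0, 1, 2]])  # the last row duplicates the first

N, D = rows.shape
indices = torch.tensor(rows.T, dtype=torch.int64)  # shape (D, N): one column per row
values = torch.ones(N)

# Each column of `indices` is one D-dimensional coordinate holding the value 1;
# coalesce() merges duplicate coordinates by summing their values.
t = torch.sparse_coo_tensor(indices, values, size=(3,) * D).coalesce()

print(t.shape)
print(t._nnz())                 # number of distinct stored coordinates
print(t.to_dense()[0, 1, 2])    # the duplicated row, summed by coalesce()
```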

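```python
# Transpose so that each column is one row of the table: shape (D, N) = (42, 67557)
X_np = np.transpose(X.to_numpy())
(D, N) = np.shape(X_np)
X_np = X_np.astype(np.int32)
X_th = torch.from_numpy(X_np)

# Put the value 1 at every coordinate given by a row of the table
X_torch = torch.sparse_coo_tensor(X_th, np.ones(N) )
```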

However, I got the following error:

```
RuntimeError                              Traceback (most recent call last)
in
----> 1 X_torch = torch.sparse_coo_tensor(X_th, np.ones(N) )

RuntimeError: numel: integer multiplication overflow
```

When the table has fewer features, torch.sparse_coo_tensor works this way. For example, if I only change fetch_ucirepo(id=26) to fetch_ucirepo(id=101) (the Tic-Tac-Toe dataset, with 9 features), it works.
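A quick arithmetic check suggests where the difference comes from, assuming the error is raised while PyTorch computes the number of elements of the tensor's logical (dense) shape as a signed 64-bit integer. With three codes per column, the inferred shape is (3, 3, ..., 3) with D entries, so that count is 3^D no matter how few entries are actually stored. The helper `dense_numel` is hypothetical, written only for this check.

```python
import math

INT64_MAX = 2**63 - 1  # largest value a signed 64-bit integer can hold

def dense_numel(D: int, codes_per_column: int = 3) -> int:
    """Element count of the logical shape (codes_per_column,) * D."""
    return math.prod((codes_per_column,) * D)

for name, D in [("Tic-Tac-Toe (id=101)", 9), ("Connect-4 (id=26)", 42)]:
    n = dense_numel(D)
    print(f"{name}: D={D}, 3**D={n}, fits in int64: {n <= INT64_MAX}")

# Largest number of 3-valued columns whose dense element count still fits.
max_D = 0
while dense_numel(max_D + 1) <= INT64_MAX:
    max_D += 1
print("max D:", max_D)
```

Under this assumption, reshaping does not help: flattening the 42 dimensions into, say, a 2-D sparse matrix of shape (3^21, 3^21) keeps the same element count and would presumably hit the same limit.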

I am confused because the number of entries actually stored in this sparse tensor is small (one per row), even though the tensor is high-dimensional. So why does this approach fail, and what is the alternative for handling high-dimensional sparse tensors?

Note: The UCI dataset above is only for illustration; I do not actually want to turn tabular data into a tensor. What I am looking for is a way to handle high-dimensional sparse tensors in PyTorch.
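One possible workaround, sketched here under the assumption that only a few operations are needed, is to skip torch.sparse_coo_tensor and keep the coordinates and values as two ordinary dense tensors (a hand-rolled COO format). No dense shape is ever declared, so 3^42 never has to be computed. The helpers `coalesce_coords` and `lookup` are hypothetical, not part of PyTorch; they are built from the standard calls `torch.unique(..., dim=1, return_inverse=True)` and `Tensor.index_add_`.

```python
import torch

def coalesce_coords(indices: torch.Tensor, values: torch.Tensor):
    """Merge duplicate coordinates (columns of `indices`) by summing their values."""
    uniq, inverse = torch.unique(indices, dim=1, return_inverse=True)
    summed = torch.zeros(uniq.shape[1], dtype=values.dtype)
    summed.index_add_(0, inverse, values)  # add each value into its unique slot
    return uniq, summed

def lookup(indices: torch.Tensor, values: torch.Tensor, coord: torch.Tensor) -> torch.Tensor:
    """Value stored at `coord` (a length-D vector), or 0 if it is absent."""
    match = (indices == coord.unsqueeze(1)).all(dim=0)
    return values[match].sum()

D, N = 42, 5
torch.manual_seed(0)
idx = torch.randint(0, 3, (D, N))          # N random coordinates in {0, 1, 2}^D
idx = torch.cat([idx, idx[:, :1]], dim=1)  # append a duplicate of the first one
vals = torch.ones(N + 1)

uidx, uvals = coalesce_coords(idx, vals)
print(uidx.shape)                      # distinct coordinates, each of length D
print(lookup(uidx, uvals, idx[:, 0]))  # value at the duplicated coordinate
```

The trade-off is that every other operation (products, reductions over dimensions, and so on) has to be written by hand in the same style, since PyTorch's sparse kernels are not involved.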