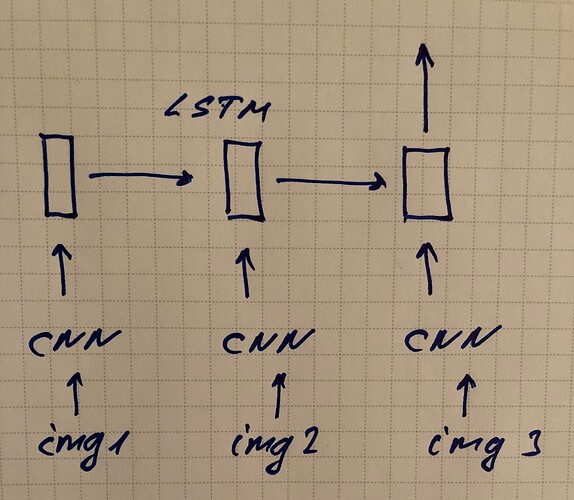

What is the right way to define a simple many-to-one LSTM that takes CNN features as input, and to train it in batches?

NOTE: both networks, the LSTM and the CNN, should be trained.

I’ve never tried it, and I’m probably a bit naive, but would this pseudocode work?

```python
# Pseudocode: MyCnnModel, MyLstmModel, dataset and init_hidden are placeholders
cnn = MyCnnModel()
lstm = MyLstmModel()

def forward(img_seq_batch, hidden):
    # Loop over each image in the sequences
    for img_batch in img_seq_batch:
        # Push batch of images through CNN
        encoded = cnn(img_batch)
        # Push CNN output through LSTM (just one time step)
        output, hidden = lstm(encoded, hidden)
    # Return the last output/hidden of the LSTM
    return output, hidden

# Go through each image sequence in the dataset
for img_seq_batch in dataset:
    # Initialize hidden state of LSTM
    hidden = init_hidden()
    output, hidden = forward(img_seq_batch, hidden)
    # Push last hidden state through additional layers
    # Calculate loss
    # Backprop
```
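For reference, here is a runnable sketch of the loop idea, assuming images of shape 1x8x8 stored as `(T, B, C, H, W)` so that iterating over the first dimension yields one batch of images per time step. `TinyCNN`, the layer sizes, the dummy data and the 3-class `head` are hypothetical stand-ins for `MyCnnModel`/`MyLstmModel`. Since `nn.LSTM` expects a sequence dimension, each step's features are unsqueezed to length 1, and a single optimizer over all parameters trains the CNN together with the LSTM.

```python
import torch
import torch.nn as nn

torch.manual_seed(0)

class TinyCNN(nn.Module):
    """Hypothetical stand-in for MyCnnModel: (B, 1, 8, 8) -> (B, 16)."""
    def __init__(self):
        super().__init__()
        self.net = nn.Sequential(
            nn.Conv2d(1, 4, kernel_size=3, padding=1),
            nn.ReLU(),
            nn.AdaptiveAvgPool2d(2),  # (B, 4, 2, 2)
            nn.Flatten(),             # (B, 16)
        )

    def forward(self, x):
        return self.net(x)

cnn = TinyCNN()
lstm = nn.LSTM(input_size=16, hidden_size=8, batch_first=True)
head = nn.Linear(8, 3)  # hypothetical 3-class classifier on the last hidden state
optimizer = torch.optim.Adam(
    list(cnn.parameters()) + list(lstm.parameters()) + list(head.parameters()), lr=1e-2
)

def forward(img_seq_batch):
    # img_seq_batch: (T, B, C, H, W); iterating yields one (B, C, H, W) batch per step
    hidden = None  # nn.LSTM uses zero (h_0, c_0) when no hidden state is given
    for img_batch in img_seq_batch:
        encoded = cnn(img_batch)                             # (B, 16)
        output, hidden = lstm(encoded.unsqueeze(1), hidden)  # one time step: (B, 1, 16)
    return output, hidden

# Dummy data: sequences of 5 images, batch of 2, labels in {0, 1, 2}
img_seq_batch = torch.randn(5, 2, 1, 8, 8)
labels = torch.tensor([0, 2])

output, (h_n, c_n) = forward(img_seq_batch)
logits = head(h_n[-1])  # last hidden state of the top layer: (B, 8) -> (B, 3)
loss = nn.functional.cross_entropy(logits, labels)
optimizer.zero_grad()
loss.backward()         # gradients reach both the LSTM and the CNN
optimizer.step()
```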

Yes, it should work and it was my first idea.

The question is: can we skip this loop and do it all in one pass? If not, I'll accept this answer.

Hm, not sure why the loop would be a problem. The heavy lifting is still done under the hood by PyTorch. I would also assume that when you pass a whole sequence to nn.LSTM, internally it also just loops over each item.
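Whatever `nn.LSTM` does internally (on CUDA it can use cuDNN's fused implementation), the result should be the same whether you feed the whole sequence at once or one step at a time while carrying the hidden state forward. A quick check with random features on CPU; the sizes are arbitrary:

```python
import torch
import torch.nn as nn

torch.manual_seed(0)
lstm = nn.LSTM(input_size=16, hidden_size=8, batch_first=True)
x = torch.randn(2, 5, 16)  # (batch, time, features)

# Whole sequence in a single call
out_full, (h_full, c_full) = lstm(x)

# One time step per call, carrying (h, c) from step to step
hidden = None
step_outputs = []
for t in range(x.size(1)):
    out_t, hidden = lstm(x[:, t:t + 1, :], hidden)  # (B, 1, 16) -> (B, 1, 8)
    step_outputs.append(out_t)
out_steps = torch.cat(step_outputs, dim=1)          # (B, T, 8)
h_steps, c_steps = hidden

same_output = torch.allclose(out_full, out_steps, atol=1e-6)
same_hidden = torch.allclose(h_full, h_steps, atol=1e-6)
```

So the loop is a matter of convenience and speed, not of correctness.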

Maybe another alternative – again, I haven’t tried it:

- Treat a sequence of images as a batch of images and push it through the CNN

- Treat the batch of encoded images again as a sequence and push it in one go through the LSTM (no loop required)
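For a single sequence, the two steps above could look like this minimal sketch. The CNN layers, the 1x8x8 image size and the sequence length T=5 are assumptions for illustration. The T images go through the CNN as one batch, and the resulting T feature vectors are fed to `nn.LSTM` as a sequence of length T with batch size 1, so there is no Python loop.

```python
import torch
import torch.nn as nn

torch.manual_seed(0)

# Hypothetical CNN encoder: (N, 1, 8, 8) -> (N, 16)
cnn = nn.Sequential(
    nn.Conv2d(1, 4, kernel_size=3, padding=1),
    nn.ReLU(),
    nn.AdaptiveAvgPool2d(2),
    nn.Flatten(),
)
lstm = nn.LSTM(input_size=16, hidden_size=8)  # default layout: (seq_len, batch, features)

seq = torch.randn(5, 1, 8, 8)  # one sequence of T=5 images, treated as a CNN batch
encoded = cnn(seq)             # (5, 16): one feature vector per image
output, (h_n, c_n) = lstm(encoded.unsqueeze(1))  # (5, 1, 16): seq_len=5, batch=1
last = output[-1]              # (1, 8): output at the final time step (many-to-one)
```

For a single-layer, unidirectional LSTM, `output[-1]` is the same tensor as `h_n[-1]`, so either can feed the final layers.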

No idea how to extend this to batches of image sequences, or whether that is possible at all.
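One way it might extend to batches: fold the time dimension into the batch dimension for the CNN, then unfold it again for the LSTM. This is a sketch, assuming every sequence in a batch has the same length T (variable lengths would need padding, e.g. with `nn.utils.rnn.pack_padded_sequence`); the `CNNLSTM` name, the layer sizes and the 3-class head are hypothetical. Wrapping both networks in one `nn.Module` means a single optimizer over `model.parameters()` trains the CNN and the LSTM together.

```python
import torch
import torch.nn as nn

class CNNLSTM(nn.Module):
    """Hypothetical many-to-one model: (B, T, 1, 8, 8) -> (B, num_classes)."""
    def __init__(self, feat_dim=16, hidden_size=8, num_classes=3):
        super().__init__()
        self.cnn = nn.Sequential(
            nn.Conv2d(1, 4, kernel_size=3, padding=1),
            nn.ReLU(),
            nn.AdaptiveAvgPool2d(2),  # (N, 4, 2, 2)
            nn.Flatten(),             # (N, 16), must equal feat_dim with these layers
        )
        self.lstm = nn.LSTM(feat_dim, hidden_size, batch_first=True)
        self.head = nn.Linear(hidden_size, num_classes)

    def forward(self, x):
        b, t = x.shape[:2]
        feats = self.cnn(x.reshape(b * t, *x.shape[2:]))  # all B*T images in one CNN pass
        feats = feats.reshape(b, t, -1)                   # back to (B, T, feat_dim)
        _, (h_n, _) = self.lstm(feats)                    # whole sequences in one LSTM call
        return self.head(h_n[-1])                         # last hidden state -> (B, classes)

torch.manual_seed(0)
model = CNNLSTM()
optimizer = torch.optim.Adam(model.parameters(), lr=1e-2)  # covers CNN, LSTM and head

x = torch.randn(4, 5, 1, 8, 8)  # dummy batch: 4 sequences of 5 images
y = torch.tensor([0, 1, 2, 1])
conv_before = model.cnn[0].weight.detach().clone()

logits = model(x)
loss = nn.functional.cross_entropy(logits, y)
optimizer.zero_grad()
loss.backward()
optimizer.step()
cnn_changed = not torch.equal(conv_before, model.cnn[0].weight)
```

The `reshape` calls only regroup the leading dimensions, so each image is still encoded independently; the LSTM then sees the features in their original time order.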

Thanks, I did it this way. It works fine.