Hello all,

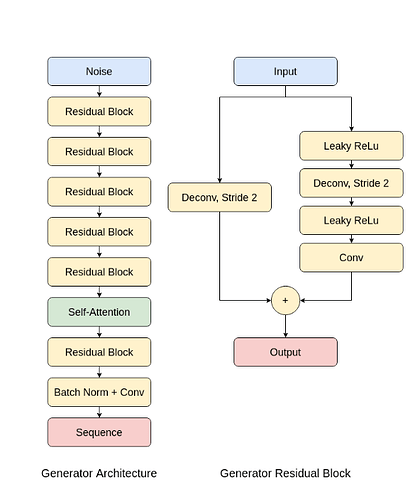

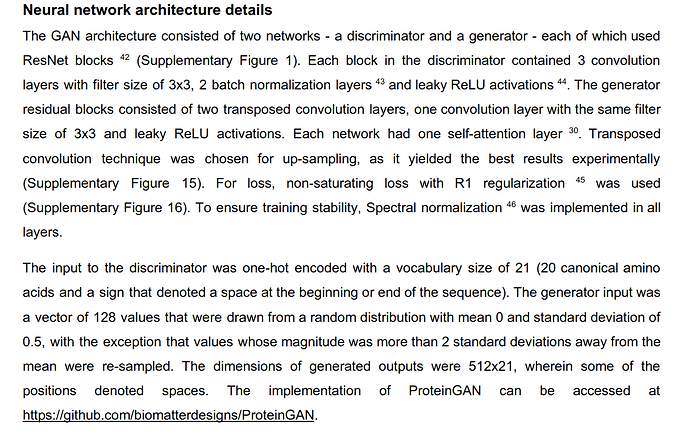

I am working on a ProteinGAN implementation in PyTorch. The generator takes an input of shape `[batch_size, z_dim]` and should output `[batch_size, 512, 21]`. The model structure is shown in the images above.

Based on that, I implemented the code below, but when I run it I get the output sizes shown below. I tried passing `stride=(2, 1)` so that the width would not increase, but then I get this error:

```
RuntimeError: output padding must be smaller than either stride or dilation, but got output_padding_height: 1 output_padding_width: 1 stride_height: 2 stride_width: 1 dilation_height: 1 dilation_width: 1
```
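The error message spells out the rule: each entry of `output_padding` must be smaller than the matching entry of `stride` (or `dilation`). A scalar `output_padding=1` is applied to both dimensions, so with `stride=(2, 1)` the width entry fails (1 is not smaller than 1). A minimal sketch, assuming the same `kernel_size=3` and `padding=1` used in `ResidualBlock`, passes `output_padding` as a per-dimension tuple instead; the channel counts and the 8 x 1 input are arbitrary illustrative values:

```python
import torch
import torch.nn as nn

# Assumed settings: kernel_size=3 and padding=1, as in ResidualBlock.
# output_padding is given per dimension so that each entry is smaller than
# the matching stride entry: (1, 0) for stride (2, 1).
deconv = nn.ConvTranspose2d(
    in_channels=16,
    out_channels=8,
    kernel_size=3,
    stride=(2, 1),
    padding=1,
    output_padding=(1, 0),
)

x = torch.randn(1, 16, 8, 1)  # [batch, channels, height, width]
y = deconv(x)
print(y.shape)  # height doubled (8 -> 16), width unchanged (1)
```

In `ResidualBlock`, both `deconv1` and `deconv2` would need the same tuple values so that the shortcut and residual outputs still have matching shapes when they are added.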

Output:

```
torch.Size([1, 512, 16, 16])
torch.Size([1, 256, 32, 32])
torch.Size([1, 128, 64, 64])
torch.Size([1, 64, 128, 128])
torch.Size([1, 32, 256, 256])
torch.Size([1, 16, 512, 512])
torch.Size([1, 21, 512, 512])  # it is supposed to be [21, 512]
```
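The 512 x 512 spatial size follows from the output-size formula in the `nn.ConvTranspose2d` documentation: `H_out = (H_in - 1) * stride - 2 * padding + dilation * (kernel_size - 1) + output_padding + 1`. With `kernel_size=3`, `stride=2`, `padding=1` and `output_padding=1` this reduces to `2 * H_in`, and because the scalar stride applies to height and width alike, six residual blocks take 8 x 8 to 512 x 512 (the stride-1, padding-1 `Conv2d` in the residual branch keeps the size). A small pure-Python sketch of that arithmetic, assuming the `cfg` values from the code:

```python
def conv_transpose_out(size, kernel_size=3, stride=2, padding=1,
                       output_padding=1, dilation=1):
    """Output length along one dimension for nn.ConvTranspose2d (formula from the PyTorch docs)."""
    return ((size - 1) * stride - 2 * padding
            + dilation * (kernel_size - 1) + output_padding + 1)


height, width = 8, 8  # cfg.HEIGHT, cfg.WIDTH
for block in range(6):  # one ResidualBlock per "R" in cfg.GENERATOR_LAYERS
    height = conv_transpose_out(height)
    width = conv_transpose_out(width)
    print(block + 1, height, width)
```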

Code:

```python
import torch
import torch.nn as nn
import torch.nn.functional as F


class ResidualBlock(nn.Module):
    """Upsampling residual block: transposed-conv shortcut plus a deconv -> conv branch."""

    def __init__(self, in_channels, out_channels, kernel_size=3, stride=2, sn_itrs=1) -> None:
        super().__init__()
        # Shortcut path: upsample and change the channel count in one step.
        self.deconv1 = nn.ConvTranspose2d(
            in_channels,
            out_channels,
            kernel_size,
            stride=stride,
            output_padding=1,
            padding=1,
        )
        # Residual path: upsample first, then change the channel count.
        self.deconv2 = nn.ConvTranspose2d(
            in_channels,
            in_channels,
            kernel_size,
            stride=stride,
            output_padding=1,
            padding=1,
        )
        self.conv = nn.Conv2d(
            in_channels,
            out_channels,
            kernel_size,
            stride=1,
            padding=1,
        )

    def shortcut(self, x):
        return self.deconv1(x)

    def residual(self, x):
        x = self.deconv2(F.leaky_relu(x))
        x = self.conv(F.leaky_relu(x))
        return x

    def forward(self, x):
        out = self.shortcut(x) + self.residual(x)
        print(out.shape)  # debug print that produces the shapes listed above
        return out


class Generator(nn.Module):
    def __init__(self, cfg) -> None:
        super().__init__()
        self.height = cfg.HEIGHT
        self.width = cfg.WIDTH
        self.hidden_dim = cfg.GF_DIM * (2 ** (len(cfg.GENERATOR_LAYERS) - 1))
        self.linear = nn.Linear(cfg.Z_DIM, self.height * self.width * self.hidden_dim)
        self.residuals = nn.Sequential(*self.make_layers(cfg.GENERATOR_LAYERS))
        # With six "R" layers the channels are halved six times: hidden_dim // 64.
        self.batch_norm = nn.BatchNorm2d(num_features=self.hidden_dim // 64)
        self.conv = nn.Conv2d(self.hidden_dim // 64, cfg.VOCAB_SIZE, 3, padding=1)

    def make_layers(self, layer_config):
        layers = []
        # Must match the channel count produced by view() in forward().
        in_channels = self.hidden_dim
        for x in layer_config:
            if x == "A":
                continue  # attention blocks are not implemented yet
            out_channels = in_channels // 2
            layers.append(ResidualBlock(in_channels, out_channels))
            in_channels = out_channels
        return layers

    def forward(self, x):
        x = self.linear(x)
        x = x.view((-1, self.hidden_dim, self.height, self.width))  # [batch, hidden_dim, H, W]
        x = self.residuals(x)
        x = F.leaky_relu(self.batch_norm(x))
        x = torch.tanh(self.conv(x))
        return x


class cfg:
    def __init__(self):
        self.VOCAB_SIZE = 21
        self.GENERATOR_LAYERS = ["R", "R", "R", "R", "R", "R"]  # I am not using attention at the moment
        self.HEIGHT = 8
        self.WIDTH = 8
        self.Z_DIM = 128
        self.GF_DIM = 32


if __name__ == "__main__":
    gen = Generator(cfg())
    print(gen(torch.rand(1, 128)).shape)
```
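A minimal sketch of one possible way to reach the `[batch_size, 512, 21]` target, assuming (hypothetically) that the generator starts from an 8 x 1 feature map (`WIDTH = 1`) and that every upsampling layer uses `stride=(2, 1)` with `output_padding=(1, 0)`, so only the length grows (8 -> 512 over six layers). The channel counts are arbitrary, and a plain `ConvTranspose2d` stack stands in for the residual blocks to keep it short:

```python
import torch
import torch.nn as nn

# Hypothetical settings: start from an 8 x 1 map and upsample only the height.
channels = [64, 32, 32, 16, 16, 16, 16]  # illustrative channel counts (6 layers)
layers = []
for c_in, c_out in zip(channels[:-1], channels[1:]):
    layers += [
        nn.ConvTranspose2d(c_in, c_out, kernel_size=3, stride=(2, 1),
                           padding=1, output_padding=(1, 0)),
        nn.LeakyReLU(),
    ]
upsample = nn.Sequential(*layers)
to_vocab = nn.Conv2d(channels[-1], 21, kernel_size=3, padding=1)  # 21 = VOCAB_SIZE

x = torch.randn(2, channels[0], 8, 1)      # [batch, channels, height=8, width=1]
h = upsample(x)                            # [2, 16, 512, 1]
logits = to_vocab(h)                       # [2, 21, 512, 1]
out = logits.squeeze(-1).permute(0, 2, 1)  # [2, 512, 21]
print(out.shape)
```

`squeeze(-1)` drops the width-1 axis and `permute(0, 2, 1)` moves the 21 vocabulary channels to the last dimension.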