Hello!

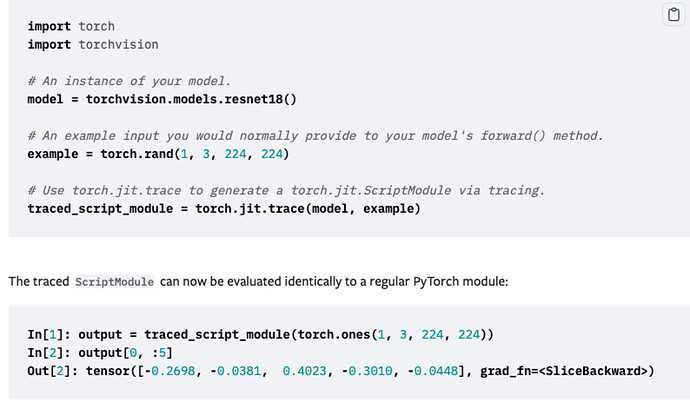

Following the official tutorial, I am trying to convert a PyTorch model to TorchScript, and I am worried about how to verify that the resulting TorchScript module is correct. When converting a model via tracing (resnet18 as an example), the official code uses an example input torch.rand(1, 3, 224, 224) to get a traced_script_module, and then feeds the module torch.ones(1, 3, 224, 224) to get an output tensor. However, I get different outputs each time I feed the same torch.ones(1, 3, 224, 224), apparently just because the random example inputs torch.rand(1, 3, 224, 224) differ. How can I make sure the results are correct when I feed real images?

thanks.

I cannot reproduce the issue using resnet18 as seen here:

import torch

import torchvision.models as models

model = models.resnet18()

x = torch.randn(1, 3, 224, 224)

model_traced = torch.jit.trace(model, x)

out = model(torch.ones(1, 3, 224, 224))

# Run the traced module here (not the eager model again) so the comparison is meaningful.

out_traced = model_traced(torch.ones(1, 3, 224, 224))

print((out - out_traced).abs().max())

> tensor(0., grad_fn=<MaxBackward1>)

If you are using a different model, make sure the traced model takes the same “code path” as the eager model (e.g. if the model uses data-dependent control flow, or dropout in training mode).
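To illustrate the "code path" point, here is a minimal sketch, not taken from any real model: the `Gate` module is a hypothetical toy with a data-dependent branch. Tracing records only the branch taken for the example input, so the traced module can silently disagree with eager mode, while `torch.jit.script` keeps the branch.

```python
import torch
import torch.nn as nn


class Gate(nn.Module):
    """Hypothetical toy module whose output depends on the sign of the input sum."""

    def forward(self, x):
        if x.sum() > 0:
            return x * 2
        return x + 10


module = Gate()

# Tracing with a positive input bakes the `x * 2` branch into the graph
# (PyTorch emits a TracerWarning about the tensor-to-bool conversion).
traced = torch.jit.trace(module, torch.ones(3))
# Scripting compiles the Python source, so the `if` is preserved.
scripted = torch.jit.script(module)

neg = -torch.ones(3)
eager_out = module(neg)      # takes the `x + 10` branch: [9., 9., 9.]
traced_out = traced(neg)     # still runs `x * 2`:         [-2., -2., -2.]
scripted_out = scripted(neg) # matches eager:               [9., 9., 9.]

print(eager_out, traced_out, scripted_out)
```

If a model has branches like this, scripting (or at least comparing traced and eager outputs on several different inputs) is probably the safer route.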

EDIT: the tracer should also raise a warning e.g. if dropout was used in training mode:

TracerWarning: Trace had nondeterministic nodes. Did you forget call .eval() on your model?
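A rough way to check a traced module against the eager model is sketched below. `TinyNet` is a hypothetical stand-in (assumed here only so the snippet runs quickly without torchvision); the same pattern should apply to resnet18. The model is put into eval mode first so that dropout is disabled and batchnorm uses its running statistics.

```python
import torch
import torch.nn as nn


class TinyNet(nn.Module):
    """Hypothetical small model with batchnorm and dropout, standing in for resnet18."""

    def __init__(self):
        super().__init__()
        self.conv = nn.Conv2d(3, 8, kernel_size=3, padding=1)
        self.bn = nn.BatchNorm2d(8)
        self.drop = nn.Dropout(p=0.5)
        self.fc = nn.Linear(8, 10)

    def forward(self, x):
        x = torch.relu(self.bn(self.conv(x)))
        x = x.mean(dim=(2, 3))  # global average pooling over H and W
        return self.fc(self.drop(x))


model = TinyNet().eval()  # eval() turns off dropout and batchnorm updates

with torch.no_grad():
    # The random example input is only used to record the operations.
    traced = torch.jit.trace(model, torch.rand(1, 3, 32, 32))
    x = torch.ones(1, 3, 32, 32)
    out_eager = model(x)
    out_traced = traced(x)

max_diff = (out_eager - out_traced).abs().max().item()
matches = torch.allclose(out_eager, out_traced, atol=1e-5)
print(max_diff, matches)
```

The tolerance of 1e-5 is an arbitrary choice for this sketch; for real images you would run the same comparison on a handful of preprocessed inputs.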

Thanks for your attention.

I can reproduce this issue by running the code twice.

import torch

import torchvision

model = torchvision.models.resnet18()

# An example input you would normally provide to your model's forward() method.

example = torch.rand(1, 3, 224, 224)

# Use torch.jit.trace to generate a torch.jit.ScriptModule via tracing.

traced_script_module = torch.jit.trace(model, example)

output = traced_script_module.forward(torch.ones(1,3,224,224))

traced_script_module.save("traced_resnet_model.pt")

# e.g. first run:  tensor([-0.0645, 0.1313, -0.3563, -0.0869, 0.3209], grad_fn=<SliceBackward>)

# second run: tensor([-0.1508, -0.2974, 0.0963, -0.1596, -0.7377], grad_fn=<SliceBackward>)

print(output[0, :5])

Based on the code above, the first run gives tensor [-0.0645, 0.1313, -0.3563, -0.0869, 0.3209], and when I run it again I get tensor [-0.1508, -0.2974, 0.0963, -0.1596, -0.7377]. The only difference between the two runs is the variable example, so it seems to generate a different traced script. Besides, I compared the two binary traced_resnet_model.pt files, and they are completely different, as shown below.

>> cmp traced_resnet_model_1.pt traced_resnet_model_2.pt

>> traced_resnet_model_1.pt traced_resnet_model_2.pt differ: char 51, line 1

I don’t quite understand your posted code and the explanation, since the code doesn’t compare anything. Or do you mean you are getting different results by rerunning the entire script twice?

In the latter case, this would be expected, as you are randomly initializing the resnet18. If you want to load the pretrained model, use pretrained=True, or load a custom state_dict otherwise.
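A minimal sketch of why this happens is below. The `make_model` helper is hypothetical and uses a tiny linear model in place of resnet18 (an assumption made so the snippet runs without downloading weights): each construction draws new random weights, loading a shared state_dict removes the difference, and the random example input passed to the tracer does not affect the result.

```python
import torch
import torch.nn as nn


def make_model():
    # Hypothetical stand-in for torchvision.models.resnet18():
    # every call initializes the weights randomly.
    return nn.Sequential(nn.Flatten(), nn.Linear(3 * 8 * 8, 5)).eval()


x = torch.ones(1, 3, 8, 8)

with torch.no_grad():
    # Two fresh constructions have different random weights, so outputs differ.
    model_a, model_b = make_model(), make_model()
    differs = not torch.allclose(model_a(x), model_b(x))

    # Loading the same state_dict (as a pretrained checkpoint would) aligns them.
    model_b.load_state_dict(model_a.state_dict())
    same_after_load = torch.allclose(model_a(x), model_b(x))

    # Different random example inputs only drive the tracing pass;
    # both traces of the same model give the same output.
    traced_1 = torch.jit.trace(model_a, torch.rand(1, 3, 8, 8))
    traced_2 = torch.jit.trace(model_a, torch.rand(1, 3, 8, 8))
    same_traces = torch.allclose(traced_1(x), traced_2(x))

print(differs, same_after_load, same_traces)
```

So the differing .pt files come from the differing weights saved inside them, not from the example tensor.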

Wow, that’s it.

I understand now. When I use the pre-trained model, the traced script is the same every time and generates the same result no matter how many times I run it. Thank you for your patience!