I am using PyTorch 1.2 and the following code to estimate the available GPU memory:

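```python
import torch
from pynvml import nvmlInit, nvmlDeviceGetHandleByIndex, nvmlDeviceGetMemoryInfo

nvmlInit()  # NVML must be initialized before any other NVML call
h = nvmlDeviceGetHandleByIndex(0)
info = nvmlDeviceGetMemoryInfo(h)

# print(f'total : {info.total}')
print(f'free : {info.free}')
# print(f'used : {info.used}')

print('torch cached: {}'.format(torch.cuda.memory_cached(0)))
print('torch allocated: {}'.format(torch.cuda.memory_allocated(0)))

# free device memory + memory held by PyTorch's caching allocator but not occupied by tensors
remaining = info.free + (torch.cuda.memory_cached(0) - torch.cuda.memory_allocated(0))
```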

In summary, I use `free + (cached - allocated)` as the GPU memory available for allocating further tensors.
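The same estimate can be written as a small pure function, which makes the formula explicit and lets it be checked without a GPU. `estimate_available` is a hypothetical helper name, not part of PyTorch or pynvml; it takes byte counts such as `info.free`, `torch.cuda.memory_cached(0)` and `torch.cuda.memory_allocated(0)`.

```python
def estimate_available(free_bytes: int, cached_bytes: int, allocated_bytes: int) -> int:
    """Naive estimate (hypothetical helper): device memory reported free by NVML
    plus memory the caching allocator holds but has not handed out to tensors."""
    unused_cached = cached_bytes - allocated_bytes
    return free_bytes + unused_cached


if __name__ == "__main__":
    # Toy byte counts, not measurements.
    print(estimate_available(free_bytes=100, cached_bytes=50, allocated_bytes=30))  # 120
```

Note that this is a plain sum of byte counts. It says nothing about whether those bytes form one block large enough for a single tensor.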

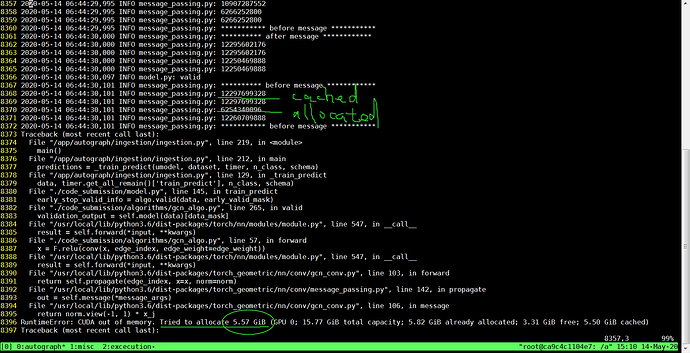

However, I encountered an error:

```
RuntimeError: CUDA out of memory. Tried to allocate 5.57 GiB (GPU 0; 15.77 GiB total capacity; 5.82 GiB already allocated; 3.31 GiB free; 5.50 GiB cached)
```

I checked the code here: https://github.com/pytorch/pytorch/blob/54a63e0420c2810930d848842142a07071204c06/c10/cuda/CUDACachingAllocator.cpp#L244

It seems that the “cached” value in the error message is `stats.amount_cached - stats.amount_allocated`.
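Under that reading, the fields of the error message can be related to one another. The sketch below only does arithmetic on the rounded GiB figures copied from the message. It assumes, as stated above, that the reported “cached” equals `amount_cached - amount_allocated`.

```python
# Figures copied from the error message, in GiB (rounded as printed).
total_capacity = 15.77
already_allocated = 5.82  # stats.amount_allocated
free_on_device = 3.31     # free device memory at the time of the failure
cached_reported = 5.50    # assumed to be stats.amount_cached - stats.amount_allocated

# Total memory held by the caching allocator under this assumption.
amount_cached = already_allocated + cached_reported
print(f"held by caching allocator: {amount_cached:.2f} GiB")  # 11.32

# Capacity not covered by either the allocator's reservation or the free figure.
unaccounted = total_capacity - amount_cached - free_on_device
print(f"not covered by these figures: {unaccounted:.2f} GiB")
```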

If so, that cached memory should be reusable, and free (3.31 GiB) + cached (5.50 GiB) = 8.81 GiB is larger than the 5.57 GiB I am trying to allocate.
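Here is the comparison from the previous sentence, written out with the rounded GiB figures from the error message. It simply restates the naive `free + cached` reasoning and does not model how the caching allocator actually places blocks.

```python
requested = 5.57        # "Tried to allocate 5.57 GiB"
free_on_device = 3.31   # "3.31 GiB free"
cached_reported = 5.50  # "5.50 GiB cached"

naive_available = free_on_device + cached_reported
print(f"naive available: {naive_available:.2f} GiB")  # 8.81
print(naive_available > requested)                    # True
```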

So what is the reason? I suspect I am misunderstanding some mechanism. Could you help me? Thanks!