Hi, I'm using `torch.autograd.profiler` to measure the training time of one batch (batch size 32) on AlexNet:

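```python
import torch

# AlexNet (the model instance), criterion, optimizer, data and target are defined elsewhere
with torch.autograd.profiler.profile(use_cuda=True) as prof:
    output = AlexNet(data)            # forward pass
    loss = criterion(output, target)  # loss computation
    loss.backward()                   # backward pass
    optimizer.step()                  # weight update

print(prof)
```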

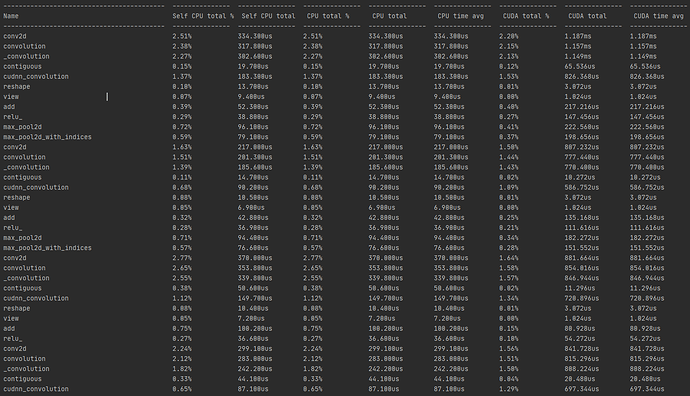

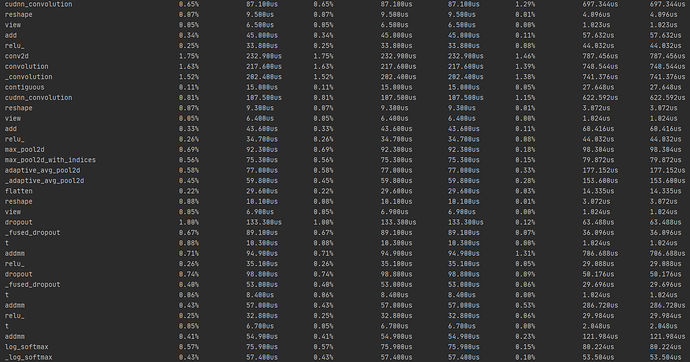

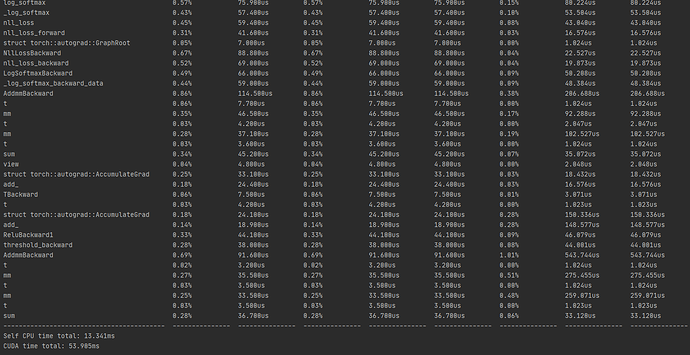

The result is shown below

I can explain the forward-pass records. For example, `conv2d`, `convolution`, `_convolution`, `contiguous`, `cudnn_convolution`, `reshape`, `view` and `add` together make up the forward time of Conv_1 in AlexNet, so I can relate the forward records to each layer. However, I don't understand the record names that come after `nll_loss_forward`. As I understand it, eight layers have weights to update (five conv layers and three fully-connected layers). Can anyone explain how the backward-pass records relate to these layers? For example, which records belong to the last fully-connected layer? I want to know the backward time of each layer.
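One way to see which backward records belong to which layer is to walk the autograd graph starting from the loss: each `grad_fn` node is the backward counterpart of one forward op, and following `next_functions` moves from the loss back toward the first layer. Below is a minimal sketch under some assumptions: the model is a tiny hypothetical stand-in (one conv layer and one fully-connected layer, not AlexNet), the loss is `nn.CrossEntropyLoss`, and `backward_nodes` is a hypothetical helper. Exact node names vary across PyTorch versions (for example `AddmmBackward0` in newer releases vs `AddmmBackward` in older ones). `AccumulateGrad` nodes are labeled with the parameter they write the gradient into, which ties them to a specific layer.

```python
import torch
import torch.nn as nn


def backward_nodes(loss, model):
    """Hypothetical helper: list autograd node names reachable from loss.grad_fn
    in depth-first order, labeling AccumulateGrad nodes with their parameter."""
    # Map each parameter tensor to its qualified name, e.g. "3.weight"
    param_names = {id(p): name for name, p in model.named_parameters()}
    names, seen, stack = [], set(), [loss.grad_fn]
    while stack:
        fn = stack.pop()
        if fn is None or fn in seen:  # None marks inputs that need no gradient
            continue
        seen.add(fn)
        label = type(fn).__name__
        # AccumulateGrad nodes store the gradient into a leaf tensor (a parameter)
        if hasattr(fn, "variable"):
            label += " (" + param_names.get(id(fn.variable), "?") + ")"
        names.append(label)
        # next_functions is a tuple of (node, input_nr) pairs; push them in
        # reverse so the node's first input is visited first
        for nxt, _ in reversed(fn.next_functions):
            stack.append(nxt)
    return names


# Tiny stand-in model (assumption, not AlexNet): conv -> ReLU -> flatten -> linear
torch.manual_seed(0)
model = nn.Sequential(
    nn.Conv2d(3, 4, kernel_size=3),  # 8x8 input -> 4 x 6 x 6 output
    nn.ReLU(),
    nn.Flatten(),
    nn.Linear(4 * 6 * 6, 10),
)
data = torch.randn(2, 3, 8, 8)
target = torch.tensor([1, 3])
loss = nn.CrossEntropyLoss()(model(data), target)

names = backward_nodes(loss, model)
for name in names:
    print(name)
```

For a plain sequential model like this, the nodes right after `NllLossBackward`/`LogSoftmaxBackward` belong to the last fully-connected layer (`AddmmBackward`, plus `TBackward` and the `AccumulateGrad` entries for `3.weight` and `3.bias`), and the conv layer's backward node appears further down the walk. Assuming the profiler names its backward records after these autograd nodes (alongside the low-level ops they call), the same grouping can be applied to the profiler table. With `use_cuda=True`, keep in mind that per-record times are approximate because CUDA kernels run asynchronously.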

Thanks for any suggestions.