Hi, I have a network that I pre-trained myself. Its last fully connected layer has 2 out_features. I want to load this network and add one node to the last fully connected layer, so that it has 3 out_features. This is quite similar to transfer learning. To be precise, I do not want to initialize the last layer. How can I do this?

Hi,

What do you mean by not initializing the last layer? How would this be different from transfer learning?

You can do it like this:

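    import torch.nn as nn

    class SubNet(nn.Module):
        def __init__(self):
            super().__init__()
            # Network is the pre-trained model; it returns two outputs
            self.net = Network()
            # Extra layer mapping the 2 existing outputs to 1 new output
            self.last_linear = nn.Linear(2, 1)

        def forward(self, x):
            y1, y2 = self.net(x)
            return y1, y2, self.last_linear(y2)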

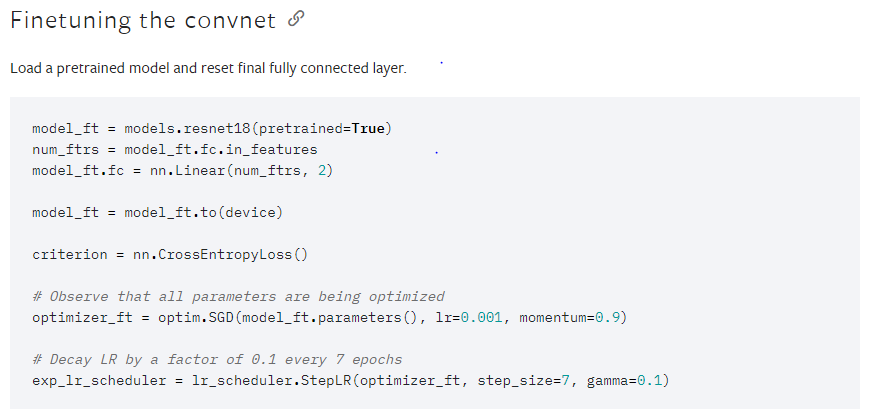

This is from the PyTorch transfer learning tutorial, which covers the ImageNet 1000-class case. The author says to reset the final fully connected layer, which means the 1000-class output is changed to a 2-class output and initialized. I actually want to do something similar: change the last layer from a 2-class output to a 3-class output, not go from 2 classes to 1 class or add one more layer.
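One way this could be done is to build a new `nn.Linear` with 3 out_features and copy the trained weights and bias of the old 2-output layer into its first two rows, so only the third node starts from a fresh initialization. The following is a minimal sketch, not a definitive implementation: `expand_linear` is a hypothetical helper name, and the small `nn.Sequential` model is only a stand-in for the real pre-trained network, whose last layer may live under a different attribute.

```python
import torch
import torch.nn as nn


def expand_linear(old: nn.Linear, new_out_features: int) -> nn.Linear:
    """Return a wider nn.Linear whose first rows reuse the parameters of `old`.

    Hypothetical helper for illustration; the extra rows keep PyTorch's
    default initialization.
    """
    if new_out_features < old.out_features:
        raise ValueError("new_out_features must be >= old.out_features")
    new = nn.Linear(old.in_features, new_out_features, bias=old.bias is not None)
    new = new.to(device=old.weight.device, dtype=old.weight.dtype)
    with torch.no_grad():
        # Copy the trained rows; rows beyond old.out_features stay freshly initialized
        new.weight[: old.out_features].copy_(old.weight)
        if old.bias is not None:
            new.bias[: old.out_features].copy_(old.bias)
    return new


# Stand-in for the pre-trained network (assumption: the last layer is an nn.Linear)
model = nn.Sequential(nn.Linear(8, 4), nn.ReLU(), nn.Linear(4, 2))
old_fc = model[2]

# Swap in the 3-output layer that keeps the two trained output nodes
model[2] = expand_linear(old_fc, 3)

x = torch.randn(5, 8)
out = model(x)  # shape (5, 3); the first two columns match the old network's output
```

With a real model, the same idea would apply after loading the checkpoint: replace the attribute that holds the last layer (for example something like `model.fc`, depending on how the network is defined) with the expanded layer, then continue training.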

Could you please check my code here? It is similar to the question above, and I solved it with my code.