Hey, I'd like to know how I can draw or extract a model graph. I know the computation graph gives graph-like results, but each node there can only be a tensor output by a specific differentiable operation.

In my case, I want the nodes of the graph to be the layers of the model instead of tensors. That is, I just want to know how a tensor flows from one layer to another.

For example, for a model defined like this:

    import torch


    class MyLayer(torch.nn.Module):
        def __init__(self, in_dim, out_dim):
            super().__init__()
            self.linear = torch.nn.Linear(in_dim, out_dim)
            self.relu = torch.nn.ReLU()

        def forward(self, x):
            return self.relu(self.linear(x))


    class MyModel(torch.nn.Module):
        def __init__(self):
            super().__init__()
            self.my_layer1 = MyLayer(10, 5)
            self.my_layer2 = MyLayer(10, 5)
            self.my_layer3 = MyLayer(5, 3)

        def forward(self, x):
            # Two parallel branches read the same input
            x1 = self.my_layer1(x)
            x2 = self.my_layer2(x)
            # The branches are summed, then passed to the last layer
            x3 = x1 + x2
            return self.my_layer3(x3)

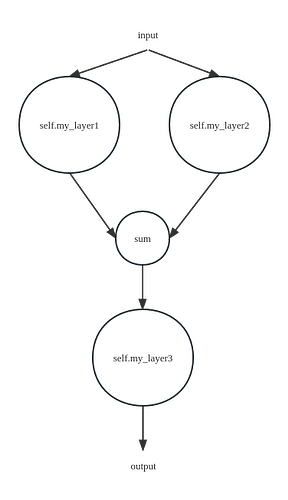

I want a graph that shows the how the input is processed between “MyLayer” instance like below:
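To make the target concrete, here is a rough sketch of the structure I'm hoping to get back, written by hand as an edge list. The node names `input`, `add`, and `output` and the helper `build_adjacency` are just placeholders I made up for illustration; only the `my_layer*` names come from the model above.

```python
# Hand-written sketch of the desired layer-level graph (not produced by any tool).
# "input", "add", and "output" are hypothetical node names for illustration.
expected_edges = [
    ("input", "my_layer1"),
    ("input", "my_layer2"),
    ("my_layer1", "add"),
    ("my_layer2", "add"),
    ("add", "my_layer3"),
    ("my_layer3", "output"),
]


def build_adjacency(edges):
    """Group edges into a dict mapping each source node to its successors."""
    graph = {}
    for src, dst in edges:
        graph.setdefault(src, []).append(dst)
    return graph


layer_graph = build_adjacency(expected_edges)
for node, successors in layer_graph.items():
    print(f"{node} -> {', '.join(successors)}")
```

The point is that `linear` and `relu` inside each `MyLayer` should not appear as separate nodes; each `MyLayer` instance should be a single node.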

Is there any library or method I can use to achieve this?