How do you extract the probabilities for, say, the top 10 results of a classifier (e.g. ResNet18) when predicting the class of an input image?

Thanks

If your last activation layer is a softmax, its output can be interpreted as a probability distribution over all your classes that sums to 1.
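To make the "sums to 1" point concrete, here is a minimal sketch of softmax written by hand in plain Python. The three scores are made-up values for illustration, not output from any model in this thread.

```python
import math

def softmax(scores):
    """Turn a list of raw scores into probabilities that sum to 1."""
    # Subtract the max before exponentiating for numerical stability.
    top = max(scores)
    exps = [math.exp(s - top) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]

# Hypothetical raw scores for three classes.
scores = [2.0, 1.0, 0.1]
probs = softmax(scores)

print(probs)       # one probability per class, largest score first here
print(sum(probs))  # 1.0 up to floating-point rounding
```

The class with the largest raw score always ends up with the largest probability, so the predicted label does not change; only the scale of the numbers does.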

@mratsim

So, I am classifying images using the code below:

Code:

    import torch
    from PIL import Image
    from torch.autograd import Variable

    # `loader` (the preprocessing transform) and `filename` are defined elsewhere; not shown in this post

    def image_loader(image_name):
        image = Image.open(image_name)
        image = loader(image).float()
        image = Variable(image, requires_grad=True)
        image = image.unsqueeze(0)  # this is for VGG, may not be needed for ResNet
        return image.cuda()  # assumes that you're using GPU

    image = image_loader('/var/www/html/' + filename)
    net = torch.load('resnet50_trained3.pth')
    print(net)
    net.eval()

    output = net(image)
    print(output)

    # index of the highest-scoring class
    _, predicted = torch.max(output, 1)
    classification1 = predicted.data[0][0]
    index = int(classification1)

    names = net.classes
    print(names[index])

I printed out the output of the net and got the following. What exactly is this?

I am trying to get the probability distribution for each of the classes.

Output:

    Columns 0 to 9
     3.6295 -3.4569 -6.6588 -3.6976 -3.2954 -4.6076 -3.3301 -4.4151 -8.7112 -3.3557

    Columns 10 to 19
    -4.3437 -3.2967 -3.6236 -6.1517 -2.8511 -0.3418 -2.8497 -6.0070 -6.8882 -1.3023

    [torch.cuda.FloatTensor of size 1x20 (GPU 0)]

Thanks

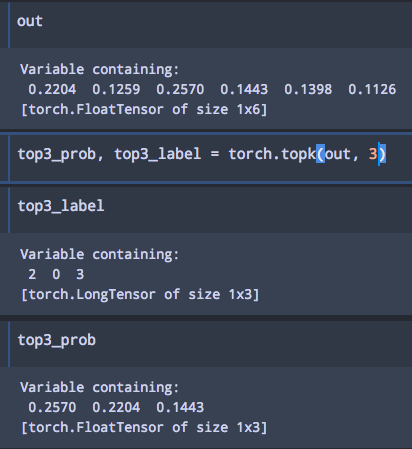

http://pytorch.org/docs/torch.html?highlight=topk#torch.topk

This function will give you the top k labels.

@SherlockLiao

Right, but I was hoping to get the exact probabilities for each class. I’m already able to extract topk labels.

Thanks

Maybe you can get the probability by indexing output[label] for each label, one by one.

@SherlockLiao @jekbradbury

I printed out the output of the net after feeding in a picture and got the following:

    Columns 0 to 9
     3.6295 -3.4569 -6.6588 -3.6976 -3.2954 -4.6076 -3.3301 -4.4151 -8.7112 -3.3557

    Columns 10 to 19
    -4.3437 -3.2967 -3.6236 -6.1517 -2.8511 -0.3418 -2.8497 -6.0070 -6.8882 -1.3023

    [torch.cuda.FloatTensor of size 1x20 (GPU 0)]

There are 20 classes total, so it makes sense that there are 20 columns.

Not sure what these values signify, though.

You can use torch.nn.functional.softmax(input) to get the probabilities, then use the topk function to get the top k labels and probabilities. There are 20 classes in your output; you can see the 1x20 on the last line.

By the way, topk has a dimension parameter (dim) to choose which dimension to search along, and it gives you both the label and the probability, so you can take whichever you want.
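As a rough sketch of that suggestion, the snippet below applies softmax and then topk to the 20 raw scores from the printout earlier in the thread. The scores are typed in by hand on the CPU so the example runs without the trained model; with a real network, `output` would come from `net(image)`.

```python
import torch
import torch.nn.functional as F

# Raw scores copied from the printout above (1 image x 20 classes).
output = torch.tensor([[
    3.6295, -3.4569, -6.6588, -3.6976, -3.2954,
    -4.6076, -3.3301, -4.4151, -8.7112, -3.3557,
    -4.3437, -3.2967, -3.6236, -6.1517, -2.8511,
    -0.3418, -2.8497, -6.0070, -6.8882, -1.3023,
]])

# Normalize across the class dimension so the row sums to 1.
probabilities = F.softmax(output, dim=1)

# topk returns (values, indices): here the probabilities and the class labels.
top5_prob, top5_label = probabilities.topk(5, dim=1)

for prob, label in zip(top5_prob[0].tolist(), top5_label[0].tolist()):
    print(f"class {label}: {prob:.4f}")
```

The first element returned by `topk` is the one the earlier label-only code discarded with `_`; keeping it gives the probability that goes with each label.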

Can you give an example on how to use topk to extract probability?

I am able to get labels, using the following code:

    _, predictedTop5_test = output.topk(5)
    label1 = predictedTop5_test.data[0][0]
    label2 = predictedTop5_test.data[0][1]
    label3 = predictedTop5_test.data[0][2]
    label4 = predictedTop5_test.data[0][3]
    label5 = predictedTop5_test.data[0][4]

How do you edit this to get probabilities for each class?

@SherlockLiao

Hmm, I don’t seem to get the same topk_prob as you:

For example, this is my top5_prob printout:

    Variable containing:
     3.6295 -0.3418 -1.3023 -2.8497 -2.8511
    [torch.cuda.FloatTensor of size 1x5 (GPU 0)]

Not sure what these values are and how I can convert the values to probabilities.

You should append F.softmax to the output of your model, or run those values through a softmax first. I’m assuming the last layer of your model is a linear layer?
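One way to "append" a softmax, sketched under assumptions: wrap the model in `nn.Sequential` together with `nn.Softmax(dim=1)`. The `nn.Linear(8, 20)` below is only a hypothetical stand-in for a trained classifier whose last layer is linear, and the input batch is random.

```python
import torch
import torch.nn as nn

# Hypothetical stand-in for a trained classifier ending in a linear layer:
# 8 input features, 20 classes.
model = nn.Linear(8, 20)

# Append a softmax over the class dimension to the model's output.
model_with_probs = nn.Sequential(model, nn.Softmax(dim=1))
model_with_probs.eval()

with torch.no_grad():
    batch = torch.randn(4, 8)  # 4 made-up inputs
    probabilities = model_with_probs(batch)

print(probabilities.shape)       # one row of 20 probabilities per input
print(probabilities.sum(dim=1))  # each row sums to 1 (up to rounding)
```

If the model is trained with `nn.CrossEntropyLoss`, the wrapper is best kept for inference only, since that loss expects the raw scores rather than probabilities.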

@Flo is right, you need to use F.softmax to get the probabilities; then you can get the top-k probabilities and labels like in my code.
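The order of the two steps matters, which the following sketch tries to show using the 20 raw scores posted earlier in the thread (typed in by hand, on the CPU). Softmax has to see all classes; applying it to only the five top raw scores renormalizes them among themselves and gives different numbers.

```python
import torch
import torch.nn.functional as F

# Raw scores from the thread (1 image x 20 classes).
output = torch.tensor([[
    3.6295, -3.4569, -6.6588, -3.6976, -3.2954,
    -4.6076, -3.3301, -4.4151, -8.7112, -3.3557,
    -4.3437, -3.2967, -3.6236, -6.1517, -2.8511,
    -0.3418, -2.8497, -6.0070, -6.8882, -1.3023,
]])

# Softmax over all 20 classes first, then pick the top 5.
top5_prob, top5_label = F.softmax(output, dim=1).topk(5, dim=1)

# Pitfall: softmax over only the top-5 raw scores ignores the other 15 classes.
top5_score, _ = output.topk(5, dim=1)
renormalized = F.softmax(top5_score, dim=1)

print(top5_prob.sum().item())     # below 1: the other 15 classes hold the rest
print(renormalized.sum().item())  # 1 up to rounding: not the class probabilities
```

The labels come out the same either way, because softmax preserves the ranking of the scores; only the probability values differ.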

I was looking around for ages trying to figure this out and couldn't find any examples, so here is what I used, which was stupidly simple in the end:

    import torch
    from PIL import Image
    from torch.autograd import Variable

    # `loader` (the preprocessing transform) and `filename` are defined elsewhere; not shown in this post

    def image_loader(image_name):
        image = Image.open(image_name)
        image = loader(image).float()
        image = Variable(image, requires_grad=True)
        image = image.unsqueeze(0)  # this is for VGG, may not be needed for ResNet
        return image.cuda()  # assumes that you're using GPU

    image = image_loader('/var/www/html/' + filename)
    net = torch.load('resnet50_trained3.pth')
    print(net)
    net.eval()

    output = net(image)
    print(output)  # raw, unnormalized class scores from the last layer

    sm = torch.nn.Softmax(dim=1)  # normalize across the class dimension
    probabilities = sm(output)
    print(probabilities)  # converted to probabilities

Really great question. I have been using the method below. Passing the dimension to softmax is required if you want, for example, the class probabilities of a whole batch: the output is then a 2D tensor and, as with a 2D NumPy array, you have to specify the dimension.

    with torch.no_grad():
        model.eval()
        for data, label in tqdm(test_loader):
            data = data.to(device)
            label = label.to(device)
            output = model(data)
            sm = torch.nn.Softmax(dim=1)
            probabilities = sm(output)

Hope this helps.
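To see why the dimension matters for a batch, here is a small sketch with a made-up 2x3 tensor of raw scores (2 samples, 3 classes). It assumes the usual layout where rows are samples and columns are classes.

```python
import torch

# Hypothetical batch of raw scores: 2 samples x 3 classes.
output = torch.tensor([[1.0, 2.0, 3.0],
                       [1.0, 2.0, 5.0]])

per_sample = torch.softmax(output, dim=1)  # normalize across classes
per_class = torch.softmax(output, dim=0)   # normalize across the batch

print(per_sample.sum(dim=1))  # each sample's class probabilities sum to 1
print(per_class.sum(dim=1))   # rows no longer sum to 1: not class probabilities
```

With `dim=0` each column sums to 1 instead, which compares samples against each other rather than classes, so `dim=1` is the one to use for per-sample class probabilities in this layout.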