Hi, everyone.

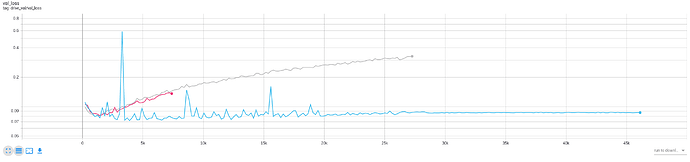

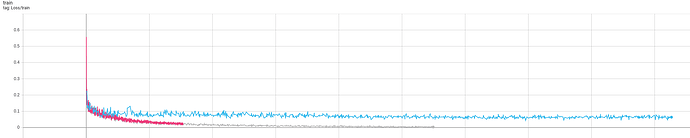

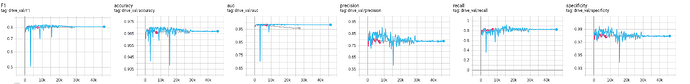

I am trying to use weight decay to regularize the loss function (an L2 penalty on the weights). I set the weight_decay of Adam to 0.01 (blue), 0.005 (gray), and 0.001 (red), and got the results shown in the pictures.

It seems that 0.01 is too large and 0.005 is too small, or maybe something is wrong with my model or my data.
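For reference, here is a minimal pure-Python sketch of one Adam step for a single scalar weight. It mirrors how `torch.optim.Adam` documents its `weight_decay` option: the term `weight_decay * p` is added to the gradient (coupled L2), unlike `AdamW`, which decouples it. The names `adam_step` and `new_state` are illustrative only, not PyTorch code. In the real training loop the setting is just `torch.optim.Adam(model.parameters(), lr=0.001, weight_decay=0.005)`.

```python
import math

def new_state():
    # Per-parameter optimizer state: step count, first and second moments
    return {"t": 0, "m": 0.0, "v": 0.0}

def adam_step(p, grad, state, lr=1e-3, betas=(0.9, 0.999), eps=1e-8, weight_decay=0.0):
    """One Adam update for a scalar weight p, with coupled (L2-style) weight decay."""
    b1, b2 = betas
    # Coupled weight decay: the penalty gradient is added to the loss gradient
    g = grad + weight_decay * p
    state["t"] += 1
    t = state["t"]
    # Exponential moving averages of the gradient and squared gradient
    state["m"] = b1 * state["m"] + (1 - b1) * g
    state["v"] = b2 * state["v"] + (1 - b2) * g * g
    # Bias correction for the zero-initialized moments
    m_hat = state["m"] / (1 - b1 ** t)
    v_hat = state["v"] / (1 - b2 ** t)
    return p - lr * m_hat / (math.sqrt(v_hat) + eps)

# Same three settings as in the plots, starting from weight 1.0 with zero loss gradient
for wd in (0.01, 0.005, 0.001):
    p = adam_step(1.0, grad=0.0, state=new_state(), lr=1e-3, weight_decay=wd)
    print(wd, p)
```

With a zero loss gradient, the first step moves the weight by about `lr` for all three values, because Adam divides the combined gradient by `sqrt(v_hat)`. So the decay term is not applied as a plain `lr * weight_decay * p` shrink; that decoupled form is what `AdamW` uses.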

I use U-Net as the model and the DRIVE dataset as data. I use grayscale images as input and augment the dataset with CLAHE, gamma correction, and flipping. The learning rate is 0.001.
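A minimal sketch of the gamma-correction and flip steps, assuming 8-bit grayscale images and binary vessel masks stored as nested lists (in practice these would be NumPy arrays, and CLAHE is usually done with OpenCV's `cv2.createCLAHE`, which is left out here). All function names are hypothetical. The point it illustrates is that intensity changes apply to the image only, while a geometric change like a flip has to be applied to the mask as well, or the labels no longer line up.

```python
def gamma_lut(gamma):
    # 256-entry table mapping v -> 255 * (v / 255) ** gamma, rounded to an integer
    return [round(255 * (v / 255) ** gamma) for v in range(256)]

def apply_gamma(img, gamma):
    # Intensity transform: look up every pixel in the precomputed table
    lut = gamma_lut(gamma)
    return [[lut[v] for v in row] for row in img]

def hflip(img):
    # Horizontal flip: reverse the pixel order within each row
    return [row[::-1] for row in img]

def augment_pair(img, mask, gamma=1.0, flip=False):
    # Gamma only touches the image; the flip must hit both image and mask
    out_img = apply_gamma(img, gamma)
    out_mask = mask
    if flip:
        out_img, out_mask = hflip(out_img), hflip(out_mask)
    return out_img, out_mask

img, mask = augment_pair([[0, 128, 255]], [[0, 0, 1]], gamma=2.0, flip=True)
print(img, mask)
```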

Also, my model always gets its best result at the beginning of training!
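If the best validation score really does come early, one common way to handle it is to keep the best checkpoint and stop after a few epochs without improvement. A minimal sketch, assuming one validation score per epoch where higher is better (for example Dice on the validation split). `BestTracker` is a hypothetical helper, and the scores in the loop are made-up numbers for illustration only.

```python
class BestTracker:
    """Track the best validation score and count epochs without improvement."""

    def __init__(self, patience):
        self.patience = patience
        self.best = float("-inf")
        self.best_epoch = None
        self.bad_epochs = 0

    def update(self, epoch, score):
        # Returns True when this epoch is a new best (a checkpoint would be saved here)
        if score > self.best:
            self.best, self.best_epoch, self.bad_epochs = score, epoch, 0
            return True
        self.bad_epochs += 1
        return False

    def should_stop(self):
        return self.bad_epochs >= self.patience

tracker = BestTracker(patience=3)
for epoch, score in enumerate([0.80, 0.78, 0.79, 0.77, 0.76]):  # hypothetical scores
    tracker.update(epoch, score)
    if tracker.should_stop():
        break
print(tracker.best_epoch, tracker.best, epoch)
```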

Thank you