I have read the transfer learning tutorial in the docs, but my situation is a little different: I need to add dropout to the fc layer. How do I modify the model and write the forward? (Another question: in Keras I don't need to write a forward even when I have modified a layer, so why is there a forward in PyTorch?)

If you want to add dropout or make other changes, copy this file: https://github.com/pytorch/vision/blob/master/torchvision/models/inception.py#L119 and edit the Python code of forward to insert the dropout.
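Another option, used when you only want dropout in front of the classifier, is to swap `model.fc` for an `nn.Sequential(nn.Dropout(p), nn.Linear(...))`. This works without copying the file because the model's forward calls `self.fc(x)` as a module, so whatever you assign to `fc` gets used. The sketch below uses a tiny hypothetical stand-in (`TinyBackbone`) with the same `features -> pooled 2048-d vector -> self.fc` layout instead of the real Inception v3, so it runs without torchvision; `add_dropout_to_fc` is likewise a made-up helper name.

```python
import torch
import torch.nn as nn


class TinyBackbone(nn.Module):
    """Hypothetical stand-in for a torchvision model whose last layer is self.fc."""

    def __init__(self, num_classes=1000):
        super().__init__()
        self.features = nn.Sequential(nn.Conv2d(3, 2048, kernel_size=3, padding=1), nn.ReLU())
        self.pool = nn.AdaptiveAvgPool2d(1)
        self.fc = nn.Linear(2048, num_classes)

    def forward(self, x):
        x = self.pool(self.features(x))  # N x 2048 x 1 x 1
        x = torch.flatten(x, 1)          # N x 2048
        return self.fc(x)                # forward only ever calls self.fc(...)


def add_dropout_to_fc(model, num_classes, p=0.5):
    # Hypothetical helper: replace the classifier with dropout followed by a new Linear layer
    in_features = model.fc.in_features
    model.fc = nn.Sequential(nn.Dropout(p=p), nn.Linear(in_features, num_classes))
    return model


model = add_dropout_to_fc(TinyBackbone(), num_classes=10, p=0.5)
model.eval()  # dropout is inactive in eval mode
out = model(torch.randn(2, 3, 8, 8))
print(out.shape)  # torch.Size([2, 10])
```

With a real torchvision model, the same assignment to `model.fc` applies; the auxiliary classifier in Inception v3 has its own `fc`, which this sketch does not touch.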

PyTorch models are Python programs. Keras models are graph data structures. That’s why you write a forward in PyTorch.
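A small illustration of "models are Python programs": layers are created in `__init__`, and `forward` is ordinary Python that decides how they are applied, so adding dropout means adding one line to it. `Head` and its sizes are hypothetical, chosen only for the sketch.

```python
import torch
import torch.nn as nn
import torch.nn.functional as F


class Head(nn.Module):
    """Hypothetical classifier head on top of a 2048-d feature vector."""

    def __init__(self, in_features=2048, num_classes=10, p=0.5):
        super().__init__()
        self.p = p
        self.fc1 = nn.Linear(in_features, 256)
        self.fc2 = nn.Linear(256, num_classes)

    def forward(self, x):
        x = F.relu(self.fc1(x))
        # Inserted dropout; training=self.training ties it to model.train() / model.eval()
        x = F.dropout(x, p=self.p, training=self.training)
        return self.fc2(x)


head = Head()
head.eval()
x = torch.randn(4, 2048)
y1 = head(x)
y2 = head(x)
print(y1.shape)             # torch.Size([4, 10])
print(torch.equal(y1, y2))  # True: dropout is disabled in eval mode
```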

Thank you, good idea.

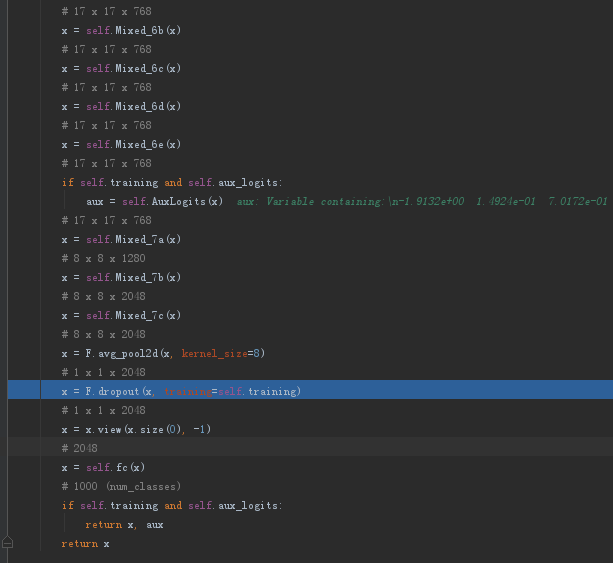

I found that Inception v3 already applies dropout right before the fc layer.

But the shape hint in the comments confuses me: the size of x stays the same (1x1x2048) before and after `x = F.dropout(x, training=self.training)`.
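The unchanged size is expected: dropout never removes elements, it zeroes a random fraction `p` of them during training and scales the survivors by `1/(1 - p)`; in eval mode it returns the input unchanged. The sketch below checks this on a ones vector of the same 2048-d size (the all-ones input is just for readability).

```python
import torch
import torch.nn.functional as F

torch.manual_seed(0)
x = torch.ones(1, 2048)  # same feature size as the vector fed to fc in Inception v3

y_train = F.dropout(x, p=0.5, training=True)   # what happens in model.train()
y_eval = F.dropout(x, p=0.5, training=False)   # what happens in model.eval()

frac_zeroed = (y_train == 0).float().mean().item()
print(y_train.shape)            # torch.Size([1, 2048]): shape is unchanged
print(torch.unique(y_train))    # only 0. and 2. (survivors scaled by 1 / (1 - 0.5))
print(torch.equal(y_eval, x))   # True: no-op in eval mode
print(frac_zeroed)              # roughly 0.5
```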

Does the dropout take effect at all? And if I want to add more dropout, is it enough to just raise the drop rate, given that there is already dropout before the fc layer (`x = F.dropout(x, training=self.training)`)?
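On the second question: `F.dropout` defaults to `p=0.5`, so that line already drops half of the features during training. Raising `p` on it (for example `F.dropout(x, p=0.7, training=self.training)`) and stacking a second dropout right after it end up at the same place: an element survives only if it survives every layer, so two layers with `p1`, `p2` behave like one layer with `1 - (1 - p1) * (1 - p2)`. The helper names `stacked_dropout` and `equivalent_p` below are hypothetical, used only to check that empirically.

```python
import torch
import torch.nn.functional as F


def stacked_dropout(x, p1, p2, training=True):
    # Two independent dropout layers applied back to back
    x = F.dropout(x, p=p1, training=training)
    return F.dropout(x, p=p2, training=training)


def equivalent_p(p1, p2):
    # An element is kept only if both layers keep it
    return 1 - (1 - p1) * (1 - p2)


torch.manual_seed(0)
x = torch.ones(100_000)
y = stacked_dropout(x, 0.5, 0.5)
p_eff = equivalent_p(0.5, 0.5)
frac_zeroed = (y == 0).float().mean().item()
print(p_eff)                # 0.75
print(frac_zeroed)          # close to 0.75
print(torch.unique(y))      # only 0. and 4.: same 1 / (1 - 0.75) scaling as a single p=0.75 layer
```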