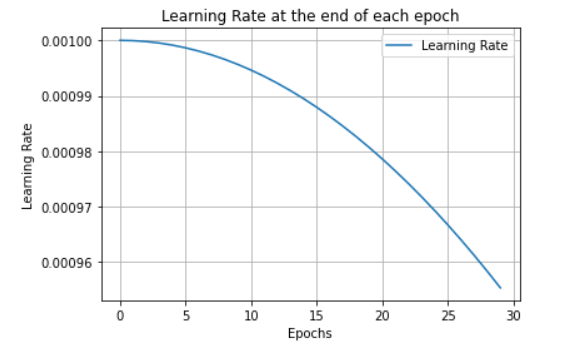

I am using a cosine annealing scheduler, but it is adjusting the learning rate by only a very small amount.

This is how it is initialized:

`lrs = torch.optim.lr_scheduler.CosineAnnealingLR(optimizer, T_max=len(train_loader))`

And this is how it is called:

    for i in range(epoch):
        trn_corr = 0
        tst_corr = 0

        # lrs.step()
        # adjust the learning rate after 30 epochs
        # adjust_learning_rate(optimizer, i, learning_rate)

        # run training for one epoch
        train(train_loader, MobileNet, criterion, optimizer, i, trn_corr)

        # evaluate on the validation/test set
        test(test_loader, MobileNet, criterion, i, epoch, tst_corr)

        lrs.step()

Inside the training loop, the optimizer is stepped like so:

    # Update parameters
    optimizer.zero_grad()
    loss.backward()
    optimizer.step()
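
To put numbers on "such a small rate", here is a rough sketch in plain Python (no PyTorch needed) of the closed-form schedule given in the `CosineAnnealingLR` docs, `eta_min + (base_lr - eta_min) * (1 + cos(pi * t / T_max)) / 2`. The base learning rate of 0.1, the 500 batches per epoch, and the 30 epochs are placeholder assumptions, not values from the actual run, and `cosine_annealing_lr` is a hypothetical helper, not a PyTorch function. It compares `T_max` set to the number of batches against `T_max` set to the number of epochs, with the scheduler stepped once per epoch as in the loop above.

```python
import math


def cosine_annealing_lr(step, t_max, base_lr, eta_min=0.0):
    """Closed-form cosine annealing value after `step` scheduler steps."""
    return eta_min + (base_lr - eta_min) * (1 + math.cos(math.pi * step / t_max)) / 2


# All three values below are assumptions chosen for illustration only.
base_lr = 0.1            # placeholder initial learning rate
batches_per_epoch = 500  # stand-in for len(train_loader)
num_epochs = 30          # stand-in for the `epoch` variable

# The scheduler is stepped once per epoch, so `step` equals the epoch index.
for epoch_idx in (0, 10, 20, 30):
    lr_tmax_batches = cosine_annealing_lr(epoch_idx, batches_per_epoch, base_lr)
    lr_tmax_epochs = cosine_annealing_lr(epoch_idx, num_epochs, base_lr)
    print(f"epoch {epoch_idx:2d}: "
          f"T_max=batches -> {lr_tmax_batches:.5f}, "
          f"T_max=epochs -> {lr_tmax_epochs:.5f}")
```

Under these assumed numbers, the schedule with `T_max` equal to the batch count has lost less than 1% of the base rate after 30 epoch-level steps, while the one with `T_max` equal to the epoch count has decayed all the way to `eta_min`. That might be what is happening here, since `T_max` counts calls to `lrs.step()` and not batches.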