Greetings! I am using a Bayesian neural network, and random sampling in PyTorch keeps yielding NaN in some epochs. Does anyone know how to fix this? I have tried:

Trial 1: torch.empty(120).uniform_(-.01, 0.01)

Trial 2: torch.randn(120).uniform_(-.01, 0.01)

Trial 3: torch.distributions.Uniform(-.01, 0.01).sample((1, 120))[0]

Trial 4: torch.torch.distributions.Uniform(-.01, 0.01).sample((1, 120))[0]

I also tried wrapping each of the above trials in torch.nan_to_num, for example torch.nan_to_num(torch.torch.distributions.Uniform(-.01, 0.01).sample((1, out_features))[0], nan=torch.tensor(0.0037)), but this does not work in the training loop. In particular, only the inner part torch.torch.distributions.Uniform(-.01, 0.01).sample((1, out_features))[0] seemed to take effect, and the result still contained NaN.
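For reference, here is a minimal sketch of how torch.nan_to_num replaces NaN entries when the replacement is passed as a plain Python float, which is the type the documented nan argument takes. The input tensor is made up for illustration and does not come from the training run. Note that cleaning only the freshly sampled tensor cannot remove a NaN that is created later in the forward or backward pass.

```python
import torch

# Made-up tensor with one NaN entry (illustration only, not training data).
x = torch.tensor([0.25, float("nan"), -1.5])

# Replace NaN with a plain float; finite entries are left unchanged.
cleaned = torch.nan_to_num(x, nan=0.0037)

print(cleaned)
print(torch.isnan(cleaned).any().item())
```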

The NaN results might not be reproducible from the trial snippets above alone. Please let me know if you need more detail to reproduce them.
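As a starting point for reproduction, the sampling calls can be checked in isolation. This is a minimal sketch, assuming a fixed seed and a size of 120; the names n, low, high, and samples are introduced here for illustration. It only tests whether the raw draws are finite and within range, not what happens to them later in the network.

```python
import torch

torch.manual_seed(0)  # assumption: fixed seed only so the check is repeatable

n = 120
low, high = -0.01, 0.01

# The sampling variants from the trials above, run outside any training loop.
samples = {
    "trial_1": torch.empty(n).uniform_(low, high),
    "trial_2": torch.randn(n).uniform_(low, high),
    "trial_3": torch.distributions.Uniform(low, high).sample((1, n))[0],
}

for name, s in samples.items():
    # Report shape, whether all values are finite, and the observed range.
    all_finite = torch.isfinite(s).all().item()
    print(name, tuple(s.shape), all_finite, s.min().item(), s.max().item())
```

If every draw here is finite and within the bounds, the NaN is more likely to come from an operation applied after sampling than from the sampler itself.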

This is the result from one epoch using torch.torch.distributions.Uniform(-.01, 0.01).sample((1, 120))[0], which contains a NaN:

tensor([-1.4659, 0.3137, 0.1822, 0.1657, 0.2099, -0.1929, 0.2386, 0.4739,
0.2335, 0.2515, 0.2949, 0.2874, 0.1460, -2.9367, 0.6829, 0.2574,
0.4183, 0.3023, 0.4242, -1.0063, 0.3041, nan, 0.2051, 0.1333,
0.3006, 0.2397, 0.2347, 0.1516, 0.3593, 0.2641, 0.1562, -0.5621,
-0.2820, 0.3090, 0.1144, 0.5003, 0.3033, 0.1153, 0.3229, 0.4727,
0.0221, 0.2196, 0.3357, 0.1594, 0.3025, -0.3293, 0.0314, 0.1627,
0.0971, -0.7528, 0.3589, 0.2749, 0.3002, 0.1839, -0.5211, -0.5340,
0.2862, 0.3999, 0.1999, 0.0734, 0.3181, 0.1325, -0.6105, 0.2866,
0.2635, 0.2449, 0.1772, 0.2520, -4.4859, 0.4437, 0.2694, 0.1909,
0.4727, 0.2047, 0.3235, 0.4658, -1.2267, 0.1527, 0.0942, 0.5141,
0.6169, 0.2492, 0.0831, 0.0781, 0.0855, 0.1781, 0.1907, 0.5520,
0.3903, 0.1470, 0.3136, 0.3869, -7.8619, 0.1830, 0.1720, 0.3044,
0.1460, 0.3055, 0.1487, -0.0105, -2.5014, 0.0465, -0.2051, 0.2039,
-4.9786, 0.3331, 0.3496, -0.0930, 0.2421, -3.2148, 0.2964, 0.2464,
0.3046, 0.2449, 0.2755, 0.3433, 0.3893, 0.3142, 0.0477, 0.1953])
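Several values in this tensor (for example -7.8619) lie far outside [-0.01, 0.01], which suggests it is not the raw sample but something computed from it. Under that assumption, a small check placed on intermediate tensors can help narrow down where the first non-finite value appears. The helper report_nonfinite and the example tensor below are hypothetical and not part of the training code.

```python
import torch

def report_nonfinite(name, t):
    """Print and return the flat indices of NaN/inf entries in t (hypothetical helper)."""
    bad = (~torch.isfinite(t.flatten())).nonzero(as_tuple=True)[0].tolist()
    if bad:
        print(f"{name}: non-finite values at flat indices {bad}")
    return bad

# Made-up tensor standing in for an intermediate result in the forward pass.
out = torch.tensor([0.4183, 0.3023, float("nan"), 0.2051])
bad_idx = report_nonfinite("layer_output", out)
```

Calling such a check after each step of the forward pass shows which operation first produces a non-finite value. For the backward pass, torch.autograd.set_detect_anomaly(True) is a built-in option that raises an error at the operation whose gradient becomes NaN, at the cost of slower training.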

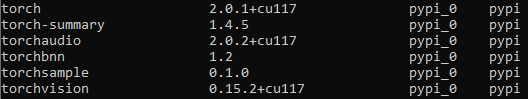

The versions of packages relating to pytorch are here:

I have spent nearly a month looking for a solution without success, so I am asking for help here. Any help would be appreciated. Thank you very much.